Copyright © 2004 The Shuttleworth Foundation

Unless otherwise expressly stated, all original material of whatever nature created by the contributors of the Learn Linux community, is licensed under the Creative Commons license Attribution-ShareAlike 2.0.

What follows is a copy of the "human-readable summary" of this document. The Legal Code (full license) may be read here.

You are free:

to copy, distribute, display, and perform the work

to make derivative works

to make commercial use of the work

Under the following conditions:

Attribution. You must give the original author credit.

Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one.

For any reuse or distribution, you must make clear to others the license terms of this work.

Any of these conditions can be waived if you get permission from the copyright holder.

Your fair use and other rights are in no way affected by the above.

This is a human-readable summary of the Legal Code (the full license).

2005-01-25 19:34:32

| Revision History | |
|---|---|
| Revision 0.0.1 | 01/NOV/2004 |
| Initial version | |

Table of Contents

- 1. Network Administration Fundamentals

- Introducing the TCP/IP Model

- The OSI Model

- Relating the TCP and OSI Models

- Overview of how the TCP/IP Model Works

- A deeper look at the TCP protocol layers

- Network Layer and Understanding IP addressing

- Choosing the Class of network that you will use

- Why use sub-netting

- Summary - TCP/IP Stack

- Transferring information across the Internet

- Test the network with Ping

- Creating and using the ARP table

- Explaining routers

- Briefly on LAN's and WAN's

- How to put an IP address onto your network card

- Packets, frames, bytes, octets and datagrams

- The network interfaces that you'll see if you run ifconfig -a

- Setting up multiple cards in one machine

- Logical and physical networks

- Plumbing a device

- Routing and using the "netstat" command

- Wrap-up

- 2. Client/Server Technology

- 3. Network Architecture

- 4. IP Address Allocation

- 5. Basic Network Configuration

- 6. Electronic Mail

- 7. Domain Naming System

- 8. SAMBA

- 9. Basic network troubleshooting

- 10. Basics of network security

- 11. Network, System and Service Security

- 12. Network File System (NFS)

- 13. Remote access

- 14. Connecting remotely to the X Window System

- 15. Connecting to an ISP

- A. Practical lab

- Index

List of Figures

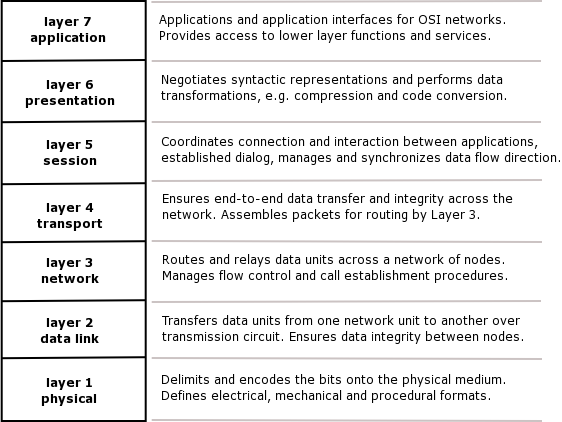

- 1.1. The OSI Model

List of Tables

The course we are doing now is really the foundation for TCP/IP and network administration. Before we can administer a networked box (a Linux machine), we need to understand more about TCP/IP.

The entire Internet is based upon the TCP/IP protocol.

In fact TCP/IP was developed on Unix many years ago and was the fundamental building block when building the Linux networking environment.

TCP/IP stands for Transmission Control Protocol/Internet Protocol.

Two transport protocols run on top of IP:

TCP (Transmission Control Protocol)

UDP (User Datagram Protocol)

Essentially the IP protocol is the main protocol for transferring information across the Internet.

So before we explore how TCP/IP works, let's have a look at the underlying structure of how it is supposed to work.

TCP/IP is essentially built up as a stack. Think of it as a stack of books, each book representing a different layer (5 layers in all).

At the lowest level we have the physical layer. Often the physical layer will be Ethernet, Fast Ethernet or Gigabit Ethernet; it might also be Token Ring or Fiber Distributed Data Interface (FDDI), amongst many possibilities.

Essentially, what that boils down to is the cabling, the network cards, the switches and the routers: what do they talk at the physical layer?

Are they gigabit devices, Ethernet devices or Token Ring devices?

This level would consist of the device driver and network interface card and is responsible for forming the packets and then transmitting them across the physical media.

This layer is referred to as the Media Access Control Layer, the Link Layer or Layer-2.

The MAC layer is responsible for the MAC address of a network card. This is a 6 byte (48 bit) address, written as 12 hexadecimal digits, and is commonly the hardware address of the interface card. The MAC address on my only interface card is: 00:01:03:8C:FB:01. Since any two machines can only talk to one another at the MAC level, they each need to know the other's MAC address in order to communicate.

The destination address of each packet is checked at this level. When the correct destination is reached, the Ethernet header is stripped from the packet and the packet is passed to the Network layer of that system.

The network layer is responsible for IP addressing in the network. In fact, the network layer is critical to so many aspects of communication and it is where IP gets its name - the Internet Protocol. IP addresses denote the logical network as well as the address of each device on the network.

The transport layer, which sits above the network layer, handles two protocols: the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP).

TCP is responsible for breaking up the message into packets and reassembling the message at the other end. It resends anything that is lost and makes sure that the message is put back together from the packets in the correct order.

UDP is designed for applications that do not require the packets to arrive in any specific order, or to be acknowledged. UDP is termed a connection-less protocol.

At the application layer the server provides the requested service for the client. For example, the client requests a remote login and the server provides that service with the remote login daemon. The service that is provided is the login service.

Table 1.1. TCP/IP Protocols and some of the related services

| Layer | Services |
|---|---|
| Application Layer | e.g. ftp (file transfer), telnet, smtp (Simple Mail Transfer Protocol), NFS |
| Transport Layer | TCP and UDP |
| Network Layer | IP |
| MAC Layer | Network device e.g. /dev/ and MAC address |
| Physical Layer | e.g. Ethernet, Token Ring, SLIP |

There are two models that we are going to look at. One is the TCP/IP model, the other is the OSI (Open Systems Interconnection) model. The OSI model has a total of seven layers, while we have only 5 layers in the TCP/IP model. Why do we discuss the OSI model now rather than before TCP/IP? The TCP/IP model was being used in practice well before the OSI model was devised. Thus, while there are similarities between the models, TCP/IP is the model that is used.

The layers in the OSI and TCP/IP models are identical up to the Network layer. In OSI the MAC layer is called the Link Control Layer, or the Data Link Layer.

From layer three onwards the two models are not quite the same, primarily because the TCP/IP model was created long before the OSI model.

We are going to look at the OSI model, and then see how it relates to the TCP/IP model.

The Transport Layer is responsible for how datagrams or packets are transported from one PC to another.

Above that we have the Session Layer. What is the Session Layer used for?

The Session Layer records session information, that is, what happens to a session between key-strokes. For example, if you started a Secure Shell session between two PCs, the session layer is responsible for keeping that session alive between key-strokes.

On top of the Session Layer we have the Presentation Layer, which provides the standard look and feel that is presented to the final layer, the Application Layer.

So if you are writing an application like telnet, SSH (Secure Shell) or ftp (file transfer protocol), the presentation layer is used to present the application with a standard look and feel, what they call an API (Application Programming Interface).

To re-cap, in TCP/IP we only have five layers, above the Network layer we have a Transport layer and above the Transport layer we have an Application layer.

So in fact the Session and Presentation layers are missing completely from the IP model, yet that is not critical, as IP works nonetheless.

You will hear a lot about the OSI model and networking, but the de facto standard is the IP model. So we will have the OSI model in the back of our minds but we are going to concentrate on the TCP/IP model.

So let's have an overview of how this layered structure (protocol) works.

We would potentially have two or more machines, and let's assume for now that they are connected via an Ethernet network.

If this were Token Ring it would look different, but we need not be concerned about that because at this time Ethernet has replaced almost all other physical layers.

There is still legacy support (including with Linux) for the other physical layers.

Machine A and machine B each have a NIC (Network Interface Card) and are connected by cables; they could also be connected through a switch.[1]

In the following example or explanation we intend to transfer a file from Machine A to Machine B.

Machine A

We start an FTP client and try to connect to an FTP server on Machine B.[2]

So what happens when the client requests an ftp service from the FTP server?

Well, the client sends a packet of data, e.g. a request for a file.

The packet is then sent down to the transport layer, which puts on the relevant header information and does a Cyclic Redundancy Check (CRC).

The packet is then sent to the Network layer, which adds information to the header and does a CRC.

Then to the MAC layer, which puts on some more header and CRC information.

That packet is then transmitted across the physical network to machine B (we will discuss how this actually happens later).

Machine B

From the physical layer, the request is sent to the MAC layer, where the MAC information and CRC are stripped and the packet is sent up a layer.

The next layer strips off the Network information and its CRC and sends the packet up a layer.

The Transport layer does the relevant checks and then strips the packet of its header and CRC and finally passes it on to the Application Layer, which in this case is the FTP server.

The idea behind the layer approach, is that every layer is independent of the next layer, and one could potentially strip out a layer, and put in another layer in its place and still achieve the same results.

Please realize that this process is transparent to the user: because of the layered approach, the user does not know that all of this is actually happening.
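The trip down the stack on Machine A and back up on Machine B, as described above, can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the bracketed header strings and the function names `wrap`, `unwrap`, `send_down` and `receive_up` are made up for this example, and `zlib.crc32` stands in for whatever check each real layer performs.

```python
import zlib

# Order in which the sender adds headers: transport first, MAC last.
LAYERS = ["transport", "network", "mac"]


def wrap(layer: str, payload: bytes) -> bytes:
    """Prefix the payload with a toy header and append a 4-byte CRC."""
    body = f"[{layer}]".encode() + payload
    return body + zlib.crc32(body).to_bytes(4, "big")


def unwrap(layer: str, packet: bytes) -> bytes:
    """Check the CRC, then strip the CRC and the toy header."""
    body, crc = packet[:-4], packet[-4:]
    if zlib.crc32(body).to_bytes(4, "big") != crc:
        raise ValueError(f"{layer}: CRC mismatch")
    header = f"[{layer}]".encode()
    if not body.startswith(header):
        raise ValueError(f"{layer}: unexpected header")
    return body[len(header):]


def send_down(data: bytes) -> bytes:
    """Machine A: pass application data down through every layer."""
    for layer in LAYERS:
        data = wrap(layer, data)
    return data


def receive_up(frame: bytes) -> bytes:
    """Machine B: strip the layers again in the opposite order."""
    for layer in reversed(LAYERS):
        frame = unwrap(layer, frame)
    return frame
```

Calling `receive_up(send_down(b"GET file.txt"))` hands the original request back to the application on the other side. Note that each layer only ever touches its own header, which is what makes it possible to swap one layer for another.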

The MAC layer controls the MAC address, and every Network Card must have a unique MAC address else there will be conflicts.

Routers, Switches and NIC's have MAC addresses.

A MAC address is a 12 digit hexadecimal number, for example: 00-a0-B3-F5-A6-FF. The first three pairs of digits identify the supplier of the card.

Every manufacturer of network components receives a unique MAC address prefix used to identify their cards.

One way to see the MAC address on your workstation is to use the ifconfig command: ifconfig -a | grep HWaddr.

If you have three network cards in your workstation, you should end up with three MAC addresses.
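To make the structure of a MAC address concrete, here is a small Python sketch that turns the written form into raw bytes and pulls out the manufacturer prefix. The function names `parse_mac` and `vendor_prefix` are invented for this illustration; the addresses are the two examples used in the text.

```python
def parse_mac(text: str) -> bytes:
    """Turn '00-a0-B3-F5-A6-FF' or '00:01:03:8C:FB:01' into 6 raw bytes."""
    digits = text.replace("-", "").replace(":", "")
    if len(digits) != 12:
        raise ValueError("a MAC address has exactly 12 hexadecimal digits")
    return bytes.fromhex(digits)


def vendor_prefix(text: str) -> str:
    """Return the first three bytes, the part assigned to the manufacturer."""
    return "-".join(f"{byte:02X}" for byte in parse_mac(text)[:3])
```

For the example address above, `vendor_prefix("00-a0-B3-F5-A6-FF")` gives `00-A0-B3`, and `len(parse_mac(...))` is 6: twelve hexadecimal digits are six bytes.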

For the moment we will skip the Network Layer. We will come back to it, since it is obviously a critical layer.

Now I want to talk briefly about the transport layer. In IP terms there are two types of transport: TCP (Transmission Control Protocol) and UDP (User Datagram Protocol).

- TCP

TCP is a connection orientated protocol, which means that a connection, once set up, remains set up for the duration of the communication.

For example, Telnet, FTP and SSH are connection orientated applications. Between workstation A and workstation B a channel of communication is set up.

A real world example of a connection orientated protocol is a telephone conversation. The telephone rings, you pick it up and you do a bit of handshaking with the person on the other side. As soon as the handshaking is done (in other words, "Hello, how are you?") the real conversation starts. When one person says goodbye, the connection is terminated.

Usually there is a lot more overhead and interaction involved in a Connection Orientated Protocol. Think of the phone call, you have to dial the number, wait for an answer, you have to say hello, the other person says hello so there is a lot of to-ing and fro-ing while the connection is being set up.

That contrasts with UDP where the one workstation just puts the packet on the network and is not concerned whether or not the packet reaches its destination.

- UDP

UDP is a Connectionless protocol.

An example of this would be a DNS update. Here the datagram is put on the network and no error checking is done to see whether it arrived at a destination or not.

Another example of a connectionless protocol is an SMS. You can send an SMS via your cellphone, but your phone has no responsibility to see that the message gets delivered; the message is just sent. Even the reply message sent by the service center to your phone is connectionless. There is no acknowledgment that the message has been received, and in that way it is a little more unreliable. However, smaller packet sizes and the lack of handshaking ensure that it is quick and efficient.

A further example would be an SNMP trap (a Simple Network Management Protocol trap). This is used when, for example, the power supply of a switch or a router is lost. The device will send a message (an SNMP trap) to say that it is going down; whether the message is received or not does not matter to the device sending it.

So UDP and TCP are the two different transport methods, and they are used for different things: Telnet and SSH use TCP, for example, but a DNS update uses UDP.

Let's move on to the Application Layer. Essentially every application is based upon either the TCP or the UDP protocol. Telnet, for example, is based upon a connection orientated protocol (TCP), while DNS updates, as we mentioned, use a connectionless protocol (UDP).

Some applications can use one or the other of these protocols, but for the most part if we take an application like FTP, the FTP client will use the TCP protocol, because it needs to set up a connection between the Source and the destination. The requirements of the application dictate the protocol that will be used.
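The difference between the two transports shows up directly in how a program uses them. The sketch below, written with Python's standard `socket` module, sends one message each way over the loopback address 127.0.0.1. The function names `udp_send_one` and `tcp_send_one` are made up for this illustration, and both ends run in the same process purely to keep the example self-contained.

```python
import socket


def udp_send_one(message: bytes) -> bytes:
    """Send a single datagram over loopback; no connection is set up first."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))      # port 0: let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # The sender just puts the datagram on the wire and moves on.
        sender.sendto(message, receiver.getsockname())
        data, _addr = receiver.recvfrom(1024)
        return data
    finally:
        sender.close()
        receiver.close()


def tcp_send_one(message: bytes) -> bytes:
    """Set up a connection over loopback, send, then close it again."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    listener.settimeout(2.0)
    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client.settimeout(2.0)
    try:
        client.connect(listener.getsockname())   # the handshake happens here
        server_side, _addr = listener.accept()
        server_side.settimeout(2.0)
        try:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)      # "goodbye": no more data
            chunks = []
            while True:
                chunk = server_side.recv(1024)
                if not chunk:                    # empty read: peer has finished
                    break
                chunks.append(chunk)
            return b"".join(chunks)
        finally:
            server_side.close()
    finally:
        client.close()
        listener.close()
```

Notice how much more ceremony the TCP version needs (listen, connect, accept, shutdown) compared with the single `sendto` call for UDP. That is the telephone call versus the SMS.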

When we discuss the Network Layer we also need to understand and discuss the IP addresses, or Internet Protocol addresses.

We have talked about MAC addresses and now we have yet another address. In talking about these two addresses, we will see the difference between the MAC layer and the Network Layer.

| | MAC layer | Network Layer |
|---|---|---|
| Address | 00:01:03:8C:FB:01 | 192.168.0.21 |
| Address type | Physical address | Logical address |
| Layer | Present at layer 2 of the IP model | Present at layer 3 of the IP model |
| Uniqueness | All MAC addresses should be unique on a single network | All IP addresses should be unique on a single network. |
| Assignment | Manufacturer assigns the MAC address in the factory | Network administrator assigns the IP address. |

The Network layer identifier is not decided by a manufacturer; it is usually decided by the network administrator. The identifier does not look like a MAC address either, as it has four numbers separated by full-stops, in the form 1.2.3.4. A typical IP address would be 192.168.14.1.

There are two versions of the IP protocol: IP version 4 and IP version 6. IP version 6 is much newer than IP version 4 and is more complicated. We will be working with IP version 4, whose address format is four numbers separated by full-stops.

If two devices want to talk to each other they each would need a unique IP address to deliver information.

If we look at the model network we used before, and set the IP addresses as follows:

Workstation A 196.6.23.14

Workstation B 196.6.23.17

These addresses uniquely identify the workstations.

Let's look at the IP address in more detail. It is split into two sections: the first part is the network part of the address, and the second part is the host part.

We are going to delve a bit deeper into how this IP number is made up in order to understand these different classes.

Each number is made up of 8 bits. If we use the IP address above, then we have 4 numbers, each number comprising 8 bits - giving us a total of 32 bits that make up the IP address. If we make all the bits 1 (binary), that number would be 255 (11111111), but making it 00000011, the number would be 3.

Now if we changed these bits and made only the left-hand one a zero, that would be 127 (01111111); with only the left-hand bit set, it would be 128 (10000000). A byte of data has a "most significant bit" (MSB), the left-hand bit in the examples above, and a "least significant bit" (LSB), the right-hand bit in the examples above. I am going to work with the MSB as always being on the left hand side.

Our bit configuration for 196 is 11000100 that is 128 + 64 + 4 = 196
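If you want to check these conversions without doing the sums by hand, a few lines of Python will do it. The helper names `octet_to_bits`, `bits_to_octet` and `place_values` are invented for this sketch.

```python
def octet_to_bits(value: int) -> str:
    """Write one number of an IP address as 8 bits, MSB on the left."""
    if not 0 <= value <= 255:
        raise ValueError("each number in an IP address must fit in 8 bits")
    return format(value, "08b")


def bits_to_octet(bits: str) -> int:
    """Turn a string of 0s and 1s back into a decimal number."""
    return int(bits, 2)


def place_values(value: int) -> list:
    """List the powers of two that add up to value, largest first."""
    return [1 << shift for shift in range(7, -1, -1) if value & (1 << shift)]
```

Here `octet_to_bits(196)` gives `11000100` and `place_values(196)` gives `[128, 64, 4]`, which matches the sum above.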

On the Internet we have three classes of IP addresses, class A, class B and Class C.

There are different classes of address groups. A class C group would include any IP address that starts with 110 (where the three most significant bits are 110). This would mean that 11000000 is a class C address; this address works out to be 192.

If we take the address 196.6.23.14, this would also be a class C address because the first three bits are 110.

Right now, do not worry about what comes after the first three bits; we do not need them to determine the class of the address.

Class C IP addresses occur in the range from 192 (11000000) up to, but not including, 224 (11100000), where the first three bits are all 1's. So class C addresses are in the range:

192 - 223

For a class B address the first two significant bits must be 10 (this is in binary not the decimal ten). If we took an address of 10000000 which would be 128, this would be a class B address.

Using an IP address starting with 132, would that be a class C or a class B address? Well, 132 = 128 + 4. The number 132 translated into binary would be 10000100, and this matches our criterion of the first two bits being 10: a class B address.

Class B IP addresses occur in the range from 128 (10000000) to 191 (10111111).

If I gave you an IP address 141.15.23.64, would that be a class B or a class C address? Well, it falls in the range 128 to 191, meaning that the first two bits of the IP address must be 10, and if we change 141 to a binary number (10001101) we see that it does begin with 10. So it must be a class B address.

If I gave you an address of 206.15.23.10, would that be a class B or a class C address? Again, it falls in the range of 192 to 223, so it must be a class C address.

Finally, an address of 191.15.23.10 falls in the range of 128 to 191 (191 is inclusive), so it would be a class B address. So that covers class B addresses. Finally, we look at class A addresses.

Class B addresses are in the range:

128 - 191

Class A addresses are quite simple: the MSB must be a zero, which means the range is from 0 (where all the bits are zero) to 127 (01111111, where all the remaining bits are one). So as long as the first bit is zero, you have a class A address. Realistically it is from 1 to 127 (inclusive).

If I gave you an IP address of 18.23.15.10 you could tell me immediately that it is a class A address, since it falls between 1 and 127. Transforming 18 to binary gives 00010010, which satisfies the class A rule that the MSB is 0.
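The class rules above are just tests on the leading bits of the first number, so they are easy to express in code. The following is a minimal sketch; the function name `address_class` is made up here, and anything that starts with 111 is simply reported as "other", since this course only deals with classes A, B and C.

```python
def address_class(ip: str) -> str:
    """Classify a dotted IPv4 address by the leading bits of its first byte."""
    first = int(ip.split(".")[0])
    if not 0 <= first <= 255:
        raise ValueError("first number must be between 0 and 255")
    if first >> 7 == 0b0:       # 0xxxxxxx
        return "A"
    if first >> 6 == 0b10:      # 10xxxxxx
        return "B"
    if first >> 5 == 0b110:     # 110xxxxx
        return "C"
    return "other"              # 111xxxxx, not covered in this course
```

Trying it on the examples from the text: `address_class("18.23.15.10")` is `A`, `address_class("141.15.23.64")` and `address_class("191.15.23.10")` are `B`, and `address_class("206.15.23.10")` is `C`.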

So we have looked at the most significant bits, but what about the rest? Well, we can translate these too. Using the address 196.6.23.14 we would end up with a bit pattern as follows:

11000100 . 00000110 . 00010111 . 00001110

Right, so if we look at this address, we see that it begins with 110, meaning that it is a class C address. A class C address generally has the first 24 bits as the network portion and the remaining 8 bits as the host portion. In other words, the first three bytes are the network portion, and the remaining byte is the host portion.

The network portion essentially uses the first three bytes (this has to do with the subnet mask, which we'll talk about shortly). Therefore, from our original IP address, the network portion would be 196.6.23 and the host on this network would be host 14.

Now if you take a look at this, you will immediately see that we could only have up to 256 hosts on this network. Why is that? It is because the host bits can range from where they are all zeros (00000000) to where they are all ones (11111111).

A couple of things to note here. For historical reasons, address 0 and address 255 are out of bounds, and we can't use them for host addresses. Address 0 identifies the network itself and address 255 is the broadcast address. This means that out of the possible 256 addresses on the network 196.6.23 we actually only have a total of 254 that can be assigned to hosts.

That is fair enough, but what if you want to have more than 254 hosts on this network?

If you look at a class A network address, for example, it is defined by the first bit of the first byte. The first byte makes up the network portion of the address, while the remaining 3 bytes make up the host portion of the address. With this in mind, an IP address of 10.20.30.40 would be the host 20.30.40 on the network 10.

If I gave you an IP address of 152.111.16.10, using our rules, 152 falls into class B. Class B has the first two bytes in the network portion and the remaining two bytes in the host portion. This IP address would therefore be host 16.10 on network 152.111.
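Splitting an address into its network and host portions by class can be sketched like this. The name `split_classful` is made up for the example, and it only applies the standard class boundaries; it knows nothing about netmasks.

```python
def split_classful(ip: str):
    """Return (network portion, host portion) using the standard class split."""
    octets = ip.split(".")
    if len(octets) != 4:
        raise ValueError("expected four numbers separated by full-stops")
    first = int(octets[0])
    if first < 128:
        network_bytes = 1       # class A
    elif first < 192:
        network_bytes = 2       # class B
    elif first < 224:
        network_bytes = 3       # class C
    else:
        raise ValueError("not a class A, B or C address")
    return ".".join(octets[:network_bytes]), ".".join(octets[network_bytes:])
```

With the addresses used above, `split_classful("10.20.30.40")` returns `("10", "20.30.40")` and `split_classful("152.111.16.10")` returns `("152.111", "16.10")`.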

In class A the MSB has to be 0, which means the first byte ranges from 1 to 127. The first byte represents the network portion, and the remaining three bytes represent the host on the network. From that we can see that with class A you can only have 128 (2^7) networks, but you can have a huge number of hosts on every network. How many? You can have a total of 2^24 hosts on each of the 128 networks.

In class B the first two bits must be 10 and the range is from 128 to 191. Here the first two bytes represent the network and the remaining two bytes represent the host. Because two of the 16 network bits are fixed, class B gives us 2^14 networks, and each of those networks can have 2^16 hosts.

In class C the first three bits are 110, which means the range is from 192 to 223. The first three bytes are the network portion, and the remaining byte is the host portion. Because three of the 24 network bits are fixed, class C gives us 2^21 networks with 2^8 hosts per network.

Since we are only working with a total of 4 bytes, as the number of hosts increases, the number of networks decreases, and vice versa.
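The trade-off between networks and hosts is plain arithmetic on the 32 bits, as this small sketch shows. It counts only the network bits that are free to vary, that is, it leaves out the leading bits that mark the class. The table `CLASS_BITS` and the function `class_capacity` are names invented for the illustration, and the host counts are raw powers of two, before the network and broadcast addresses are set aside.

```python
# For each class: (leading bits that mark the class, total bits in the network portion)
CLASS_BITS = {"A": (1, 8), "B": (2, 16), "C": (3, 24)}


def class_capacity(name: str):
    """Return (number of networks, addresses per network) for a class."""
    fixed_bits, network_bits = CLASS_BITS[name]
    networks = 2 ** (network_bits - fixed_bits)   # only the free network bits vary
    hosts = 2 ** (32 - network_bits)              # whatever is left of the 32 bits
    return networks, hosts
```

Running it gives `(128, 16777216)` for class A, `(16384, 65536)` for class B and `(2097152, 256)` for class C: every bit moved from the host side to the network side doubles one number and halves the other.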

20 years ago any one of these options would have given most companies enough addresses to work with, but that of course was before the Internet took off.

Now every machine that connects to the Internet needs an IP address. This was do-able 12 years ago but now that more and more devices are Internet ready, and with the growth of Linux and embedded Linux devices, we will see more and more Intelligent devices such as:

Cell phones that connect via GPRS must have an IP address.

Already they are advertising a fridge that connects to the Internet, later on there will be stoves, dishwashers, toasters etc. that will connect to the Internet and each of these devices will need a unique IP address.

A lot of people are now building "Intelligent houses" where the intercom is connected to the network, where the network accommodates a lot of CCTV cameras or the sprinkler system, and each of these would need an IP address.

As you can see very quickly we will exhaust the number of available IP addresses.

There are a couple of ways in which the authorities who hand out valid IP addresses (InterNIC or IANA) decided to solve this problem, as they couldn't go on handing out networks in the class B range: there were not enough network addresses to go around. What they did was to come up with a means of sub-netting a range of Internet addresses. Sub-netting is a means of chopping up an IP range. In essence, sub-netting involves moving the boundary between the host and network bits, and in this way making the ranges change accordingly.

Without understanding sub-netting you will never be able to tell which network a particular host is on.

I made the assumption a couple of minutes ago that on a class B network half of the bits make up the network portion and the other half make up the host portion. That assumes that a netmask of 255.255.0.0 is used.

Let's take a further look at the netmask. The above netmask translates to:

11111111. 11111111. 00000000. 00000000

Now what we are going to do is to AND the bits from the subnet mask with the bits from the original address. If you do not know about ANDing bits together, I would suggest you go look at the Shell Scripting Course.

ANDing 1 and 1 together will give you a "1"; anything else would equal "0". Let's look at an example:

11000100. 00000110. 00010111. 00001110 (196.6.23.14)

11111111. 11111111. 00000000. 00000000 (255.255.0.0)

----------------------------------------------------------

11000100. 00000110. 00000000. 00000000 (and result)

All the bits that were "1" in the network portion seem to just "drop through" as ones in the result, and what we end up with is 196.6.0.0

This shows us immediately that the first two bytes are the network portion and the last two bytes are the host portion.

We have a host 23.14 on network 196.6; applying the mask tells us what our network is.
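The masking step above is a single bitwise AND once the address and the mask are each packed into one 32 bit number. Here is a minimal sketch of that; the helper names `to_int`, `to_dotted` and `network_of` are invented for the example.

```python
def to_int(dotted: str) -> int:
    """Pack four dotted numbers into a single 32 bit integer."""
    a, b, c, d = (int(part) for part in dotted.split("."))
    return (a << 24) | (b << 16) | (c << 8) | d


def to_dotted(value: int) -> str:
    """Unpack a 32 bit integer back into dotted notation."""
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


def network_of(ip: str, netmask: str) -> str:
    """AND the address with the netmask: only bits under a 1 in the mask survive."""
    return to_dotted(to_int(ip) & to_int(netmask))
```

`network_of("196.6.23.14", "255.255.0.0")` returns `196.6.0.0`, the same result as the bit-by-bit working above.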

Now, you might say, that is fine and dandy, but how does that help us?

If I gave you the address 196.6.15.3 is that on the same network as 196.6.23.14?

The answer has to be yes.

If we mask 196.6.15.3 with 255.255.0.0 we end up with the network of 196.6 so this particular IP address is on the same network as 196.6.23.14.

If I gave you 196.7.15.3 would that be on the same network as 196.6.15.3?

Clearly not, because when you AND this with the subnet mask, you would get the network 196.7, and host 15.3 on network 196.6 is not the same as host 15.3 on network 196.7.
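Python's standard `ipaddress` module can do the same comparison for us. The sketch below masks both addresses with the same netmask and checks whether the resulting networks match; the function name `same_network` is made up for the illustration.

```python
import ipaddress


def same_network(ip_a: str, ip_b: str, netmask: str) -> bool:
    """True if both addresses land on the same network under this netmask."""
    net_a = ipaddress.ip_interface(f"{ip_a}/{netmask}").network
    net_b = ipaddress.ip_interface(f"{ip_b}/{netmask}").network
    return net_a == net_b
```

With a netmask of 255.255.0.0, `same_network("196.6.15.3", "196.6.23.14", "255.255.0.0")` is `True`, while `same_network("196.7.15.3", "196.6.15.3", "255.255.0.0")` is `False`, matching the two answers above.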

OK, fair enough what happens when instead of applying a mask of 255.255.0.0 I apply a mask of 255.255.252.0?

Well, now things become more interesting. Compared with the standard class C mask of 255.255.255.0, what I am doing is stealing two bits from the network portion and giving them to the host portion. This means I am reducing the number of networks and increasing the number of hosts available on each.

What is 255.255.252.0 in binary? It is:

11111111. 11111111. 11111100. 00000000 (255.255.252.0)

Remember that I have given you the same IP address of 196.6.23.14, but this time I have given you a netmask of 255.255.252.0. Let's have a look at the result.

11000100. 00000110. 00010111. 00001110 (196.6.23.14)

11111111. 11111111. 11111100. 00000000 (255.255.252.0)

--------------------------------------------------------

11000100. 00000110. 00010100. 00000000 (result of AND) (196.6.20.0)

This works out to be 196.6.20.0, so now our network is 196.6.20 and our hosts can range from those where all the host bits are 0, to those where all the host bits are 1.

Therefore the hosts range from host 0 on network 196.6.20 to host 255 on network 196.6.23. Now, the host 196.6.21.145 is on the same network as host 196.6.23.9. Given that every address assignment is made up of an IP address plus a netmask, we should never talk about one without talking about the other.
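To see the whole range that a netmask carves out, we can again lean on the standard `ipaddress` module. This is a sketch only; `subnet_range` and `in_subnet` are names made up for the example.

```python
import ipaddress


def subnet_range(ip: str, netmask: str):
    """Return (first address, last address, address count) of the host's subnet."""
    # strict=False lets us pass a host address rather than the network address.
    net = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    return str(net.network_address), str(net.broadcast_address), net.num_addresses


def in_subnet(candidate: str, ip: str, netmask: str) -> bool:
    """True if candidate falls inside the subnet that ip/netmask belongs to."""
    net = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    return ipaddress.ip_address(candidate) in net
```

`subnet_range("196.6.23.14", "255.255.252.0")` returns `("196.6.20.0", "196.6.23.255", 1024)`. The two bits taken from the network portion give four times the 256 addresses of a standard class C network.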

If I gave you the IP address of 162.15.45.155 I will almost invariably give you a netmask of 255.255.0.0, if I want to be correct, because this is a standard Class B network address and this is the standard Class B Netmask.

Again, if I gave you the address 14.186.99.203 then I would almost invariably give you a netmask of 255.0.0.0, because this is the standard netmask for class A networks.

Lastly if I gave you the address 206.19.193.254 then I would give you a Netmask of 255.255.255.0 since this is the standard Class C Netmask.

Type:

ifconfig eth0 [enter]

If you have assigned that IP address to the particular NIC you would see the IP address and a Netmask, and the one should never be mentioned without the other, because the Netmask determines which network this particular address is on. It determines what the network and the host parts are.

We will talk more about networks later on, when we talk about routing.

So if we return to our five layer stack, you have the physical layer at the bottom followed by the MAC, the network (commonly called the IP), the transport which is usually connection orientated (TCP) or connectionless (UDP) and finally the application layer.

We have now had a look at the IP layer and note that every single device on the Internet needs a unique IP address in order to communicate with the other device(s).

Having now understood IP and the fact that everything needs an IP address, we can talk briefly about how information is transferred over the Internet.

What happens when workstation A wants to talk to workstation B?

They have the following IP addresses: Workstation A is 196.6.23.14 and Workstation B is 196.6.23.24.

What Workstation A does is a broadcast. In other words, it broadcasts to every other machine on the network, using what is called ARP (Address Resolution Protocol).

An ARP request is basically a broadcast over the network saying: "I want to talk to the IP address of Workstation B, but before I can reach that IP address I need to know the MAC address of Workstation B."

Every workstation on the network receives this request, and the workstation that owns the requested IP address responds by giving up its MAC address. Workstation A uses these responses to build an ARP table.

An ARP table will hold the MAC addresses, and hopefully the matching IP addresses, of the machines on the network that Workstation A has learned about.
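The idea of an ARP table can be modelled as a simple lookup that is filled in on demand. The following is a toy simulation, not real networking code: the class `ToyLan`, the function `resolve` and the pairing of MAC addresses with workstations are all invented for the illustration, and in this toy version only the owner of the requested IP address answers the broadcast.

```python
class ToyLan:
    """A pretend local network: a mapping from IP address to MAC address."""

    def __init__(self, hosts):
        self.hosts = dict(hosts)
        self.broadcasts = 0          # how many ARP requests went out

    def arp_request(self, wanted_ip):
        """Broadcast 'who has wanted_ip?'; the owner, if any, replies with its MAC."""
        self.broadcasts += 1
        for ip, mac in self.hosts.items():   # every host sees the request
            if ip == wanted_ip:              # only the owner answers
                return mac
        return None


def resolve(lan, arp_table, ip):
    """Look in the ARP table first; broadcast only if the entry is missing."""
    if ip not in arp_table:
        mac = lan.arp_request(ip)
        if mac is None:
            raise LookupError(f"no host answered for {ip}")
        arp_table[ip] = mac
    return arp_table[ip]
```

The first call to `resolve` for Workstation B causes one broadcast and stores the answer; a second call for the same address is answered straight from the table without touching the network.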

Testing whether two hosts on a network are alive and can see one another is a fundamental network test. This is done using the Packet Internet Groper command (ping).

Ping's job is to send an echo request and await an echo reply from the remote host. Thus two workstations, A and B, on the same network can both send and receive echo packets. If workstation A sends an echo request to workstation B but does not get a reply, it could mean the remote host (workstation B) did not receive the request. Pinging is so fundamental to the troubleshooting of networks that we need a whole section to discuss it. That section appears later. Now, however, we can use ping to broadcast to each workstation in order to fill up our ARP table. Remember that each time a broadcast request is transmitted over the network, all hosts on the local network will respond to the broadcast. The overall result is that each workstation will have a complete list of hosts on their local network.

ping -b 196.6.23.255 [enter]

You have to be root to use this command.

Note that we ping the broadcast address. If you are not using this IP address range, use ifconfig to determine what your broadcast address is.

What this does is to ping every workstation, and every workstation that replies will have to reply with its MAC and IP address. Once you have run this command you can type:

arp -a [enter]


This would show all the information in the ARP table.
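If you would rather work out a broadcast address than read it from ifconfig, the arithmetic is simple: keep the network bits and set every host bit to 1. Here is a minimal sketch, assuming the workstations use the Class C netmask 255.255.255.0; the function name broadcast_address is an invention for this example.

```python
def broadcast_address(ip, netmask):
    """Set every host bit of ip to 1, using the netmask to find the host bits."""
    ip_octets = [int(part) for part in ip.split(".")]
    mask_octets = [int(part) for part in netmask.split(".")]
    # Host bits are where the mask is 0, so OR each octet with the inverted mask.
    result = [i | (~m & 0xFF) for i, m in zip(ip_octets, mask_octets)]
    return ".".join(str(octet) for octet in result)


print(broadcast_address("196.6.23.14", "255.255.255.0"))  # 196.6.23.255
```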

Once Workstation A has built up an ARP table that includes workstation B's MAC address as well as workstation B's IP address, Workstation A can start to communicate with Workstation B. They may need to communicate over a connection-oriented protocol like TCP (for something like the 'telnet' application) or perhaps using a connectionless protocol like UDP (for something like DNS lookups).

ARP resembles a connectionless service such as UDP, because it simply broadcasts a request on the network and expects a device to reply. It is not actually carried over UDP, though: ARP messages travel directly in link-layer frames. ARP does not check whether the workstations on the network received the request. If you sniff your network (we will talk about that later) you will see these ARP requests being sent out continually by hosts on your network.

The explanation above assumes that everybody is on the same network. We know this is not the case, so we also need to establish the difference between layer two and layer three networks.

Essentially, at Layer Two workstations communicate only at the MAC address level. Therefore, as long as two machines are physically connected to the same local area network (LAN), they can communicate.

Networks were designed to connect computers together. In the simplest case we started off with a single network and put some machines on it.

These machines were able to communicate with each other without any interference, but we then found that we needed a second network. It might be on another floor in the same building or in another building. The machines on the second network could talk to one another, but they could not talk to machines on the original network.

Routers were developed to deal with this problem. Their job is to route packets via different networks. You could think of the router as a traffic policeman on a busy intersection. When you come along road A and you want to go to road C the traffic police(wo)man will direct you down road C. She (traffic police(wo)man) stands in the middle and when she sees you coming along with a big sign on your windscreen saying you want to go to road C, she immediately stops other traffic and directs you to your destination. Routers do a similar job - only with packets rather than cars.

Suppose now that Workstation A wants to talk to Workstation E, and that these are on completely separate networks, not directly connected to one another. An example might be doing an ftp to ftp.is.co.za. In all probability, your host will not be directly connected to the host ftp.is.co.za.

Workstation A looks for workstation E in its routing table, hoping to find a host-address entry for this host. However, this host is not in workstation A's routing table.

Next the routing table is examined for a match of networks with the destination network. Clearly, if there is an entry here for this network, it would mean that the network would be directly connected.

But alas, there is no entry, so the network containing workstation E is not directly connected. Finally, the routing table is consulted for a default gateway. In this case, the default gateway indicates a host on your directly connected network that acts as the "go-between". When all else fails, your packets are sent to the default gateway. Of course, your host may not know the MAC address of the gateway and will thus have to send an ARP broadcast to locate the MAC address of the default gateway.
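The three-step lookup just described (host entry, then directly connected network, then default gateway) can be sketched as follows. This is a simplified model under stated assumptions, not how the kernel implements it: the function next_hop, the table contents and the gateway address 196.6.23.1 are all hypothetical.

```python
import ipaddress


def next_hop(destination, host_routes, network_routes, default_gateway):
    """Pick where to send a packet, in the order described in the text."""
    dest = ipaddress.ip_address(destination)
    # 1. An exact host-address entry wins.
    if destination in host_routes:
        return host_routes[destination]
    # 2. Otherwise look for a directly connected network containing dest.
    for network, interface in network_routes.items():
        if dest in ipaddress.ip_network(network):
            return "direct via " + interface
    # 3. When all else fails, hand the packet to the default gateway.
    return "gateway " + default_gateway


# Hypothetical table for Workstation A (addresses are assumptions).
network_routes = {"196.6.23.0/24": "eth0"}
print(next_hop("196.6.23.24", {}, network_routes, "196.6.23.1"))
print(next_hop("147.63.15.9", {}, network_routes, "196.6.23.1"))
```

The first lookup finds a directly connected network; the second falls through to the default gateway, just as the packets for workstation E do.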

Now when we talk about a network here, we are not talking about the MAC layer; we are talking about the Network Layer or the IP Layer.

You may have a 196.6.23.x network (netmask: 255.255.255.0) as well as a 147.63.x.x network (netmask: 255.255.0.0).

These are two completely separate networks, one Class C and the other Class B, and the router is able to route packets between the Class B network of 147 and the Class C network of 196. The router's job is to direct packets.

Now you can imagine if everybody pulled out a loud hailer and started broadcasting it would become a pretty noisy place quite quickly. How do we stop that? Well in networking terms there are not a lot of ways we can stop that.

There needs to be a way to stop these broadcasts from passing from one network to the next. The way that this happens is to force the router to block all broadcasts. So if the router hears a broadcast coming through, it will immediately drop that packet and not allow the transmission of the packet across to the other network.

You can imagine that it would become very noisy if every time somebody sent out a broadcast it was allowed to be transmitted to all networks. How often in the real world does somebody pull out a loud hailer? Well generally at election time when they want to gather some support.

In networking terms, computers broadcast every couple of seconds: they start shouting about who they are, who else is on the network, and so on. As you can imagine, this causes a lot of traffic on the network for no apparent reason. So routers restrict the broadcast domain to the local network.

Broadcasts can only happen on the MAC layer, and any broadcast that tries to go across the router will be dropped. One of the things you may want to broadcast across a router (and you can configure a router to allow certain broadcasts) is DHCP (Dynamic Host Configuration Protocol). However, we will talk about this later.

To summarize, routers are responsible for routing Internet Protocol traffic at the Network Layer, from one network to another. This contrasts with switches and bridges, which work on the MAC layer and thus have no way to restrict broadcasts.

If a workstation on Network One starts broadcasting, the workstations on network two will be able to receive and respond to the request. Bridged and switched networks are termed "flat" networks and there is little barrier from hosts connected on one switch to another.

OK so we have Routers that allow networks to be connected, and we have switches that create flat networks where you cannot create separate networks on a switch.

We will configure our Linux boxes later on to act as routers. This is an area in which Linux has gained acceptance: using your Linux box as a router between yourself and the Internet.

If you have three PCs but just one modem, Linux allows modem sharing and has done so since 1993. Windows now also allows modem sharing (ICS), which essentially allows the system to act as a router.

A LAN is a Local Area Network and a WAN is a Wide Area Network.

These barriers seem to be crumbling as we speak. Traditionally LANs were restricted to a building. A typical example of this is a building that has a number of floors all connected together. The way these floors are connected is via a router in the basement. Thus, each floor has a link to the basement, where a large router connects the floors together.

Essentially even though these different networks are routed they are still considered to be Local Area Networks, because they are limited to a building. The minute you go from a single building to connecting two buildings, (you may have a wireless link between the two buildings) you are creating a Wide Area Network between these two buildings.

WANs are generally considered to be those networks running over low-bandwidth, expensive links. Consider the price per packet of transmitting data over a WAN: it would be significantly higher than that of a LAN.

If you look at the speed you get over a WAN versus that of a LAN, you will see that a LAN is significantly faster than a WAN, although this gap seems to be closing too.

Currently, for example, on a WAN over a wireless link you can get up to 54 megabits per second, whereas on a LAN you can get up to 1000 megabits per second.

In this Networking course we will look mostly at LANs, with one exception: connecting the Linux system to an ISP. The minute you connect your Linux system to an ISP you create a WAN.

With our basic knowledge of TCP/IP we now need to put an IP address/netmask pair onto our network interface card.

Presuming we have 2 workstations; on Workstation A we are going to put an IP address of 192.168.0.1 and on Workstation B an IP address of 192.168.0.2

The subnetmask is going to be a standard Class C subnetmask (255.255.255.0).

What that means is that both these workstations are on the same network (you can do the calculations yourself).
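If you want to check the calculation, Python's standard ipaddress module will do it for you. This is only a sketch for verifying the example above; the variable names are arbitrary.

```python
import ipaddress

netmask = "255.255.255.0"
# ip_interface pairs an address with its netmask; .network strips the host bits.
net_a = ipaddress.ip_interface("192.168.0.1/" + netmask).network
net_b = ipaddress.ip_interface("192.168.0.2/" + netmask).network

print(net_a)           # 192.168.0.0/24
print(net_a == net_b)  # True
```

Both addresses reduce to the same network once the host bits are masked off, which is exactly what "on the same network" means.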

Now the question is - how do you configure your IP address?

Unlike other operating systems you can configure a lot of your Linux operating system on the fly (you will probably have come across that as you worked through these courses), but configuring on the fly has some advantages and some distinct disadvantages.

One of the advantages of being able to configure Linux on the fly is that you can change things without having to go through a reboot, which makes it quick and easy, cheap and dirty. The problem is that if you configure on the fly you can often end up with a system that works fine until it is rebooted, at which point things are not the way you thought you had set them up.

We could, for instance run "ifconfig eth0 192.168.0.1 netmask 255.255.255.0" which would configure our Ethernet 0 interface.

Note

If a netmask other than the default one for this class is used, the ifconfig command MUST specify the broadcast address too. For example if we choose a subnet mask of 255.255.252.0, then we should specify the ifconfig command as follows:[3]

ifconfig eth0 192.168.0.1 netmask 255.255.252.0 broadcast 192.168.3.255

Run "info" on ifconfig to learn more about this command.

Basically the ifconfig command sets an IP address onto your Ethernet 0 interface.

Once that Ethernet 0 interface has an IP address, run "ifconfig eth0" and you should see the following:

The hardware address, which is the MAC address we discussed earlier in the course.

An IP address, which should be 192.168.0.1.

The netmask given in the command, which as we stipulated above is the standard Class C netmask.

A broadcast address. We stated earlier that the broadcast address is the one where all the host bits are 1, so our broadcast address should now be 192.168.0.255.

And finally we should see some stats on packets transmitted and received.

In your test network you are going to have to configure one IP address per workstation, and in the example just done we have already configured Workstation A.

On Workstation B you are going to run a similar command, but instead of 192.168.0.1 it is going to be 192.168.0.2.

Testing the set-up with ping

The first thing you're going to want to do now is see if these two machines respond to one another, and the best way of doing that is to use the Packet Internet Groper, or ping, command.

Assuming that you are sitting at Workstation A you might want to ping 192.168.0.2, which is Workstation B.

You may need to understand a little bit about the ping command in order to understand what you are testing. When you ping something it sends a 64 byte packet (echo request) to the other machine that you are setting up, in our case, Workstation B. As soon as Workstation B receives the packet, it replies with an echo reply by sending a 64 byte packet back to workstation A.

When we do a ping we are sending a packet called an ICMP packet; ICMP stands for Internet Control Message Protocol.

ICMP originates from within the network layer.

Look at our two machines and the network layer and the MAC layer. The packet originates at the network layer and proceeds down the stack, as we have seen, onto the physical network. It then reaches its destination and moves up the protocol stack, but it does not go all the way up to the application layer. It only goes to the ICMP layer, which is a sub-layer within the network layer.

When the packet is received by Workstation B, a reply is sent back to Workstation A in the same manner through the stack.

To repeat the process: when you ping something you send a 64 byte ICMP packet from the network layer to the MAC layer and on to the physical layer, which carries it to Workstation B. The packet then goes up from the physical layer to the MAC layer, and from the MAC layer to the network layer. When the network layer receives this packet, it replies.
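To make the 64 byte figure concrete, here is a sketch that builds (but does not send) an ICMP echo request in Python. It assumes the usual layout of an 8 byte ICMP header followed by 56 bytes of data, which is what Linux ping uses by default; the function names and the identifier and sequence values are chosen for illustration only. Actually sending the packet would need a raw socket and root privileges, so that part is left out.

```python
import struct


def internet_checksum(data):
    """16-bit one's-complement sum used by ICMP (RFC 1071 style)."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:  # fold any carry back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo_request(identifier, sequence, payload):
    """Build an ICMP echo request: type 8, code 0, then checksum, id, seq."""
    header = struct.pack("!BBHHH", 8, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)
    header = struct.pack("!BBHHH", 8, 0, checksum, identifier, sequence)
    return header + payload


packet = build_echo_request(identifier=1, sequence=1, payload=bytes(56))
print(len(packet))                # 64: 8 byte header + 56 data bytes
print(internet_checksum(packet))  # 0: a correctly checksummed packet sums to zero
```

The receiver runs the same checksum over the whole packet; a result of zero tells it the packet arrived undamaged.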

Ping is a good way of testing whether you get connectivity between two workstations but unfortunately it is not necessarily an end to end service indicator. The reason for this is that an ICMP packet never reaches the application layer, so although you can ping two machines it doesn't mean to say that you can send email between those two machines.

At some higher layer (perhaps at the transport layer or the application layer) the protocol could actually break down and therefore there is no end-to-end connectivity from an application perspective.

So ping is useful and you can use it for a lot of different tests but not necessarily an end-to-end service test and in modern computer terms this is really what people are interested in.

OK, so, once you've got your machines connected and you can see them and you can ping between one workstation and another, you should see that you get a reply for every packet that is sent. We will investigate the ping command in a little more detail shortly, because it is certainly your first port of call when trying to fix problems on a network.

You will hear, in your walk through this world of networking and TCP/IP, people referring to packets and frames, octets and datagrams. What is what? In the strictest sense packets and frames are different, and datagrams are different again. However, many people use these terms interchangeably. Let's clear up the definitions here.

When a unit of data, beginning at the application layer (top layer), is transmitted to lower layers, headers and trailers are added to it. Once the unit of data reaches the IP layer and source and destination IP addresses are added to it, it is known as an IP datagram. The unit of data, feeling a little bloated from having all this extra baggage, is then sent to the link layer where source and destination MAC addresses are prepended to it. Now it is called a frame, and more accurately an Ethernet frame (if the physical layer is Ethernet). To be precise, the unit of data passed between the IP layer and the network interface card is really a packet of data. Thus, a packet can be an entire IP datagram or perhaps a fragment of an IP datagram.

UDP units of data, being connectionless (and thus not really too concerned whether the receiver actually got the data or not), are also referred to as datagrams.

This leaves octets. Octets are units of 8 bits (also known as bytes). The maximum size of a standard Ethernet frame is 1518 octets, or 1518 bytes, of which at most 1500 octets are payload; this payload limit is the MTU (maximum transmission unit). Any datagram larger than the MTU must be split, or fragmented. As you might expect, fragmentation causes delay, so it is good to keep your datagrams within the MTU size.
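A quick calculation shows how fragmentation adds up. The sketch below assumes IPv4 with a 20 byte header (no options) and the common Ethernet MTU of 1500 bytes; both values, and the function name, are assumptions made for this example.

```python
import math

IP_HEADER = 20  # bytes, assuming an IPv4 header with no options
MTU = 1500      # bytes, the usual Ethernet payload limit


def fragments_needed(datagram_length, mtu=MTU):
    """Count the IPv4 fragments needed for a datagram of the given total length."""
    if datagram_length <= mtu:
        return 1  # fits in one frame, no fragmentation
    payload = datagram_length - IP_HEADER
    # Every fragment but the last carries a multiple of 8 payload bytes.
    per_fragment = (mtu - IP_HEADER) // 8 * 8
    return math.ceil(payload / per_fragment)


print(fragments_needed(1500))  # 1
print(fragments_needed(4000))  # 3
```

A 4000 byte datagram therefore costs three frames, each with its own headers, where a 1500 byte datagram costs one.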

ifconfig -a


You would see a minimum of two interfaces. We expect to see Ethernet 0, because we have just configured an IP address on it. Additionally, we will see an interface called "lo", which is the loop back interface. Every network device, be it a switch, router, server or hub, will always have a loop back device associated with it.

And this loop back device will always have the address 127.0.0.1.

Now when we were doing the IP addressing we said that:

Class A networks could range between 1 and 127.

Class B networks could range between 128 and 191.

Class C networks could range between 192 and 223.

Here we have an address 127.0.0.1 - clearly this is a Class A address.
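The class of an address depends only on its first octet, so the check is easy to sketch. Note that Class C stops at 223, because first octets from 224 upwards are used for multicast (Class D) and reserved addresses. The function name here is made up for the example.

```python
def address_class(ip):
    """Return the classful network class from the first octet of ip."""
    first_octet = int(ip.split(".")[0])
    if 1 <= first_octet <= 127:
        return "A"
    if 128 <= first_octet <= 191:
        return "B"
    if 192 <= first_octet <= 223:
        return "C"
    return "other"  # multicast, reserved, or not a usable first octet


print(address_class("127.0.0.1"))    # A
print(address_class("147.63.15.1"))  # B
print(address_class("196.6.23.14"))  # C
```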

Your loop back interface can never have any other IP address than 127.0.0.1, which means if you are setting up a network it would not make sense to allocate to Ethernet 0 a 127.0.0.1 address because on every network one must have unique IP addresses.

So allocating an IP address of 127.0.0.1 to Ethernet 0 will conflict with the loop back address, which has also got the address of 127.0.0.1.

IP addresses of hosts on the same network must be unique.

Having said that there is a technique called network address translation or NAT. NAT allows us to translate from one IP address to another, but now is not the time to discuss that.

You can have multiple network cards in any UNIX system, and that includes Linux, so a machine is not limited to a single network interface.

One could quite simply set up a network where you have two interface cards per workstation, then you can assign different IP addresses to these different interface cards.

In the notes I have also assigned IP addresses 172.16.5.1 to Workstation A and 172.16.5.2 to Workstation B.

These two workstations are physically connected to the same switch or hub, so the physical network may be a single network, but the logical network is a completely separate issue.

On one logical network we have 172.16.5 as our network, and the other logical network is 192.168.0.

We have a logical network and a physical network and it is important to distinguish between the two: even though the machines share the same physical hub, they are logically on completely separate networks.

These two machines, or these two logical networks would be incapable of talking to one another and we would need to put a router between them in order to get them to talk to one another.

The final thing that we are going to look at doing with the ifconfig command is what is known as plumbing a device.

Plumbing a device or plumbing a network means putting two IP addresses on one link.

In a sense what this looks like is, that on an interface you can have 192.168.0.1 on eth0 and on eth0:0 you can also have 172.16.5.1.

This would allow us to have a single Linux machine to act as a gateway between two different networks. If we draw this out logically we will have networks 192.168.0 and 172.16.5, and in the middle we will have our Linux machine. This will allow us to connect two completely separate networks and force our Linux machine to be the router.

If we take this to its logical next step: when you set up your Linux machine at home and connect your modem to it, you are essentially doing exactly the same thing, turning your Linux machine into a router. The internal eth0 (your Ethernet card) might have an IP address of 10.0.0.5 and your ISP (Internet Service Provider) might give you a separate IP address of 196.6.25.15, and the only way the other hosts on your LAN can communicate with the Internet is by forcing your Linux box to be a router.

How many times can we plumb an interface?

Well certainly more than you are going to need to, but I think the maximum limit is 255.

Linux can quite easily be used as a router. Equally, it can be used as a packet shaper or as a means of creating virtual LANs (VLANs), but this is beyond the scope of this course.

To plumb your device, you may run the command:

ifconfig eth0 192.168.0.1 netmask 255.255.255.0

ifconfig eth0:1 172.16.5.1 netmask 255.255.255.0


These commands would plumb the interface. Notice that the eth0:1 gives the interface the second IP address. Using any number in place of the '1' would also work.

In order to understand routing on our host we are going to need to use the netstat command.

netstat can do all sorts of things, but probably the most useful thing is the -r option and that will show us the routing tables.

If you run the netstat -r command, after you have plumbed your interface, you should see at least the following:

linux:~ # ifconfig eth0:1 172.16.5.112 netmask 255.255.255.0
linux:~ # netstat -r
Kernel IP routing table
Destination     Gateway         Genmask         Flags   MSS Window  irtt Iface
172.16.5.0      *               255.255.255.0   U         0 0          0 eth0
192.168.1.0     *               255.255.255.0   U         0 0          0 eth0
default         192.168.1.1     0.0.0.0         UG        0 0          0 eth0
linux:~ #

You should see a table, and in the Destination column you should see your directly connected network (192.168.0.0 for the addresses we configured earlier). The gateway would be 0.0.0.0 (netstat displays this as "*"), the mask would be whatever the mask was set to, in this case a Class C mask, the flag will indicate a "U" to show that the route is up, and the Iface should indicate eth0.

You should see an entry for the 172.16.5 network, and finally, if you have a default gateway set, you should see an entry for it, with the Gateway column showing whatever your gateway was set to.

The difference in this line is that your destination will be 0.0.0.0 (displayed as "default"), which indicates the default route.

Remember that the default gateway is the place we send network traffic in the event that we don't know where else to send it.

The default gateway is currently up (indicated by the 'U' flag) but the flag also indicates a 'G' which shows that it is a gateway and the interface is eth0. Our routing table tells us where and how to route information around the network.

Now we know how to look at our routing table, we will also need to know how to add routes to it. Most importantly, we will need to add a default route to our host. Again, like configuring the IP address on-the-fly we can configure the default gateway on-the-fly too using the route command:

route add default gw 192.168.1.1

Consult your routing table again to verify that this is indeed the default gateway now. Not only can we add the default gateway, we can also add networks that we may know about. Assume for a minute that there is another network 10.121.20.x to which we can gain access, but not directly. In other words, we have a host through which we route in order to gain access to this network. Assume too that this host is on our local network, with the IP address 192.168.1.100. Now we can add a route to indicate to our frames (or packets) how to get to hosts on this network (10.121.20.x). We could do this as follows:

route add -net 10.121.16.0 netmask 255.255.248.0 gw 192.168.1.100 metric 1

Note here we need to provide the route with the correct network address for the netmask that we are supplying [head back to your ipcalc program to verify this]. The gw (gateway) indicates the host that will accept frames on behalf of this network and the metric indicates how many hops we will need to do prior to getting on this network.
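If you do not have ipcalc to hand, the same check can be sketched with Python's standard ipaddress module. The example takes an address on the 10.121.20.x network together with the netmask 255.255.248.0 and lets the module clear the host bits.

```python
import ipaddress

# strict=False lets us pass a host address and have the host bits cleared.
network = ipaddress.ip_network("10.121.20.0/255.255.248.0", strict=False)

print(network.network_address)  # 10.121.16.0
print(network.prefixlen)        # 21
print(ipaddress.ip_address("10.121.20.7") in network)  # True
```

With this netmask the network address is 10.121.16.0 and not 10.121.20.0, which is why the route is added for 10.121.16.0.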

So much for on-the-fly configuration. To set up your networks, and especially your default gateway, permanently on your hosts, you need to edit a file. On RedHat Linux (and Fedora) you will need to edit /etc/sysconfig/network-scripts/ifcfg-eth0 and add the line GATEWAY=<your default gateway>. On Debian, this is configured in the /etc/network/interfaces file, and on SuSE it is configured using YaST.

There are a couple of things we need to wrap up when talking about networks.

CIDR (pronounced cider), or Classless Inter-Domain Routing, is another way of expressing our network/netmask pair.

We may have an IP address 192.168.16.65 with a class C netmask 255.255.255.0, or we could express this as 192.168.16.65/255.255.255.0. This is quite a mouthful.

CIDR is just another way of expressing the same thing.

You can express the IP address/subnetmask combination as 192.168.16.65/24.

What does 24 mean? It means 3 sets of 8 bits (24 bits in total) where each bit in the byte is a '1'. So if we were using a 10 network address, 10.25.48.132, and we used "/8" at the end, that would tell us we are using a class A network with the class A subnetmask 255.0.0.0.

CIDR is just a very easy way of expressing this.
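Converting between the two notations is just a matter of counting '1' bits. The following sketch shows both directions; the function names are invented for this example, and netmask_to_prefix assumes a well-formed netmask whose '1' bits are contiguous.

```python
def prefix_to_netmask(prefix):
    """Turn a CIDR prefix length such as 24 into a dotted netmask."""
    mask = (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF
    return ".".join(str((mask >> shift) & 0xFF) for shift in (24, 16, 8, 0))


def netmask_to_prefix(netmask):
    """Count the '1' bits in a dotted netmask to get the prefix length."""
    return sum(bin(int(octet)).count("1") for octet in netmask.split("."))


print(prefix_to_netmask(24))               # 255.255.255.0
print(prefix_to_netmask(8))                # 255.0.0.0
print(netmask_to_prefix("255.255.248.0"))  # 21
```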

What I want to look at is troubleshooting your network. We do not know enough about networking to be complete gurus but we need to be able to at least troubleshoot any problems that we may have.

We have seen already that we can use the ping command. Now ping tells us more than that a device was able to receive and reply to packets sent. It also tells us about reachability. Reachability is one of those criteria or stats that people want to know about their network: can this device be reached on the network?

The second thing it tells us about is delay or latency.

If you look at the right hand column of the ping output you'll notice that it tells us the delay in the packets reaching their destination and returning (usually in milliseconds).

Suppose you are sitting at 192.168.0.1, or Workstation A, and you run the command ping -c 10 192.168.0.2. There will be 10 ICMP requests sent out and hopefully 10 ICMP replies returned.

So a ping will send out (by default) 64 byte packets from Workstation A which would be received by Workstation B. Workstation B would then reply with 64 byte packets and that process would be timed which will indicate the latency.

The third thing you will see that ping provides is a sequence number. A sequence number indicates the sequence that the packets were received in.

As an example, what you can try is:

ping -c 100 192.168.0.2


This will send 100 pings instead of just 10 pings.

After hitting enter, unplug the network cable for 5 or 10 seconds, then plug it in again. The sequence numbers will start off at 1 and increase until you unplug the cable. Let's say the sequence got to 10 (at which point you unplugged the cable), you waited 10 seconds, and then you plugged it back in. You should see the sequence continue at about 20.

This example assumes that ping is sending an echo request every second, which is the default, so the gap in the sequence numbers shows that some packets were lost during those 10 seconds. How many? About 10.

Finally ping gives you a summary of the response from the remote host. This summary usually appears right at the bottom where it tells you things like your RTT, which is your round trip time, the maximum and the minimum time, the average and the standard deviation as well as telling you about the packet loss.
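The figures in that summary are easy to reproduce by hand. Here is a minimal sketch that takes the number of requests sent and the list of round trip times for the replies received; the sample times are invented, the function name is hypothetical, and the standard deviation is left out to keep the example short.

```python
import statistics


def ping_summary(sent, rtts_ms):
    """Summarize replies: rtts_ms holds one round trip time per reply."""
    received = len(rtts_ms)
    loss_percent = 100.0 * (sent - received) / sent
    return {
        "sent": sent,
        "received": received,
        "loss_percent": loss_percent,
        "min": min(rtts_ms),
        "avg": statistics.mean(rtts_ms),
        "max": max(rtts_ms),
    }


# Hypothetical run: 10 requests sent, 8 replies received (times in ms).
summary = ping_summary(10, [0.4, 0.5, 0.3, 0.6, 0.4, 0.5, 0.4, 0.5])
print(summary["loss_percent"])         # 20.0
print(summary["min"], summary["max"])  # 0.3 0.6
```

The sketch assumes at least one reply came back; with no replies at all there is no round trip time to report, only 100% packet loss.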

Now because this is happening at the network layer, it would be nice to see what is happening one layer below that, at the MAC layer. For this we can use the arp command.

You can do an info on arp to see what options you have.

If you do an arp -a this will show you the full ARP table.

If you don't see the MAC address of the workstation that you are trying to ping in the ARP table, then you will never be able to ping that workstation.

In fact, Linux has another nifty command called arping. Arping is used when you get an IP address using DHCP.

So arp will tell us what happens at MAC layer and ping will tell us what happens at network layer and we can use these to troubleshoot our network.

[1] We will talk about Routers and Switches later on.

[2] If you recall your System Administration course, you will remember that almost everything on Linux/Unix is Client/Server based.

[3] Linux has a nifty program: ipcalc. It allows you to quickly display broadcast addresses, network addresses and other useful information.


Let's understand client server technology in networking: what is it, and why do we have it?

Essentially in a Unix or Linux environment we'll have a server that will serve applications and client(s) that use the applications or services from the server.

This type of technology enhances performance, because the server can execute some of the processes on behalf of the client. The server generally has more resources at its disposal than the client does, for example more memory and perhaps more hard disk space. Therefore it makes sense that the server does some of the required processing along with the client, so that there are now two processors each dealing with a part of that application - we'll come to some examples shortly.

The next reason why client server technology is exceptionally well supported in the Unix/Linux environment is because of scalability.

Scalability is a crucial factor because as our networks grow in size, increasing the number of available workstations, we will need some means of scaling our architecture to handle an increased volume of traffic and perhaps an increased number of clients. From a scalability perspective, there will be a significant performance difference between putting 10 clients on a network and putting 1000 clients on it.

Client server technology allows us to scale these networks up to relatively large client networking farms.

The idea behind client server is that the shared responsibility can be shuffled around, for example, we could choose to run certain applications on specific clients and other applications on other clients.

Let us use X11, the X Windows system in Linux, as a typical example of client server flexibility. We have an X11 service running on the server and running on the client we have the client applications such as X Clock or XI.

We could also choose to run XI on one client and not on another client.

Interoperability is a key reason why client server technology is so good.

An example of the good use of interoperability is that you may have had a DEC server, a DEC VAX for example, running X11, the X Windows system. On the client side you have a Unix machine, and because X is a standard protocol the X server could offer its services to the Unix workstation.

Another example would be a font server, where we have one or more font servers in the network. Even if the font servers ran on a DEC VAX or on a Solaris or HP Unix machine, X font clients would have access to the service. So a Unix or Linux machine would be able to use the font service offered by a VAX machine.

The final advantage of client server technology I am going to mention here is "central control".

Central control is something that is familiar to people who worked with mainframe computers, and in a way it is 'funny' how IT fashion is coming back on itself: client server technology now allows us to have central control of, for example, which applications and which services to serve on a network.

A classic example of client server technology is the Citrix windows client and server technology that we'll see being implemented for Windows clients in a lot of networks today.

So how is this central control implemented?

Well, I think the best way to describe this is to give some more examples.

Sometimes in client server the client and the server reside on the same machine. An example of this is X11, the X Windows system, as implemented in Linux. In the X Windows system, we would have an X11 server on top of the operating system, and on top of that we would have client applications.

The client application might be X Clock or, say, KAsteroids or TuxRacer. These applications are essentially our clients, and beneath our clients we are running a server.

X is a particularly interesting one because in order to run KAsteroids or TuxRacer or X Clock, we are forced to have a server provide those services. It's the responsibility of the server to talk to the video card, for example, to explain to the video card how to display a penguin racing down an ice slope.

What makes this even more interesting is the fact that every Unix or Linux system on which you are running a GUI is going to have an associated X server running.

There is no reason why you couldn't display these aforementioned clients on another machine's server. In that case we have one machine offering itself as the client and another machine offering its X11 server as the server. Clearly we could swap those around: for example, we could run X Clock on machine B but use the services offered by machine A's X11 server to display the clock.

Let's have a look at a simpler example with FTP (File Transfer Protocol). In this case we might have access to Internet Solutions, which has a repository of all the Linux ISO images that we want to download.

How do we do that?

Well, let us say that on our client's side we have a client application called gFTP (a graphical FTP client). gFTP is the client and the Internet Solutions FTP server is the server.

We use our client to contact the server, and this normally happens between different machines, unless you are running your own FTP server to ftp files from yourself to yourself, which really would make no sense.

Another example is HTTP, or web-based services, working as client and server.

On the server side we might have Apache or IIS running as the HTTP server, and on the client's side we would have our browser, such as Firebird, Galeon or Internet Explorer.

Not all processing is done on the server: the server sends a web page and the browser, on receiving that web page, interprets it and displays it in a presentable form.
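The split between an HTTP server and a client can be sketched in a few lines of Python. This is only an illustration, not how Apache or a real browser works: the page content, the `PageHandler` class and the `fetch_once` function are made-up names, and both ends run on the same machine (127.0.0.1) so that the example is self-contained.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical page content, just for this sketch.
PAGE = b"<html><body><h1>Hello from the server</h1></body></html>"


class PageHandler(BaseHTTPRequestHandler):
    """Server side: answer every GET request with the same small page."""

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def fetch_once():
    """Start a server, fetch one page as a client, then shut the server down."""
    server = HTTPServer(("127.0.0.1", 0), PageHandler)  # port 0: any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        # Client side: request the page, much as a browser would.
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        conn.request("GET", "/")
        reply = conn.getresponse()
        status, body = reply.status, reply.read()
        conn.close()
        return status, body
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    status, body = fetch_once()
    print(status, body.decode())
```

The server only hands over the raw page; turning that HTML into something presentable is left to the client, which is the division of labour described above.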

How is client server technology implemented?

One way it's implemented is with a mechanism called RPC (Remote Procedure Call), which is well outside the scope of this course.

RPC essentially gives one machine the ability to call a procedure in an application on another machine.

In programming terms you have an application program interface (API), and the client is able to, in many instances, actually call procedures on the remote machine.
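To give a feel for what "calling a procedure on another machine" looks like, here is a minimal sketch using XML-RPC from the Python standard library. XML-RPC is just one flavour of RPC, chosen here because it needs no extra software; it is not necessarily what any particular client-server product uses. The `add` procedure and the `call_remote_add` helper are made up for the illustration, and the "remote" machine is simply 127.0.0.1.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """The procedure that lives on the 'remote' machine."""
    return a + b


def call_remote_add(a, b):
    """Start an RPC server, call add() through it as a client, then stop it."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(add, "add")  # publish the procedure by name
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        # Client side: proxy.add(...) looks like a local call, but the
        # arguments travel over the network and the server does the work.
        with ServerProxy(f"http://127.0.0.1:{port}") as proxy:
            return proxy.add(a, b)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(call_remote_add(2, 3))
```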


We now have an understanding of TCP/IP and should have an understanding of client-server technology. Let's now consider how networks actually fit together.

In this chapter we will look at technology and terms such as switches, routers and hubs, Internet, Intranet, and LANs and WANs.

Two fundamental concepts in networking are the logical network and the physical network, and the difference between them. To cover these two concepts, we'll use the following example:

We have a hub or a switch with five workstations and a server connected to it. Physically, each one of these machines can see the others. In other words, they are on the same physical network. If you were to draw that in terms of a wire, they are all plugged into the same physical wire.

Because of the structure of the Internet Protocol (IP), and because we can have different networks, we could put one set of machines on network A, 192.168.0.x, and another set of machines on network B, 172.16.4.x.

The two sets of machines are still connected physically.

When machines are connected to the same networking backbone (wire), yet are on separate networks, these networks are termed logical networks. For example, you could have 5 machines in the address range 192.168.0.x and 5 other machines in the address range 172.16.4.x. Although they are connected to the same backbone, they are on separate logical networks.

The physical network layout is that they are all connected. The logical network layout is that they are separated, because they are on separate IP networks.

In fact, workstations on the 192.168.0 network will be unable to talk directly to workstations on the 172.16.4 network, even though they share the same wire.
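The idea of two logical networks on one wire can be checked with Python's `ipaddress` module. This is a small sketch that assumes both networks use a 255.255.255.0 mask (written /24), which is what the "x" in 192.168.0.x and 172.16.4.x suggests; the function names are made up for the example.

```python
import ipaddress

# Assumption: both logical networks use a /24 (255.255.255.0) mask.
NETWORK_A = ipaddress.ip_network("192.168.0.0/24")
NETWORK_B = ipaddress.ip_network("172.16.4.0/24")


def logical_network(address):
    """Return "A" or "B" for the network an address belongs to, else None."""
    ip = ipaddress.ip_address(address)
    for name, network in (("A", NETWORK_A), ("B", NETWORK_B)):
        if ip in network:
            return name
    return None


def can_talk_directly(first, second):
    """True only when both addresses sit on the same logical network."""
    network = logical_network(first)
    return network is not None and network == logical_network(second)


if __name__ == "__main__":
    print(can_talk_directly("192.168.0.5", "192.168.0.9"))
    print(can_talk_directly("192.168.0.5", "172.16.4.7"))
```

The first pair shares network A and can talk directly; the second pair is on the same wire but on different logical networks.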

How you connect up the network physically depends on the physical structure of your network. There are a number of different physical structures, for example Token Ring, FDDI, ATM and Ethernet.

Token Ring networking is quite old and outdated, although it's still used in some IBM sites (it was an IBM-developed physical network).

There's FDDI (Fiber Distributed Data Interface), which used to be the fastest network available, running faster than Ethernet and Token Ring. Again, it is old technology. There's ATM (Asynchronous Transfer Mode), which was touted as the next revolution in networking; it never quite materialized in the LAN but did in the WAN.

Of these different topologies, we're just going to consider Ethernet and Token Ring at this time.

A Token Ring network can be thought of as a ring of machines connected to each other. Each machine has an opportunity to pick up the Token, almost like a baton in a relay race. A machine picks up the Token and is allocated a time period with it. Once its time is up, it has to put the Token back onto the wire for the next machine to pick up. Only the machine which has the Token may send data at that time.
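The relay-race idea can be sketched as a tiny simulation. This is a toy model, not the real Token Ring protocol: the "time period" is simplified to a fixed number of frames per turn, and the station names and the `token_ring` function are made up for the example.

```python
from collections import deque


def token_ring(queues, max_frames_per_turn=1):
    """Pass a token around the ring; only the holder may send.

    queues maps each station name to the frames it wants to send.
    Returns the (station, frame) pairs in the order they went out.
    """
    ring = list(queues)  # order of stations around the ring
    pending = {name: deque(frames) for name, frames in queues.items()}
    sent = []
    holder = 0  # index of the station currently holding the token
    while any(pending.values()):
        station = ring[holder]
        # The holder sends until its allowance is used up or it has nothing left.
        for _ in range(max_frames_per_turn):
            if not pending[station]:
                break
            sent.append((station, pending[station].popleft()))
        # Put the token back on the wire for the next machine.
        holder = (holder + 1) % len(ring)
    return sent


if __name__ == "__main__":
    for station, frame in token_ring({"A": ["a1", "a2"], "B": ["b1"], "C": []}):
        print(station, frame)
```

Because only one station can hold the token at a time, two stations never send at once in this model.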

As a topology, Token Ring is far superior to Ethernet. The joy of Token Ring is that, even when you broadcast, there should be very little shouting on the network.

Token Ring, however, has taken a back seat and is rarely implemented any longer, for a number of reasons, mostly because of its slow data rates: it typically ran at either 4 Mbps or 16 Mbps.

It was also a much more complex and expensive technology than Ethernet.

So Token Ring died and people adopted Ethernet as their topology of choice.

Ethernet was, and is, what's called a contention-based network. In other words, every machine on the network shares the same piece of wire. Ethernet is just a topology where "he who shouts the loudest gets to be heard".

Ethernet comes in a whole range of flavors. Ethernet in its vanilla, or original, flavor ran at 10 Mbps.

Note: I'm not going to go into exactly how fast that is, but compare it to a modem, which will generally dial up to your ISP at 56 Kbps. Going from 56 Kbps to 10 Mbps is a significant step up in speed.

Development continued on Ethernet with a technology called Fast Ethernet. Fast Ethernet ran at 10 times the speed of normal Ethernet (100 Mbps), and this certainly looked like it was going to be the fastest technology around.

Now there's Gigabit Ethernet, which runs at 1000 Mbps, 10 times faster than Fast Ethernet, and this seems to be the technology that corporate companies are currently implementing around their networks.
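To put these speeds side by side, the arithmetic can be sketched as below. The figures are ideal line rates in bits per second and ignore all protocol overhead, and the 650 MB ISO image is a hypothetical file size picked for illustration. On these numbers, 10 Mbps Ethernet is roughly 180 times faster than a 56 Kbps modem.

```python
# Ideal line rates in bits per second (protocol overhead is ignored).
RATES_BPS = {
    "56 Kbps modem": 56_000,
    "Ethernet (10 Mbps)": 10_000_000,
    "Fast Ethernet (100 Mbps)": 100_000_000,
    "Gigabit Ethernet (1000 Mbps)": 1_000_000_000,
}


def transfer_seconds(size_bytes, rate_bps):
    """Time to move size_bytes at rate_bps, with 8 bits to the byte."""
    return size_bytes * 8 / rate_bps


if __name__ == "__main__":
    iso_size = 650 * 1_000_000  # hypothetical 650 MB ISO image
    for name, rate in RATES_BPS.items():
        print(f"{name}: {transfer_seconds(iso_size, rate):,.1f} seconds")
```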

I said Ethernet was a contention-based technology and, in fact, the method it uses is called CSMA/CD, or Carrier Sense Multiple Access with Collision Detection.

To break this up: Carrier Sense essentially means that I put my ear to the wire, a bit like listening at a railway line. If I don't hear a train coming, in other words if I don't sense that anybody is transmitting at the time, I hop on the wire and off I go.

Multiple Access means there could be another train there, just around the corner, that I haven't managed to hear or see. So multiple people access this railway line at the same time and, of course, we're going to get a collision. As soon as there is a collision, there's a ripple effect down the railway line.

Everybody that's listening to the railway line hears this collision and we all back off for a random amount of time. When we sense the coast is clear, we hop back on the railway line and off we go.

So you can see that it's not exactly the most efficient way of communicating, but it does work and it's simple.
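The "collide, back off for a random time, try again" cycle can be sketched as a toy simulation. This is a simplified model under several assumptions that are not in the text above: time is chopped into equal slots, every station has exactly one frame to send, and the random wait grows with each collision (a scheme known as binary exponential backoff). The function and station names are made up.

```python
import random


def simulate_csma_cd(stations, seed=0, max_slots=10_000):
    """Toy slot-by-slot model of stations sharing one wire.

    Returns (delivered, collision_count), where delivered lists
    (slot, station) pairs in the order the frames got through.
    """
    rng = random.Random(seed)
    wait = {name: 0 for name in stations}      # slots left to back off
    attempts = {name: 0 for name in stations}  # collisions seen per station
    delivered = []
    collision_count = 0

    for slot in range(max_slots):
        if not wait:
            break  # every station has sent its frame
        # Stations whose back-off has run out try to transmit in this slot.
        ready = [name for name in wait if wait[name] == 0]
        # Everyone else keeps counting down.
        for name in wait:
            if wait[name] > 0:
                wait[name] -= 1
        if len(ready) == 1:
            # Only one train on the line: the frame gets through.
            delivered.append((slot, ready[0]))
            del wait[ready[0]]
        elif len(ready) > 1:
            # Collision: each station involved backs off for a random time.
            collision_count += 1
            for name in ready:
                attempts[name] += 1
                window = 2 ** min(attempts[name], 10)
                wait[name] = rng.randrange(window)
    return delivered, collision_count


if __name__ == "__main__":
    delivered, collisions = simulate_csma_cd(["A", "B", "C"])
    print("collisions:", collisions)
    for slot, name in delivered:
        print(f"slot {slot}: {name} sent its frame")
```

All stations start out ready, so the very first slot is always a collision; after that the random waits spread the stations out until each one gets the wire to itself.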

With this CSMA/CD we have what's known as the MTU (Maximum Transmission Unit).

To continue our previous analogy with the train, the MTU is how long the train can be.

There's a maximum size that our train can be when we stick it on the railway line. The maximum length of a train in Ethernet is 1500 bytes, and that's the maximum transmission unit.

If you run the ifconfig command on the command line (logged in as root), you'll see an entry called MTU, in capital letters, which will be set to 1500, the standard size of an Ethernet train.
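As a rough illustration of what the MTU means in practice, the sketch below cuts a block of data into trains of at most 1500 bytes. It deliberately ignores the headers that real protocols add to every train, so the numbers are a simplification; the function names are made up.

```python
import math

MTU = 1500  # maximum Ethernet train length in bytes, as described above


def frames_needed(payload_bytes, mtu=MTU):
    """How many trains it takes to carry payload_bytes (headers ignored)."""
    return math.ceil(payload_bytes / mtu)


def split_into_trains(data, mtu=MTU):
    """Cut data into chunks that are each no longer than the MTU."""
    return [data[start:start + mtu] for start in range(0, len(data), mtu)]


if __name__ == "__main__":
    data = bytes(4000)  # 4000 bytes of dummy data
    print(frames_needed(len(data)))
    print([len(train) for train in split_into_trains(data)])
```

For 4000 bytes this gives three trains: two full ones of 1500 bytes and a final one of 1000 bytes.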

Now we've seen from the TCP/IP stack, that at the bottom there, we'll have a physical layer and this is what we are now referring to as Ethernet.

Note: Of course, if you have Token Ring then the physical layer will be Token Ring.

Above that, we have the Media Access Control (MAC) layer, and in fact any 2 servers or workstations can only talk to one another via the MAC address. They should be using the same physical connection. (Not strictly true, but we'll cover that just now.)

In other words, if 2 workstations, a server and a workstation, or 2 clients want to talk to one another, they can only do so using their MAC addresses.

You can use the "ifconfig" command to determine the MAC addresses of your NICs.

Two machines cannot talk to each other if they have the same MAC address. It would be like the postman trying to deliver mail to No. 21 in a street where 2 or 3 houses all have the number 21. Clearly the postman's not going to know which one to deliver to.

Every Network Interface Card on the network needs a unique MAC address and these are supplied by the manufacturers of the NIC.

The MAC address is 6 bytes long, written as 12 hexadecimal digits, and is broken down into two halves of 3 bytes (6 hexadecimal digits) each. The first 3 bytes are the manufacturer's identifier, and the remaining 3 bytes are the unique identifier given to this particular network interface card by that manufacturer. There are hundreds of manufacturers of network interface devices around the world.
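Splitting a MAC address into its manufacturer part and its card part can be sketched as follows. A MAC address is 6 bytes long, so each part is 3 bytes (six hexadecimal digits). The `split_mac` function is a made-up helper, and looking up which manufacturer a prefix belongs to is left out.

```python
import re


def split_mac(mac):
    """Split a MAC address into (manufacturer part, card part).

    Accepts colon- or dash-separated addresses such as 00:00:86:3C:A3:0A.
    """
    normalized = mac.strip().lower().replace("-", ":")
    if not re.fullmatch(r"[0-9a-f]{2}(:[0-9a-f]{2}){5}", normalized):
        raise ValueError(f"not a MAC address: {mac!r}")
    octets = normalized.split(":")
    # First three bytes identify the manufacturer, last three the card.
    return ":".join(octets[:3]), ":".join(octets[3:])


if __name__ == "__main__":
    manufacturer, card = split_mac("00:00:86:3C:A3:0A")
    print("manufacturer part:", manufacturer)
    print("card part:", card)
```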

To find out the MAC address of the workstation you are on, you can type:

    ifconfig eth0

    eth0      Link encap:Ethernet  HWaddr 00:00:86:3C:A3:0A
              inet addr:192.168.2.233  Bcast:192.168.2.255  Mask:255.255.255.0
              inet6 addr: fe80::200:86ff:fe3c:a30a/64 Scope:Link
              UP BROADCAST NOTRAILERS RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:13655 errors:0 dropped:0 overruns:0 frame:0
              TX packets:5502 errors:0 dropped:0 overruns:0 carrier:0
              collisions:6 txqueuelen:1000
              RX bytes:7570021 (7.2 Mb)  TX bytes:632146 (617.3 Kb)
              Interrupt:3 Base address:0x300

The MAC address is the hexadecimal number after HWaddr. As in: HWaddr 00:00:86:3C:A3:0A
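If you want to pick the MAC address and the MTU out of this output in a script, a sketch along these lines would do. It assumes the output format shown above (with `HWaddr` and `MTU:` labels); newer versions of ifconfig print these fields differently, so the patterns would need adjusting there. The sample text is a shortened copy of the output above, and `parse_ifconfig` is a made-up name.

```python
import re

# Shortened copy of the ifconfig output shown above.
SAMPLE = """\
eth0 Link encap:Ethernet HWaddr 00:00:86:3C:A3:0A
inet addr:192.168.2.233 Bcast:192.168.2.255 Mask:255.255.255.0
UP BROADCAST NOTRAILERS RUNNING MULTICAST MTU:1500 Metric:1
"""


def parse_ifconfig(text):
    """Pull the MAC address and MTU out of old-style ifconfig output."""
    mac = re.search(r"HWaddr\s+((?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2})", text)
    mtu = re.search(r"MTU:(\d+)", text)
    return {
        "mac": mac.group(1) if mac else None,
        "mtu": int(mtu.group(1)) if mtu else None,
    }


if __name__ == "__main__":
    print(parse_ifconfig(SAMPLE))
```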

In our CSMA/CD model we used a couple of terms that we need to define further, and the first is "BROADCAST packets".

Broadcasts are really exactly what the name implies. For example, KFM Radio is broadcast radio: the station doesn't know who is receiving the signal, but it still broadcasts it. If you turn your radio on, you hear it. If you turn it off, you don't.

Broadcast packets are similar in that a workstation, server or network interface card will broadcast to every workstation on that network, and part of an Ethernet card's job is that if it receives a broadcast, it must listen to that broadcast.

The next concept is the concept of "UNICAST".

Unicasts are packets where one workstation is talking directly to another. If you are having a conversation on the telephone, you're having a unicast conversation. Well, provided it's not a conference call.

You're having a unicast conversation because you are talking directly to the person on the other side.

The final term we used is "MULTICAST".

That would be the equivalent to a conference call as mentioned above where there are a number of recipients who are tuned into that conversation.

Essentially, the distinction between these is that broadcasts are very noisy. In other words, when I start broadcasting, I hold the loud hailer up and I start calling the odds. Nobody has any opportunity to talk back to me.

When I have a unicast or multicast conversation, it's a conversation: I'm talking to a client (or clients), and the client (or clients) is talking to me.

The same happens on a network, so let's look at a couple of examples.

Unicasts happen when we use secure shell (ssh) or ftp to connect to a particular client or server - we are then having a one-on-one conversation with that server.

Broadcasts, on the other hand, might be things like DHCP (Dynamic Host Configuration Protocol), ARP and BOOTP. With DHCP, for example, the client needs an IP address and it broadcasts a request to the network saying, "Please, I need an IP address, somebody help me." It's up to the server to respond. Broadcast messages are heard by all workstations. Although everybody receives the broadcast, only the DHCP server actually responds.

Broadcast services are noisy. That is, the more broadcast services you have on your network, the less opportunity you have of talking in a UNICAST manner - between client and client, client and server or server and server.
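At the IP level, the three kinds of traffic can be told apart by the destination address. The sketch below uses the network from the ifconfig output earlier (192.168.2.0 with mask 255.255.255.0, whose broadcast address is 192.168.2.255). The multicast range it relies on (224.0.0.0 to 239.255.255.255) is not covered in the text above, and `cast_type` is a made-up helper.

```python
import ipaddress


def cast_type(destination, network):
    """Classify a destination IP as broadcast, multicast or unicast."""
    net = ipaddress.ip_network(network)
    ip = ipaddress.ip_address(destination)
    # Either the network's own broadcast address or the all-ones address.
    if ip == net.broadcast_address or ip == ipaddress.ip_address("255.255.255.255"):
        return "broadcast"
    # Addresses from 224.0.0.0 to 239.255.255.255 are reserved for multicast.
    if ip.is_multicast:
        return "multicast"
    return "unicast"


if __name__ == "__main__":
    for destination in ("192.168.2.233", "192.168.2.255", "224.0.0.1"):
        print(destination, cast_type(destination, "192.168.2.0/24"))
```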