Copyright © 2004 The Shuttleworth Foundation

Unless otherwise expressly stated, all original material of whatever nature created by the contributors of the Learn Linux community, is licensed under the Creative Commons license Attribution-ShareAlike 2.0.

What follows is a copy of the "human-readable summary" of this document. The Legal Code (full license) may be read here.

You are free:

to copy, distribute, display, and perform the work

to make derivative works

to make commercial use of the work

Under the following conditions:

Attribution. You must give the original author credit.

Attribution. You must give the original author credit.

Share Alike. If you alter, transform, or build upon this

work, you may distribute the resulting work only under a license identical to this one.

Share Alike. If you alter, transform, or build upon this

work, you may distribute the resulting work only under a license identical to this one.

For any reuse or distribution, you must make clear to others the license terms of this work.

Any of these conditions can be waived if you get permission from the copyright holder.

Your fair use and other rights are in no way affected by the above.

This is a human-readable summary of the Legal Code (the full license).

2005-01-25 21:24:22

| Revision History | |

|---|---|

| Revision 0.0.1 | 01/NOV/2004 |

| Initial version | |

Table of Contents

- 1. Apache

- 2. Berkley Internet Name Daemons (BIND)

- Introduction

- Our sample network

- A review of some theory on domains and sub-domains

- DNS - Administration and delegation

- Name resolution

- Caching replies

- Reverse queries

- Masters and slaves

- Configuration of the Master Server

- The named configuration file

- Error messages

- Starting the DNS Server

- Troubleshooting zone and configuration files

- Configuring your resolver (revisited)

- The nameserver directive

- The search directive

- The domain directive

- The sortlist directive

- Master server shortcuts

- The $ORIGIN directive

- The @ directive

- The $TTL directive

- Configuring the Slave Name Server

- Research Resources:

- 3. The SQUID proxy server

- 4. Configuration of Exim or Sendmail Mail Transfer Agents

- Index

List of Figures

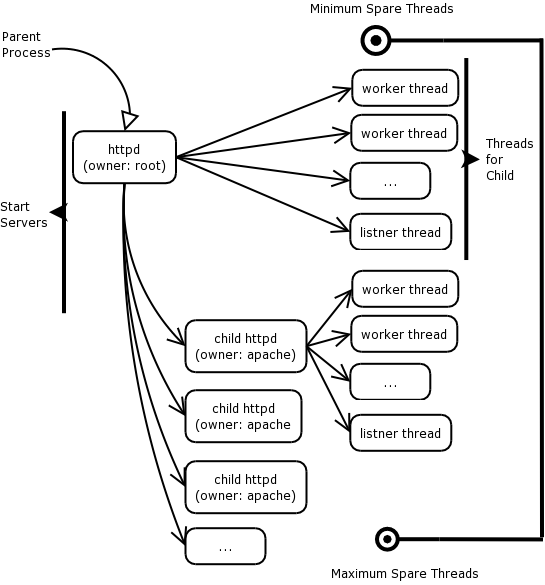

- 1.1. The Apache Worker Module

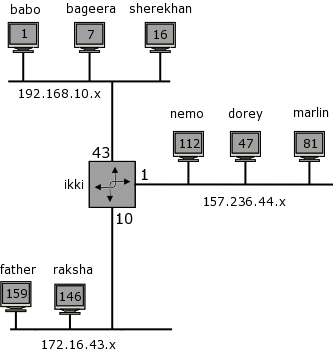

- 2.1. Our sample network

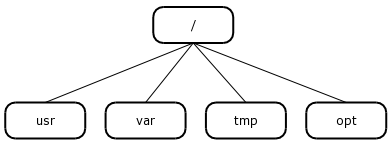

- 2.2. Unix Filesystem hierarchy

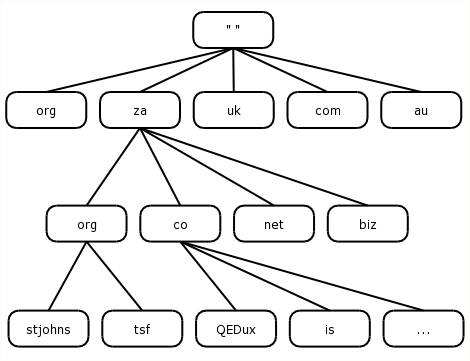

- 2.3. Domain name hierarchy

- 2.4. Domains

- 2.5. gov.za zones

- 2.6. Reverse Map

- 2.7. The Sample Network

- 2.8. SMTP Process

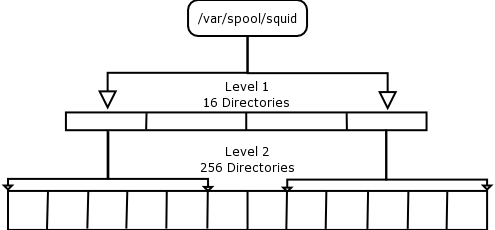

- 3.1. Squid Directory Levels

Table of Contents

Apache is a web server, that has it's roots in the CERN web server, but naturally has come a long way since those early days. It is the most widely used web server on the Internet today, and it is unlikely that much will topple it from that position in the near future. It's widespread use can, in some part, be attributed to the ease with which it can be integrated with content technologies like Zope, databases like MySQL and PostgreSQL and others (including Oracle and DB2) and the speed and versatility offered by Web rapid application development (RAD) languages like Personal Home Page (PHP). It is highly configurable, flexible and most importantly, it is open. This had lead to a host of development support on and around Apache. External modules such as mod_rewrite, mod_perl and mod_php have added fist-fulls of functionality as well as improved the speed with which these requests can be serviced. It has, in no small part, played a role in the acceptance of the Linux platform in corporate organizations.

In this module, we will learn how to configure and optimize Apache to serve web pages - it's primary function (although by no means it's only use). We will also learn how to write some simple CGI scripts for use in generating interactive web pages. But enough. Let's dive right in.

The world wide web runs on a protocol known as http or the hypertext transport protocol. This was first developed by Tim Berners-Lee in 1989. His initial idea was to provide a mechanism of linking similar information from disparate sources. Similar in concept to the way your mind might work. Http is the protocol that is the most utilized on the Internet today. It is still part of the TCP/IP protocol stack, just the same as SMTP, ICMP and others, but's it's responsibility is transferring data (web pages) around the Internet.

Apache comes in two basic flavors: Apache version 1.3.x and version 2.x. Since the configuration of these two differ quite substantially in some places, we will focus this course on the configuration of version 2. Should you still be running version 1.3, we suggest you upgrade. You'll need to do it at some point, so the sooner the better (IMHO). If you can't, most of this course will apply to you, but your mileage may vary somewhat.

The Apache web server has been designed to be used in either a modular or non-modular way. In the former, modules are compiled separately from the core Apache server, and loaded dynamically as they are needed. This, as you can imagine, is very useful as it keeps the core software to a minimum, thereby using less operating system resources. The downsides of course are that the initial startup of the server is a little slower, and the overhead in loading a module adds to the latency in offering web pages. The latter means of compiling Apache is to include all the "modules" in the core code. This means that Apache has a bigger "footprint", but the latency overhead is down to the bare minimum. Which means you use is based upon personal choice and load on you web server. Generally though, when you unpack an Apache that has been pre-compiled (i.e. It's already in a .deb or .rpm package format), it is compiled to be modular. This is how we'll use it for this course.

The core Apache server is configured using one text configuration file - httpd.conf. This usually resides in /etc/httpd, but may be elsewhere depending on your distribution. The httpd.conf file is fairly well documented, however there are additional documentation with Apache that is an excellent resource to keep handy.

The server has 3 sections to the configuration file:

The global configuration settings

The main server configuration settings

The virtual hosts

In this part of the configuration file, the settings affect the overall operation of the server. Setting such as the minimum number of servers to start, the maximum number of servers to start, the server root directory and what port to listen on for http requests (the default port is 80, although you may make this whatever you wish).

The majority of the server configuration happens within this section of the file. This is where we specify the DocumentRoot, the place we put our web pages that we want served to the public. This is where permissions for accessing the directories are defined and where authentication is configured.

Hosting of many sites does not require many servers. Apache has the ability to divide it's time by offering web pages for different web sites. The web site www.QEDux.co.za, is hosted on the same web server as www.hamishwhittal.org.za. Apache is operating as a virtual host - it's offering two sites from a single server. We'' configure some virtual hosts later in the course.

So, let's begin by configuring a simple web server, creating a web page or two and build on our knowledge from there.

In it's simplest form, Apache is quite easy to configure. Freshly unwrapped out of the plastic, it is almost configured. Just a couple of tweaks and we're off.

Directives are keywords that are used in the configuration file. The listen directive for example would tell the server what port it will be listening on.

Listen 80

|

Will listen on port 80, the default web server port. Directives are case insensitive, so "Listen" and "listen" will be the same directive.

This is the email address of the administrator of this web server. Generally, web administrators use some generic name such as webmaster@QEDux.co.za, rather than their own name. I suggest you follow that convention. How do you get this email relayed to your personal email address? Create an alias on the mail server (which is covered in the configuration of the MTA).

In my case, I've set mine as follows:

ServerAdmin webmistress@QEDux.co.za

|

Finally, to get us going, we need to verify our DocumentRoot directive. This dictates where out actual .html pages will reside. The DocumentRoot on my machine is:

DocumentRoot /var/www/html

|

Since this where the html files reside, you will need to put a file in this directory called index.html. The index.html file is attached to this course and is called index-first.html. Not only will you will need to copy it to your DocumentRoot directory but you will also be required to rename it to index.html. When a client enters the URL in their browser as follows:

http://www.QEDux.co.za/

|

they expect to get the index.html page in return. The web server in turn, appends the "/" to the end of the DocumentRoot in order to find the page in question. This, in essence is similar to a chroot in Linux. When you chroot, you "hide" the real path to the file system, presenting the user with what looks like the root directory. Here the same applies. You would not want your clients to be able to see that the real directory lives in /var/www/html, they should just see it a "/"

Starting Apache can be done from the command line. However before you jump in boots and all, it might be wise to run your httpd.conf file through the syntax checker. Start by getting help on the httpd options:

httpd -?

|

Once you've scanned the options (and of course read the man page for httpd ;-), you can run the syntax checker using the switch:

httpd -t

|

which should tell you whether you've made any glaring errors in the httpd.conf file. If not, we're in business. Now run:

httpd

|

This will pause briefly on the command line and then return you to the prompt. Now, it is worth looking at the httpd process BEFORE looking at the web page (index.html) you copied to the DocumentRoot earlier. To do this type:

ps -ef|grep httpd

|

In my case, I see:

root 1983 1 2 22:36 ? 00:00:00 httpd

|

Now, using a text browser like links or lynx, type the following command:

lynx http://your-IP-address/index.html

|

Bingo, the page is served to you. If you don't have lynx or links (both text based browsers) then fire up your old trusty GUI browser and type in the address.

Now, we need to look back at the httpd processes running on your system after you've retrieved the web page. Again type:

ps -ef|grep httpd

|

Now, in addition to the one server owned by root, another stack of servers are running, all being owned (in my case) by Apache.

apache 1791 1788 0 10:44 ? 00:00:00 /usr/sbin/httpd

apache 1792 1788 0 10:44 ? 00:00:00 /usr/sbin/httpd

......

apache 1798 1788 0 10:44 ? 00:00:00 /usr/sbin/httpd

|

Why is this? Why are there many servers started when you purposely only started one?

Apache has been designed to run using child processes and threading. In essence, these features make it possible for Apache to service incoming client requests in a timely and efficient manner. It is important to understand the difference between threading and child processes. While this is not essential to the basic configuration of Apache it will help you understand how Apache is servicing the client requests.

Apache uses two sets of modules to address these modes of operation, namely:

the worker module for the threading and

the prefork module for the handling of the child process

The diagram below is a schematic showing the worker module in action.

It works as follows:

The following description should be read in conjunction with the diagram above. The root user starts the initial process, in order that Apache can use a low numbered port (80). Thereafter, this root-owned process forks a number of child processes. Each child process starts a number of threads (ThreadsPerChild directive) to answer client requests. Child processes are started or killed in order to keep the number of threads within the boundaries specified in MinSpareThreads and MaxSpareThreads. Every child forks a single listener thread which will accept incoming requests and forward them to a worker thread to be answered. Other parameters used in the worker.c directive are:

MaxClients: This is the maximum number of clients that can be served by all processes and threads. Note that the maximum active child processes is obtained by:

MaxClients / ThreadsPerChild

|

ServerLimit is the hard limit of the number of active child processes. This should always be greater than:

MaxClients / ThreadsPerChild

|

Finally, ThreadLimit is the hard limit of the number of threads. This should also be larger than ThreadsPerChild

All this is bounded by the IfModule worker.c </IfModule> directives. On the whole, you probably will not need to modify these settings, but at least you know what they are for now.

Handling requests using the prefork module is similar in many respects to the worker module, the main difference being that this is not a threading modules, meaning that threads do not answer the client http requests, the child processes themselves do. Again, root starts the initial httpd process in order to bind to a low-numbered port.

The startup process then starts a number of child processes (StartServers) to answer incoming requests. Since children in the prefork module do not use threads, they have to answer the requests themselves. Apache will regulate the number of servers. MinSpareServers is the minimum number of spare servers that will be running at all times, with the upper limit bounded by MaxSpareServers. The MaxClients will be the maximum number of client requests that may be answered at one time. Finally, the MaxRequestsPerChild is the number of requests that a single child process can handle. All these are configurable within the IfModuleprefork.c</IfModule> directives, however the defaults will probably suite you for the purpose of this course and probably for a server in the office too.

By default, web servers will mostly listen on port 80. While this is not a prerequisite, the browsers almost without fail will try to connect to a web server on port 80. So, the two need to co-encide - naturally. Linux ports below 1024 are considered well-known and only root can bind to these ports from the operating systems level. Hence the need to start Apache as root, and thereafter switching to an Apache (or other) user. As of Apache 2, a port must be specified within the configuration file as defined by the Listen directive. By default, Apache will offer web pages on every IP address on the server on this port. In some instances, this may not be the desired operation. Thus the Listen directive can also take an IP address and a port number as follows:

Listen 10.0.0.2:1080

Listen 192.168.1.144:80

|

Here the server contains two host addresses each answering http requests on different ports.

As mentioned earlier, Apache can be used as a monolithic server (all modules required are compiled into the executable), or in a modular fashion using dynamic shared objects (DSO's). The latter is the preferred means of using Apache as the server has a smaller memory footprint. we'll discuss the DSO method of configuring and using Apache, and leave it up to you, the learner, to read the documentation if you wish to do anything different from this.

Modules are loaded into the core server using the mod_so module. In order to see what modules are compiled into Apache running on your machine, you can issue the command (on the command line):

httpd -l

|

On my machine, this command returns:

linux:/ # httpd -l

Compiled-in modules:

core.c

prefork.c

http_core.c

mod_so.c

|

Clearly, from our discussion earlier on worker versus prefork modules, my Apache will use the prefork directives. Also, in order for Apache to be able to load modules as required, it needs the mod_so compiled in too. If it was not compiled in, it would be unable to load itself, never mind any other modules!

The LoadModule directive will load the associated module when needed. The syntax for this directive is straight-forward.

LoadModule foo_module modules/foo_mod.so

|

This will load foo_module from the file in the modules directory called foo_mod.so.

Once this is configured, there's little else to do. There are however, configuration files that may be associated with each module. PHP for example has a configuration file to specify run-time configuration directives. Configuration files usually reside in the same (or similar) place as your httpd.conf file described earlier. On my system, these files reside in:

/etc/httpd/conf.d/

|

Included below is the php.conf file from this directory.

#

# PHP is an HTML-embedded scripting language which attempts to

# make it easy for developers to write dynamically generated

# webpages.

#

LoadModule php4_module modules/libphp4.so

#

# Cause the PHP interpreter handle files with a .php extension.

#

<Files *.php>

SetOutputFilter PHP

SetInputFilter PHP

LimitRequestBody 524288

</Files>

#

# Add index.php to the list of files that will be served

# as directory indexes.

#

DirectoryIndex index.php

|

This configuration file is "appended" to the httpd.conf file when the mod_php.so is loaded. You may notice that the structure of this file looks very similar to the Apache configuration file - for good reason.

Documentation of the modules is generally shipped with the Apache manual, which will reside on the default web site on the web server. In my case, my default DocumentRoot is /var/www/html, so the manual for Apache resides in /var/www/manual, and the module manuals reside in /var/www/manual/mod/.

We'll cover the use of some of these modules during the course. For now though, accept that the modules can be used for a variety of purposes.

As mentioned earlier, Apache will run as root on startup (if you want to use low-numbered ports). Once this is done though, the server will start child process as the user/group specified in the server configuration file. The User and Group directives specify this.

User <your Apache user here>

Group <your Apache group here>

|

![[Note]](../images/admon/note.png) | Note |

|---|---|

Starting child processes as this user will have an effect on your CGI scripts running later. We'll cover CGI and a program called suexec later in the course, but it is worth remembering this fact. | |

Often a server may not be named the same as the host you wish your users to refer to it as. For example, my server in my office is called ipenguini.QEDux.co.za, while I wish users wanting to contact my web site to use www.QEDux.co.za. Since these are in fact the same server (using the CNAME Internet Resource Record in the DNS), I would put my ServerName as www.QEDux.co.za.

This directive is essential to the running of Apache. It dictates where the contents on the web site will reside. Assume that users wish to contact my web site www.QEDux.co.za. They will type in the following URL:

http://www.QEDux.co.za/index.html

|

Clearly, they could have left out the index.html, but I have put it in here for a reason. Now, presumably, your index.html file does not reside in the root directory. In my case it lives in:

/var/www/QEDux.co.za/html

|

So my DocumentRoot directory is set to:

DocumentRoot /var/www/QEDux.co.za/html

|

The DocumentRoot directive translates in the URL to the "Apache root directory /", hence the "/" on the end of the URL

http://www.QEDux.co.za/

|

We wouldn't want users to type in:

http://www.QEDux.co.za/var/www/QEDux.co.za/html/index.html

|

Apart from this presenting a security problem, it would confuse the users no end.

In sum then, the DocumentRoot directory specifies the point of departure for the index.html page and the rest of the web site. In essence, it "hides" the /var/www/QEDux.co.za/html, parading it as "/" to the user.

OK. With all this new-found knowledge, you're wanting to get cracking with your new web server, but wait. There's more. While we have defined the DocumentRoot and the ServerName, we still need to define what operations can be performed in directories within the DocumentRoot.

In order to do this, Apache has "container" blocks. These blocks are very XML-ish in nature. For example, there is a Directory container, that contains information about a particular directory. The Directory container is closed using a </Directory> container directive. Other containers include DirectoryMatch, Files, FilesMatch, etc. The general format of a container is:

<Directory>

Some options and directives go here

Some more options and directives

</Directory>

|

By default, we need to specify a really secure set of directives for the root ("/") directory. [1]

<Directory />

Options FollowSymLinks

AllowOverride None

</Directory>

|

Without going in to too much detail here (it will be covered later), we allow users to follow symbolic links from the root directory, but allow no other options to override the options in this container. Options can be overridden using a .htaccess file (described later) if the AllowOverride was set to "All".

Once we've set a very restrictive set of permission for the root directory, we set up the permission for the DocumentRoot directory.

<Directory "/var/www/QEDux.co.za/html">

Options Indexes FollowSymLinks

AllowOverride None

Order allow,deny

Allow from all

</Directory>

|

Here, the options are a little more relaxed. First, we allow users to follow symbolic links. Also, if they happen upon a directory without an index.html or default.html file, they will obtain an index of the files in the directory - in a similar way you get an index of your directory if you type:

file:///var/log

|

into your browser window. We still disallow any directives set in a .htaccess file from overriding the directives set in this container, and finally we set access to this directory. In this example, we will check the Allow list, and then the deny list of whether this user can gain access. This may be particularly helpful when restricting web sites to say, the help desk operators, or the call-centre people. In the example, we first check the allow list (which admits everyone) and then the deny list (which is absent here), and allow or deny people accordingly.

After setting your Directory container symbol (adjust to your DocumentRoot), you are ready to start your web server. In order to do this, you will probably want to create a default web page. I have included one for download with this course - index-first.html. Rename it to index.html and put it in your DocumentRoot directory. Point your browser at your machine and voila, you should see the web page.

This is an example of the directory container for the "cgi-bin" directory. Note how the directory is specified.

<Directory "/srv/www/cgi-bin">

AllowOverride None

Options +ExecCGI -Includes

Order allow,deny

Allow from all

</Directory>

|

![[Note]](../images/admon/note.png) | Note | |

|---|---|---|

In order to use your machine name rather than your IP address in the URL, you will have to modify your hosts file to contain the FQDN of your hosts. My host for example is defender.QEDux.co.za, so I put www.QEDux.co.za as an alias in my hosts file as follows:

| ||

Now that you have got the basic thing going, it's time to return to those options in the containers. Options are controlled using a "+" or a "-" in front of the option. No sign preceding an option is taken to mean a "+". In our preceding examples, the option FollowSymLinks was not preceded by a "+", but the "+" was implied. A subtlety here is that if the option is preceded by a "+", the option will be added to any previous options as laid out elsewhere. So the options may be:

All: Allow all options, all that is except MultiViews.

FollowSymLinks: Follow symbolic links from this directory, even if the target directory does not actually reside in the same DocumentRoot directory.

SymLinksIfOwnerMatch: Follow the symbolic links, but only if the owner of the symbolic links matches the owner of the directory in which the file currently resides.

ExecCGI: Allow execution of CGI scripts in this directory

Includes: Allow server side includes. SSI will be covered in more detail later in the course.

Indexes: If the directory in which the user finds themselves does not contain any index.html or default.html (as specified by the directive DirectoryIndex), then a listing (probably with pretty icons) will be displayed.

MultiViews: This is a little more complex. Web sites can be tailored in a number of ways. They could offer your website in Xhosa for example, and the users home language would be selected automatically depending on their browser preferences. My index page may be index.xh.html as well as index.en.html. Now when a native English speaker views my site (with their browser configured appropriately), they will see the English page, while when the native Xhosa speaker views the site (browser configured correctly again), they will see the Xhosa version. Allowing MultiViews makes this possible. Language support is not the only thing available in MultiViews. Text/html versus text only, jpg or gif versus png's can also be selected based upon priorities.

The example below, taken from the Apache manual shows that text/html is preferred over straight text and gif's and jpg's are preferred over all other image types.

Accept: text/html; q=1.0, text/*; q=0.8, image/gif; q=0.6,

image/jpeg; q=0.6, image/*; q=0.5, */*; q=0.1

|

Since MultiViews are a directory based directive, they need to be enabled using the .htaccess files in a directory in order to take effect (see a description of the .htaccess file below).

let's return now to the AllowOverride directive.

The AllowOverride directive is only allowed in a Directory container.

As mentioned earlier when discussing the "/" (root) directory, when this directive is set to "None" the .htaccess files are completely ignored.

When set to "All" the directives in the .htaccess files are allowed based upon their "Context".

![[Note]](../images/admon/note.png)

Note Context refers to the context (or containers) in which directives are allowed or denied. In some contexts, directives are not allowed due to possible breaches of security, or because they don't make much sense in the context

Other override directives include:

authentication directives (AuthConfig - see mod_auth for more information),

file information directives (FileInfo - see mod_mime for more information), directory indexing (Indexes - see mod_autoindex for more information),

limitation of access to the web site (Limit - see mod_access for more information) or,

any of the options described above - provided of course they make sense in the context of the container (Options)

Using these options in, say, a .htaccess file in some web directory will allow us to limit access to that directory to individuals in a certain subnet, or show the indexes in a particular manner, etc.

The final piece of our container pie above relates to access to this page or site. This all falls under the mod_access module. mod_access is responsible for controlling access to the page/site based upon one, or a number of criteria:

Clients hostname

Clients IP address and/or subnet

Environmental variables being set

The last of these is too complex for this course and will not be discussed now. The other two are relatively easy.

We may set an allow/deny directive as follows:

Allow from all

|

which will naturally allow for access from all clients. Alternatively:

Allow from 172.16.1.0/24

|

will allow only users on the network 172.16.1.0 to connect to the web site. Others examples:

Allow from QEDux.co.za

|

Only allow clients to connect from the domain QEDux.co.za.

Allow from \

172.16.1.1 ipenguini.QEDux.co.za 192.168.10.0/255.255.255.0

|

Only allow clients on the 192.168.10.x network to connect, as well as the hosts ipenguini.QEDux.co.za and 172.16.1.1.

The deny rules work identically. The only outstanding thing is to determine the order in which these rules are applied. For that the Order directive is used - simply indicating the order in which the allow and deny directives are applied.

Assuming I have the directives:

Allow from \

172.16.1.1 ipenguini.QEDux.co.za 192.168.10.0/255.255.255.0

Deny from all

Order Allow,Deny

|

Once a match is made, no further checking is done. So, had I switched the Order directive to:

Order Deny,Allow

|

Then even the poor souls that appear in the allow directive would not be allowed through as the deny is denying everyone at the outset.

Apache is able to serve a number of different web-sites from the same machine. The way this is achieved is through the use of virtual hosts in the configuration file. It should be noted that if even a single virtual host is described, all containers outside the VirtualHosts container will be ignored. Thus, if you set up even a single virtual host, then your original web site must fall within the VirtualHosts container too.

There are two types of virtual hosts:

name based virtual hosts and,

IP based virtual hosts.

IP based virtual hosts require separate IP addresses in order to run, while name-based ones run off the same IP address, but are called different things (perhaps gadgets.co.za and widgets.co.za). We will look at name-based ones in this course. For IP based ones, consult the Apache documentation.

The first directive required is the "NameVirtualHost". This directive is used by the hosts to be associated with a single IP address. Remember, in name-based virtual hosts, there may be multiple names on a single IP address. Within the VirtualHosts container, we can include all the directives we used without virtual hosts.

An example is included below:

#the widgets website

NameVirtualHost 192.168.0.211

<VirtualHost 192.168.0.211>

ServerAdmin webmaster@widgets.co.za

DocumentRoot /var/www/widgets.co.za/html

ServerName www.QEDux.co.za

ErrorLog logs/www.widgets.co.za-error_log

CustomLog logs/www.widgets.co.za-access_log common

<Directory "/var/www/widgets.co.za/html">

Options Indexes +Includes FollowSymLinks

AllowOverride None

Order allow,deny

Allow from all

</Directory>

Alias /icons/ "/var/www/widgets.co.za/icons/"

<Directory "/var/www/widgets.co.za/icons">

Options Indexes MultiViews

AllowOverride None

Order allow,deny

Allow from all

</Directory>

ScriptAlias /cgi-bin/ "/var/www/widgets.co.za/ \

cgi-bin/"

#

# "/var/www/cgi-bin" should be changed to

# whatever your ScriptAliased

# CGI directory exists, if you have that configured.

#

<Directory "/var/www/widgets.co.za/cgi-bin">

AllowOverride None

Options None

Order allow,deny

Allow from all

</Directory>

</VirtualHost>

# The gadgets web site.

<VirtualHost 192.168.0.211>

ServerAdmin webmaster@gadgets.co.za

DocumentRoot /var/www/gadgets.co.za/html

ServerName www.QEDux.co.za

ErrorLog logs/www.gadgets.co.za-error_log

CustomLog logs/www.gadgets.co.za-access_log common

<Directory "/var/www/gadgets.co.za/html">

Options Indexes +Includes FollowSymLinks

AllowOverride None

Order allow,deny

Allow from all

</Directory>

Alias /icons/ "/var/www/gadgets.co.za/icons/"

<Directory "/var/www/gadgets.co.za/icons">

Options Indexes MultiViews

AllowOverride None

Order allow,deny

Allow from all

</Directory>

ScriptAlias /cgi-bin/ "/var/www/gadgets.co.za/cgi-bin/"

#

# "/var/www/cgi-bin" should be changed to

# whatever your ScriptAliased

# CGI directory exists, if you have that configured.

#

<Directory "/var/www/gadgets.co.za/cgi-bin">

AllowOverride None

Options None

Order allow,deny

Allow from all

</Directory>

</VirtualHost>

|

As you can see, the widgets and the gadgets web sites are hosted on the same web server. A client pointing their browser to widgets.co.za would be directed to the widgets web site, while the gadgets.co.za This is virtual hosting. It can get more complex than this, but for now, this is adequate. Obviously the more virtual hosts you require, the more VirtualHosts containers you will require too.

CGI is handled in Apache using a program called suexec. This program is used to run applications from within Apache. Naturally, this is a fairly dangerous application to allow anyone access to use. A simple CGI script, with malicious intent can wreak havoc on you system. It is wise to keep tight control over the CGI that is allowed to run on your system. CGI is quite simple really. It is a piece of source code (such as perl, C, Java, etc.) that runs, and creates output that in HTML format. let's look at a simple CGI program using the shell:

#!/bin/bash

echo "Content-type: text/html\n\n"

echo "<HTML>\n"

echo "<HEAD><TITLE>My First CGI Script</TITLE></HEAD>\n"

echo "<BODY> \

<H1>About my first automatically generated HTML code \

</H1>\n"

echo "<HR/>\n"

echo "This is a really cool way to generate HTML<br/>"

print "</BODY></HTML>\n"

exit (0)

|

Putting this in a file, changing it's mode, and then pointing your web browser to it will cause it to run. The output is sent back to the web browser. CGI gets pretty complex and this is by no means a CGI tutorial, but simple CGI can be very effective in delivering information to the user.

A directive "ScriptAlias" indicates where CGI scripts can be placed in the directory hierarchy. In the case of the example below, the scripts are placed in the /var/www/widgets.co.za/cgi-bin directory.

ScriptAlias /cgi-bin/ "/var/www/widgets.co.za/cgi-bin/"

<Directory "/var/www/widgets.co.za/cgi-bin">

AllowOverride None

Options None

Order allow,deny

Allow from all

</Directory>

|

Scripts placed anywhere else will simply not be allowed to be run - for obvious reasons. While CGI is still in wide use, a number of new languages have come of age. Specifically Personal Home Page (PHP) has become a very popular web authoring tool.

There is so much configuration that can be done to Apache, and taking some time to consider these options certainly does not cover it in any significant detail, but it is a start.

[1] don't get confused between the DocumentRoot and the real root directory. Here, we are referring to the real file system root directory.

Table of Contents

- Introduction

- Our sample network

- A review of some theory on domains and sub-domains

- DNS - Administration and delegation

- Name resolution

- Caching replies

- Reverse queries

- Masters and slaves

- Configuration of the Master Server

- The named configuration file

- Error messages

- Starting the DNS Server

- Troubleshooting zone and configuration files

- Configuring your resolver (revisited)

- The nameserver directive

- The search directive

- The domain directive

- The sortlist directive

- Master server shortcuts

- The $ORIGIN directive

- The @ directive

- The $TTL directive

- Configuring the Slave Name Server

- Research Resources:

This module will walk you through the configuration of a DNS name server. In the Linux world, DNS is generally maintained by the BIND software, which is currently in it's 9th revision. There are, of course other DNS server software out there, but since almost the entire Internet uses BIND, and it has been the most long-serving DNS server, we will focus on BIND in this course.[2]

Before we being diving into configuring BIND, we need to review a couple of topics that you may or may not know about. We will also need to sketch the setup of our private network to make understanding the structure of our DNS simpler.

In your class, (or even if you're studying this course at home) you may not have all the machines listed in this sample network layout. don't worry. Neither did I. You see, getting something listed in the DNS does not necessarily mean the machine has to be present physically, since DNS is merely a service that will translate IP addresses to names or visa versa.

There are many domains on the Internet that have been reserved, but are currently not in use. In this module, we're going to create a fictitious domain, and while we're about it, we'll create a couple of fictitious machines too. The minimum number of machines you will need to get this course to work is 2, which is the minimum required in the course specification.

Figure 2.1 below illustrates the proposed configuration of our sample network. You should see that some of the hosts are multi-homed. Multihomed hosts are those that are connected to two networks simultaneously.

The Internet is comprised of domains, organized into a hierarchical structure. At the top of this hierarchy is the root domain. In design, the domain hierarchy is similar to the UNIX/Linux file system structure.

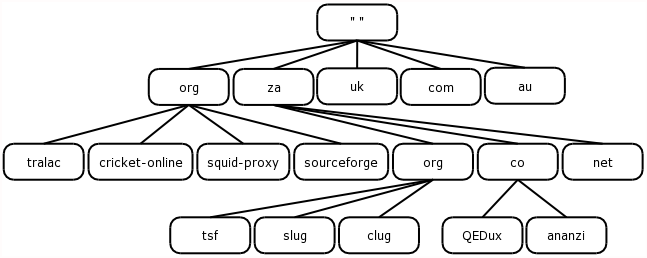

Figure 2.2 illustrates a typical UNIX file system structure, while Figure 2.3 shows a comparative diagram of the domain name hierarchy.

As you may have guessed, a domain name is written from most specific part to least specific part. Thus, the domain QEDux.co.za has a host called www, and another called FTP, while the domain co.za had many sub-domains, of which QEDux is one.

Another may be pumphaus.co.za. So as we read the domain from left to right, we read from most specific to least specific, "za" being much less specific about a host than "www".

What is often not shown in a fully qualified domain name (FQDN) is the root node. Why?

Well the root node is actually "" (null) and the separator is the full-stop ( . ), so FQDN's should actually be written as:

"www"."QEDux"."co"."za".""

|

![[Note]](../images/admon/note.png) | Note |

|---|---|

Where I have included the quotes ("") purely as a means of showing the root node on the end. We would not really write a FQDN like this. It would instead be written as: www.QEDux.co.za | |

Notice that the trailing dot is dropped, as the root node is not shown.

Within our domain hierarchy, sibling nodes MUST have unique names. Take the "org" domain for example, at tier one of the domain hierarchy, there is an "org" sub-domain and some examples may include "tralac.org", "glug.org", while within the "za" sub-domain, we can once again have the "org" domain.

This time however, the sub-domain falls within the "za" domain, making "tsf.org.za" a distinctly different domain from "tsf.org". Similarly, "tsf.org.za" would be unable to have two sub-domains within their domain called "slug".

If one were to extrapolate back to the analogy of the UNIX/Linux file system, we are able to have two "sbin" directories, however one "hangs off" /usr, while the other "hangs off" the root directory ( / ).

To flog this horse, there are MANY hosts on the Internet, which have the name "www", but because they are all contained within their domains, our packets traversing the network will know which one we mean to make contact with.

OK, we have now reviewed domains and sub-domains, next we will deal with how to administer DNS?

Is there some person responsible for the Internet DNS as a whole? No. There is an organization that is responsible for administering the use of domain names (and IP addresses too), but they could not possibly be responsible to maintaining the DNS for the entire Internet.

Apart from being a completely unmanageable job, it would also be very prone to breaking due to this central point of failure.

As a result, there is a system of delegation. In much the same way that all the computers may belong to your school, or company, while only a couple of machines are delegated as your responsibility, DNS is controlled by ICANN (Internet Corporation for Assigned Names and Numbers), and authority for your domain may well be assigned to your organization, ICANN having little to do with it's administration.

In order to manage the DNS hierarchy, ICANN delegate responsibility for your domain to another authority. This may be your organization, or, if you're too small or lack the technical expertise, it may be your Internet Service Provider (ISP). In my case, for example, my ISP administers my DNS, not because I lack the technical know-how, but due to the small number of hosts I have on my network, it would be more effort than it would be worth.

let's look at an example. Supposing my company were large and I control my DNS, I could subdivide my domain further. Perhaps I want to add two new sub-domains. Since sales people never listen to technical people ;-), I decide that I would want two new domains:

sales.QEDux.co.za and

tech.QEDux.co.za

|

Now we could have two web servers serving information relating to the sales department and the technical department.

Each server could be called "www", without fear of a clash of host names.

Since we have authority over this domain, we can simply configure our DNS to handle the new sub-domains without having to contact ICANN again.

This raises an important but subtle issue: that of domains and zones. We are learning in this module how to configure a name server (BIND being the software offering this service).

Name servers have complete "knowledge about" and "authority over" the sub-domain they have jurisdiction over and this sub-domain is referred to as a zone.

Thus if I start a name server containing all records for the domain QEDux.co.za, my name server will be said to have authority over the QEDux.co.za zone.

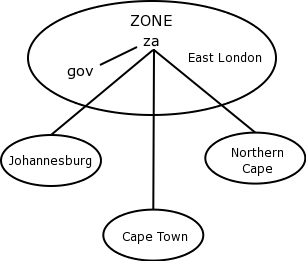

As a more complex example, the "gov.za" domain (which is actually a sub-domain of the "za" domain) may delegate authority for each of the regional governments. In this way, the City of Cape Town is responsible for their domain (capetown.gov.za) and the City of Johannesburg is responsible for their domain (joburg.gov.za).

Each of these regional governments have a "zone" for which they need to keep records. It would make no sense for the City of Cape Town to try to keep records for the City of Johannesburg. By delegating these responsibilities, the individuals and machines responsible for the "gov.za" domain only need keep records of who has responsibility for the capetown and joburg domains. They trust (rightly or wrongly) that Cape Town and Johannesburg will have the savvy to manage their own domain.

In sum then, a zone is a sub-domain for which a name server has a complete set of records (commonly these will be stored in a file on the name server).

What complicates this picture is the fact that some of the regional authorities may not have sufficient skills to maintain their own domain. In this case, the authorities responsible for the "gov.za" domain may administer this domain too. As a result, the zone for the "gov.za" will now include this regional information too.

The zone is thus not restricted to a domain. Figure 2.5 illustrates the zones for this hypothetical gov.za domain.

For the sake of completeness, we will review the process of domain name resolution.

Resolvers (the client side software) is, on the whole, not particularly intelligent. As a result of this, the name server must be able to provide information for domains for which they are authorities, and the name server should also have the ability to search within the name space (all the domains from the root) for information about hosts for which they are not authoritative.

No single name server could possibly hold records for every domain on the Internet. In order to have a point to begin searching the name space, there are a number of root name servers. These servers fulfill the role of containing information about who the authorities for particular domains are. In much the same was that the authority for the "gov.za" domain can not answer queries about what the IP address for host www.capetown.gov.za is, but instead passed the query on to the authority for the capetown.gov.za domain, the root name servers contain information about who to forward the query to.

In effect, the root servers contain only a relatively small number of hosts for which they know the answers (relative that is, when compared to the number or hosts and domains on the Internet as a whole). let's go on a virtual travel trip. Been to Antarctica lately? Go to http://www.aad.gov.au (and while we're there, head for ..... the webcam at www.aad.gov.au/asset/webcams/mawson/default.asp)

Perhaps your name server may have this domain in its local cache, but let's assume for now it doesn't. Since your name server has no knowledge of this domain (i.e. Your name server is non-authoritative for the aad.gov.au domain) it will request information on who the authorities are for the 'au' domain.

To do this at the command line is simple with the dig utility (or nslookup if you don't have dig).

Type:

dig NS au.

|

This should return the names of the servers who hold information about the Australian domains:

;; ANSWER SECTION:

au. 172511 IN NS NS.UU.NET.

au. 172511 IN NS MUWAYA.UCS.UNIMELB.EDU.au.

au. 172511 IN NS BOX2.AUNIC.NET.

au. 172511 IN NS SEC1.APNIC.NET.

au. 172511 IN NS SEC3.APNIC.NET.

au. 172511 IN NS ADNS1.BERKELEY.EDU.

au. 172511 IN NS ADNS2.BERKELEY.EDU.

|

Now query the gov.au domain.

dig NS gov.au.

|

which should return:

;; ANSWER SECTION:

gov.au. 86400 IN NS ns2.ausregistry.net.

gov.au. 86400 IN NS ns3.ausregistry.net.

gov.au. 86400 IN NS ns3.melbourneit.com.

gov.au. 86400 IN NS ns4.ausregistry.net.

gov.au. 86400 IN NS ns5.ausregistry.net.

gov.au. 86400 IN NS box2.aunic.net.

gov.au. 86400 IN NS dns1.telstra.net.

gov.au. 86400 IN NS au2ld.csiro.au.

gov.au. 86400 IN NS audns.optus.net.

gov.au. 86400 IN NS ns1.ausregistry.net.

|

These are the name servers that hold information about the gov.au domains. You will notice that at least one of these hosts (box2.aunic.net) is repeated in both lists. To relate this back to the query issued by your name server on your resolvers behalf:

It (the name server) would have asked one of the root servers for the authoritative name servers for the 'au' domain. That received, it would then ask one of the authoritative name server for the 'gov.au' domain.

Then again for the aad.gov.za domain, which would have produced:

;; ANSWER SECTION:

aad.gov.au. 84727 IN NS alpha.aad.gov.au.

aad.gov.au. 84727 IN NS ns1.telstra.net.

|

Now that your name server knows whom to ask for information about www.aad.gov.au, and the fact that this is the authoritative server for this domain, it can go ahead and ask it's question:

dig A www.aad.gov.za

|

which should return:

;; ANSWER SECTION:

www.aad.gov.au. 1556 IN A 203.39.170.21

|

Notice that I can leave off the trailing full-stop here. In fact, I could have done that from the start.

Furthermore, in the previous dig commands, we were querying for the name server (NS) records. Now, if we were to query www.aad.gov.za for its name server record, we would get nothing returned so instead, we query for the address (A) record for this host, and we get it.

While we are required to undergo this process for each new site we visit, if we had to do this for every site we revisit, it would be very cumbersome and slow.

Fortunately, the designers of DNS anticipated this and included a caching mechanism in the design of name servers. As a result, when we query a site for the first time, we would consult the root name server, then those for the 'au' domain, then the 'gov.au' domain, then the 'aad.gov.za' domain etc. But as these answers are returned, our name server caches them, just in case we ask again anytime soon.

Of course, we do, and now, voila our query is so much quicker since most (if not all) of the time consuming DNS querying work has been done already. we'll talk about caches later in the module when we configure our name server.

we've discussed the forward query process where the names are converted into IP addresses.

we've still not discussed the reverse process - that of converting IP addresses back into names. Why would we need this functionality? Well, in the simplest case, we may wish to record in the log files of our web server, the names of the hosts that are visiting our webcam site.

Since the Internet only really talks at the network layer, the web server will only get 'hits' from an IP address. This is not very handy for the poor web administrator. She'll simply have to give her boss a list of IP addresses of the hosts that visited, with little or no knowledge of where those visitors came from.

With reverse DNS mapping, the web server could simply look up the name of the visiting IP address and replace the IP by the name in its log files.

This would make reporting a whole lot easier and provide valuable information. let's use dig again, this time to convert an IP address into a name.

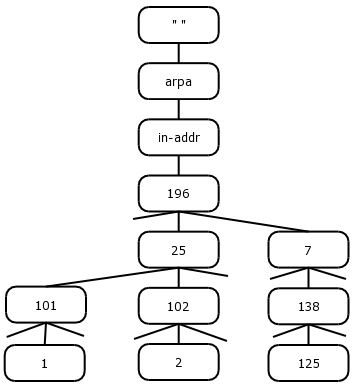

Reverse mapping is similar to name to IP mapping described above, except for the following differences:

The first tier domain is arpa, short for the Advanced Research Projects Agency, the people responsible for first conceiving and building the Internet.

The second tier domain is in-addr, short for Internet Address (IP address is the term we've used till now)

Consider the IP address 196.25.102.2.

Here there is a host ".2" on a network "196.25.102". As before, the 196.25.102 is the least specific entry in this tree (there could in fact be many hosts on this network), while the most specific entry would be the host address ".2".

Refer to the Figure 2.6.

As before, we can reverse map this using the dig command.

Begin by typing:

dig in-addr.arpa NS

|

which, should return all the root servers:

;; ANSWER SECTION:

in-addr.arpa. 84974 IN NS M.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS A.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS B.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS C.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS D.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS E.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS F.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS G.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS H.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS I.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS K.ROOT-SERVERS.NET.

in-addr.arpa. 84974 IN NS L.ROOT-SERVERS.NET.

|

Notice that they (the root servers) all know about the in-addr.arpa domain, and so they should!

Now try:

dig 196.in-addr.arpa NS

|

which, should yield:

;; ANSWER SECTION:

196.in-addr.arpa. 84987 IN NS henna.ARIN.NET.

196.in-addr.arpa. 84987 IN NS indigo.ARIN.NET.

196.in-addr.arpa. 84987 IN NS epazote.ARIN.NET.

196.in-addr.arpa. 84987 IN NS figwort.ARIN.NET.

196.in-addr.arpa. 84987 IN NS ginseng.ARIN.NET.

196.in-addr.arpa. 84987 IN NS chia.ARIN.NET.

196.in-addr.arpa. 84987 IN NS dill.ARIN.NET.

|

And again:

dig 25.196.in-addr.arpa NS

|

yielding:

;; ANSWER SECTION:

25.196.in-addr.arpa. 74403 IN NS igubu.saix.net.

25.196.in-addr.arpa. 74403 IN NS sangoma.saix.net.

|

And again:

dig 102.25.196.in-addr.arpa NS

|

yields:

;; ANSWER SECTION:

102.25.196.in-addr.arpa. 31112 IN NS \

quartz.mindspring.co.za.

102.25.196.in-addr.arpa. 31112 IN NS \

agate.mindspring.co.za.

|

Finally,

dig 2.102.25.196.in-addr.arpa PTR

|

yields:

;; ANSWER SECTION:

2.102.25.196.in-addr.arpa. 42951 IN PTR \

quartz.mindspring.co.za.

|

Why did we write the IP address in reverse here?

Well, it was not really in reverse, it followed the same convention as with the name, using the most-specific part of the name first, and the least specific part at the end (www.QEDux.co.za). Referring to figure 5 again, you've just done a similar thing as with the name, only now using the IP address and ending the name with "in-addr"."arpa".""

Due to the fact that DNS is so important in the workings of the Internet, it would make no sense to have only one name server per domain or zone.

As a result, DNS servers generally work in pairs - a master name server and a slave name server. Master name servers are, in fact, no more or less important than slave servers in their job or answering queries. Their primary difference is that the master server is the one that contains all the zone files. we'll get to set up a master shortly, so hang in there.

Since it would make no sense to maintain two copies of the zones files (one on the master and one on the slave), on startup, the slave server will do a zone transfer from the master. In effect, this obviates the need for maintaining two sets of files on two machines.

When the master server starts, it reads the zone files, and immediately begins answering queries. When the slave starts up, it transfers the zone information from the master, and only once it is complete, does it begin answering queries.

In the event that the master is not available when the slave starts up, BIND can be configured to read the zone file from a backup that was stored on the last zone transfer.

Of course, there has to be a timeout on the slaves information otherwise the slave could continue answering queries about a host long after the host has been reconfigured or decommissioned.

Right, enough theory, let's get down to it.

we'll begin by configuring the master server, and once that is working fine, we'll configure the slave.

BIND has two primary areas of configuration.

The file responsible for controlling BIND usually resides in /etc/named.conf. Within this file, the administrator can define where the zone files will reside.

I like to stick to a standard, and that is to keep all the zone files in /var/named. Of course you needn't follow me - that's entirely up to you!

Configuring BIND can be just that - a bind. So, I will try to highlight areas that will potentially be problematic.

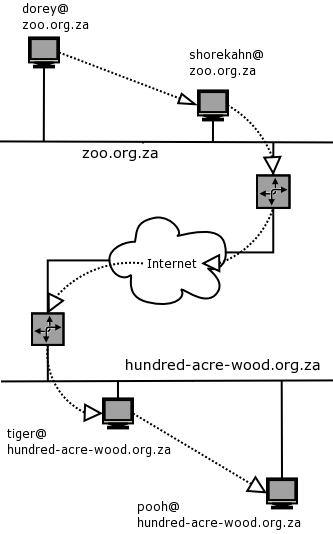

For the purpose of this course, I've decided we'll create a domain called zoo.org.za. For this purpose, I've repeated the network diagram we showed earlier again. Keeping it handy while reading this section will make your life a little easier.

The zoo will contain some of my favorite animated cartoon characters from some of my childhood movies [you will see Nemo, Marlin and Dorey here too - and from that you can draw your own conclusions!-)]

The structure of zone files is essentially the same irrespective of the zone you're configuring. A zone file will contain a set of records that pertain to that zone. These are called resource records.

We will obviously need a zone file for the name to IP address records and another for the IP address to name records. Then again, we need a set of zone files for each new zone we create.

Within our zoo.org.za domain, if we have a felines sub-domain (i.e. Felines.zoo.org.za) we'll need another set of zone files for this sub-domain. But I'm running ahead.

To begin, we'll create the forward zone files (name to IP address). we'll also do everything the 'long-winded' way now, and show you abbreviations at the end.

![[Important]](../images/admon/important.png) | Important |

|---|---|

Resource record begin in column 1 always - no spaces, tabs or other funny stuff or your zone file will not work. | |

This is for zoo.org.za.zone :

zoo.org.za IN SOA baloo.zoo.org.za. \

mowgli.zoo.org.za. (

2004022201 ; serial number

3h ; refresh

1h ; retry

1w; expire

1h ; minimum

)

|

The line begins with the domain we are responsible for - the start of our authority.

Notice how I have specified it in lower case. Case to DNS is unimportant, although it is preserved.[3]

This is an INternet (IN) record type. There are other record types, but for all practical purposes, you will probably only ever deal with Internet record types.

Since we are going to be the authority for this domain, this record is really telling all other name servers that this is our Start of Authority (SOA).

Next we specify the primary master name server for this SOA. In this case, baloo is the primary master name server for the zoo.org.za domain.

![[Important]](../images/admon/important.png) | Important |

|---|---|

You will notice that I have written the name as baloo.zoo.org.za. - a full stop after the za. I'll explain why shortly. ??????<xref to page 18>??????? | |

Then we specify the system contact for this domain. In place of the @ sign, we put a full stop, since @ in the zone file has special significance. So, in this example, mowgli@zoo.org.za is the contact for this zone. If you're having a problem with our zone, contact mowgli.

From here on, everything in the round brackets is merely information for the secondary name servers (the slaves). we'll explain more on the meaning of these when we set up the slave server.

The next entries relate to describing the name servers for this zone. Notice again that I include a full stop on the end of the FQDN for the two name servers.

You could of course have 3 or more name servers too, but let's not get too fancy.

zoo.org.za IN NS baloo.zoo.org.za.

zoo.org.za IN NS ikki.zoo.org.za.

|

Again these resource records are for the zoo.org.za domain. They are Internet records (IN) and they are the name servers (NS) for this domain.

Finally, we list the two name servers as baloo and ikki.

That done, we can now start describing the resource records for all the other hosts in our domain.

bagheera.zoo.org.za. A 192.168.10.7

sherekahn.zoo.org.za. A 192.168.10.16

baloo.zoo.org.za. A 192.168.10.1

ikki.zoo.org.za. A 192.168.10.43

|

Now, since this is the zone file for this domain, even having other IP addresses in this domain doesn't affect where we put these resource records.

tweetie.zoo.org.za. A 172.16.43.159

raksha.zoo.org.za. A 172.16.43.146

ikki.zoo.org.za. A 172.16.43.10

nemo.zoo.org.za. A 157.236.144.29

dude.zoo.org.za. A 157.236.144.101

marlin.zoo.org.za. A 157.236.144.93

ikki.zoo.org.za. A 157.236.144.1

|

Having defined our zoo.org.za zone file, we should be set to start our name server but remember that I said DNS does both forward lookups (name to IP address) AND reverse lookups (IP address to name). Since these are described in different 'trees' (see Figure 2.4), we still have not described the reverse zone file.

I like calling my reverse zone files as follows. If I'm describing the range 192.168.10.x then the file is called:

192.168.10.zone

|

In this file, we need to define a SOA record as before, with one slight modification.

Whereas previously we were describing the zone zoo.org.za, here we are defining the zone 10.168.192.in-addr.arpa. So my SOA for this zone file reads as follows:

10.168.192.in-addr.arpa IN SOA baloo.zoo.org.za. \

mowgli.zoo.org.za. (

2004022201 ; serial

3h ; refresh

1h ; retry (1 hour)

1w ; expire (1 week)

1h ; minimum (1 hour)

)

|

We need to define the name server records as well as individual resource records for each of the hosts in the forward mapping zone file. I've listed my entries below:

; This entry describes the reverse mappings \

in the 192.168.10.zone file

10.168.192.in-addr.arpa. IN NS baloo.zoo.org.za.

10.168.192.in-addr.arpa. IN NS ikki.zoo.org.za.

1.10.168.192.in-addr.arpa. IN PTR baloo.zoo.org.za.

7.10.168.192.in-addr.arpa. IN PTR bagheera.zoo.org.za.

16.10.168.192.in-addr.arpa. IN PTR sherekahn.zoo.org.za.

43.10.168.192.in-addr.arpa. IN PTR ikki.zoo.org.za.

|

What is different about entries in this zone file from those in the forward zone file described above is the fact that these are pointer (PTR) records instead of address (A) records as before.

We can create similar files for the 157.236.144 network and the 172.16.43 network. I'll leave those to you as an exercise.

Now that we have all our zone files we're ready to begin serving name requests.

Well, not quite . . .

We need a couple of additional zone files. By default, every host on the Internet needs an address of 127.0.0.1. This is the interface referred to as the loopback.

To look at the loopback on your host using the command:

ifconfig lo

|

The loopback is used by applications on the local machine to talk to other applications locally. In order for this to continue to work uninterrupted, you will need to include two additional zone files. They are generally included as part of the BIND install, but if not, copy them from the files named.local and 127.0.0.zone as part of this course.

The last zone file is the root zone file. (boy this DNS configuration is really long-winded!).

We may need an updated root zone file as the one shipped with BIND may be a little outdated. It's your responsibility as the DNS administrator to keep this file up-to-date.

In the default install of BIND it should be called something like named.ca or root.ca, which should be sufficient to get us going.

Now that all our zone files are configured and ready, we need to modify the main named configuration file - named.conf. This usually resides in the /etc directory.

There are many options we can include in this file, but for now, we'll restrict ourselves to those options that will ensure we get a name server running ASAP.

The options directive specifies global options for the name server. Entries will include parameters such as the directory containing your zone files, specification of the forwarder, whether to forward first or forward only, etc.

Then, for every zone we are responsible for, we need a zone entry, and zone entries take the form:

zone "domain_name" [ ( in | hs | hesiod | chaos ) ] {

type master;

file path_name;}

|

Of course, there are many more options that comprise the zone statement, but I've deliberately left them out as I don't want to complicate life too much right now.

Translating this syntax into a practical example:

zone "zoo.org.za" IN {

type master;

file "zoo.org.za.zone";};

|

![[Important]](../images/admon/important.png) | Important |

|---|---|

Remember to finish each directive and parameter with a semi-colon (;). If you don't you'll experience problems that are often difficult to detect. | |

The rest of the zones are similar in fashion to the one show above, but I'll include them here to illustrate the reverse mapping named.conf entries.

zone "10.168.192.in-addr.arpa" IN {

type master;

file "192.168.10.zone"; };

|

Now you will need to add the extra zone entries for the other networks on your pretend network. Remember to include entries for the loopback zone and the root zone as I have included them below.

Zone "." IN {

type master;

file "named.root"; };

zone "localhost" IN {

type master;

file "named.local"; };

zone "0.0.127.in-addr.arpa" IN {

type master;

file "127.0.0.zone"; };

|

A point worth noting here is that the root zone, is represented as a full stop ( . ), since the real representation of "" would look a little confusing.

I would really suggest that you keep an eye on your syslog or messages file in /var/log, as this will spit out the errors when or if they occur.

You can even run bind in the foreground so that errors are sent to the console instead of to a log file.

/usr/sbin/named -g -d 1 -c /etc/bind/named.conf

|

This will start named in the foreground, spitting all error/log messages to the console.

Okay folks. we're ready to start our DNS server now and starting named is quite simple.

/usr/sbin/named -g -d 1 -c /etc/bind/named.conf

|

This will start named in the foreground, spitting all error/log messages to the console.

Now our server is read to answer queries about our domain, so let's ask some using dig.

dig @my-DNS-server-IP-Address ikki.zoo.org.za A

dig @my-DNS-server-IP-Address nemo.zoo.org.za A

dig @my-DNS-server-IP-Address -x 157.236.144.81

dig @my-DNS-server-IP-Address panther.zoo.org.za

|

Your DNS should be capable of resolving these without any problems.

Humph. It did not work, so where to from now?

There are a number of BIND tools that you can use, some of which I'll review later.

However, right now you have a problem. Your DNS is not starting up or, if it is, it's not answering the questions properly. BIND comes bundled with a couple of useful utilities.

Most notably the named-check{zone,conf} syntax checkers which, will by no means pick up every problem in your named.conf file or your zone files, but they will assist you in isolating the really obvious ones.

So, let's run named-checkconf on our /etc/bind/named.conf file:

/usr/sbin/named-checkconf -t /etc/bind

|

By default, this utility will check the named.conf file. It will spew out errors if you have any, otherwise it will be completely silent and just return you to your prompt.

Then we need to check our zone files using the named-checkzone utility.

/usr/sbin/named-checkzone -d \

10.168.192.in-addr.arpa 192.168.10.zone

|

which, should return:

loading "10.168.192.in-addr.arpa." \

from "192.168.10.zone" class "IN"

zone 10.168.192.in-addr.arpa/IN: loaded serial 2004022301

OK

|

If there are problems in your zone files, this should catch the majority of them.

You will have noticed that each time we do a lookup on a name we need to type the entire name.

Now we don't necessarily want to do this each time, as it expends valuable carpel energy!!!! For a simpler lookup, we can modify our /etc/resolv.conf file to accommodate the domain. The domain option in the resolv.conf file is not absolutely necessary, since the nslookup and the dig commands will use the hostname, remove the domain part and use this to look up the FQDN.

Thus:

nslookup ikki

|

would look up:

ikki.zoo.org.za

|

When using dig, the syntax is slightly different:

dig +search ikki

|

The search option will use the searchlist or the domain directive in the resolv.conf file, in a similar way to nslookup.

How do we get this functionality?

The resolv.conf file has a number of directives that may be used to speed up or change the way lookup answers are returned.

This directive defines the name server that will be used to do DNS queries. There can be up to MAXNS records in this file.

![[Note]](../images/admon/note.png) | Note |

|---|---|

MAXNS is a constant that is set in the C header include file for the resolver. To see how many MAXNS is on your host, look in the resolv.h header file. This file resides in /usr/include on my system, but your mileage may vary depending on your version of Linux. MAXNS is set to 3 for me. | |

The reason for having more than a single entry is for some degree of redundancy/load sharing.

In addition, an option "rotate" may be used which will rotate the use of the nameserver directive should there be more than one entry.

This directive allows the resolver to search through the list of defined domains in order to do a name lookup. An entry may look as follows:

search zoo.org.za co.za com

|

Thus:

dig +search ikki

|

will try name lookups as follows:

ikki.zoo.org.za

ikki.co.za

ikki.com

|

The first to match will be returned. Note though, that this can be slow and network resource hungry, especially if the name server is remote to your client. So, if you are dialing up at 9600 baud, don't set this option [believe it or not, we here in South Africa, still have some people dialing up at this archaic speed!]

The domain directive and the search directive are mutually exclusive. The domain directive will be used by appending it to the hostname specified on the command line.

Typing:

dig +search ikki

|

with the domain directive set to QEDux.co.za, will always look up the name:

ikki.QEDux.co.za

|

This is less resource intensive than the search command and you are ill advised to use both of these directives in your file. Read resolv.conf(5) for details on these directives.

In the case of our DNS records, ikki.zoo.org.za has 3 different entries in the DNS, since it is multihomed. Which one should be returned?

Suppose for a minute that I am on the 157.236.144 network. Clearly, I don't want the 192.168.10 ikki address to be returned at the top of the list when doing a query.

Similarly, I would be unwise to have the 172.16.43 address returned at the top of the returned addresses. For this reason, the sortlist directive will sort returned answers according to a specified set of criteria.

My sortlist directive is set as follows:

sortlist 157.236.144/255.255.255.0 172.16.43/255.255.255.0

|

This should solve the problem. Since each of these directives can be set on a per host basis, they needn't be the same on every host.

In sum then, my resolv.conf file will look as follows:

; just to complicate matters, there is NO full stop on the end

; of the domain directive. Semicolons on this file are taken to

; be comments.

domain zoo.org.za

sortlist 157.236.144/255.255.255.0 172.16.43/255.255.255.0

nameserver 192.168.10.43

nameserver 196.7.138.45

nameserver 66.8.48.243

options rotate

|

Now that we've configured and run our master server successfully, it's time to create some shortcuts.

The zone directive in the named.conf file specifies the domain for this zone. In our examples, we had zone statements as follows:

zone "zoo.org.za" IN {

type master;

file "zoo.org.za.zone";

};

or

zone "144.236.157.in-addr.arpa" IN {

type master;

file "157.236.144.zone";

};

|

Within our zone files, we had entries as follows:

baloo.zoo.org.za. IN A 192.168.10.144

bagheera.zoo.org.za. IN A 192.168.10.7

sherekahn.zoo.org.za. IN A 192.168.10.16

|

Earlier, we stressed the importance of not leaving off the full stop on the end of the FQDN in the zone files. Now we can explain why.

When BIND uses these files to answer a DNS query, it appends the domain name onto the end of the name returned if and only if, there is NO full stop on the end of the name.

Thus, setting up the zone file as follows:

baloo.zoo.org.za IN A 192.168.10.144

bagheera.zoo.org.za IN A 192.168.10.7

sherekahn.zoo.org.za IN A 192.168.10.16

|

A query for sherekahn.zoo.org.za will return sherekahn.zoo.org.za.zoo.org.za, since the trailing full stop has been omitted. Not quite what we had in mind!

Now, we can modify our zone files as follows (for the zone zoo.org.za):

baloo IN A 192.168.10.144

bagheera IN A 192.168.10.7

sherekahn IN A 192.168.10.16

bear IN CNAME baloo

panther IN CNAME bagheera

|

or our reverse zone files as follows (for the zone 144.236.157.in-addr.arpa):

112 IN PTR nemo.zoo.org.za.

92 IN PTR dude.zoo.org.za.

81 IN PTR marlin.zoo.org.za.

|

Since the domain is specified in the zone directive of the named.conf file, we are able to omit the full specification. Note here that if we wish to specify resource records in this format, we will need to omit the domain as well as the ending full stop.

The most useful of the directives is the $ORIGIN directive.

This directive can be placed at the beginning of a section in the zone files, which shortens both the amount of typing you need to do to set up the DNS and makes it a lot easier to read.

The $ORIGIN persists until another $ORIGIN directive is encountered.

By default, in the absence of a $ORIGIN directive, the zone directive in the named.conf file specifies the domain name.

An example shown below illustrates the use of $ORIGIN:

$ORIGIN zoo.org.za.

Bagheera A 192.168.10.7

baloo A 192.168.10.1

bear CNAME baloo

or

$ORIGIN 43.16.172.in-addr.arpa.

10 PTR ikki.zoo.org.za.

146 PTR raksha.zoo.org.za.

|

This could be used when we create sub-domains.

Initially, we could start with a domain zoo.org.za, and within this zone file, change the ORIGIN to felines.zoo.org.za

Another shortcut is using the @ instead of the domain name.

If the domain name is the same as that specified in the zone directive, then simply using the @ will be equivalent to the domain.

If the zone is "43.16.172.in-addr.arpa", then we could represent this in the zone file as:

@ IN SOA baloo.jungle.org.za. mowgli.jungle.org.za.\

(

2004022406 ; serial

10800 ; refresh (3 hours)

3600 ; retry (1 hour)

604800 ; expire (1 week)

3600 ; minimum (1 hour)

)

NS ikki.zoo.org.za.

NS baloo.zoo.org.za.

|

where the @ is used to refer to the zone.

Notice too that for the NS resource records, there is no domain specified. This is because the domain is inherited from the previous entry (which in this case was the @, which in turn was "43.16.172.in-addr.arpa").

The NS resource records could have been written as:

43.16.172.in-addr.arpa NS ikki.zoo.org.za.

43.16.172.in-addr.arpa NS baloo.zoo.org.za.

|

Or alternatively as:

@ NS ikki.zoo.org.za.

@ NS baloo.zoo.org.za.

|

The time to live is the amount of time a name server can cache a record. Cached for too long and the data may become stale. Cached for too short a time and the zone transfers will consume network bandwidth. Clearly we need to set an optimum time for an entry to remain in the cache. Remember too that both positive and negative replies will be cached, but both need a time to live.

There are a couple of places to set the time to live. In the SOA resource record, the last entry specifies the TTL. This is the time to keep NEGATIVE responses to a query.

Then there's the $TTL variable, which can be set at the top of the zone file.

$TTL 3h

|

would allow records (both positive and negative answers) on the slaves to be cached for a maximum of 3 hours.

Finally, each resource record entry can contain a time to live. This will for only that entry to the timed out sooner or later. One of the uses of this is when you know a host will be changing networks. Thus, 3 hours may be a little long before updating the slave servers.

One could easily add an entry as follows:

dude 1h IN A 157.236.144.92

; explicit make the TTL of this RR 1 hour

|

we've spent a great deal of time configuring the master server. Now that it's up and running, let's turn our attention to the slave name server(s). In a network, we can only have a single master server, but we can have multiple slaves.

The larger ISP's will, on the whole, have many slaves to ease the load across the network, which could potentially be a bottleneck.

As mentioned previously, the slaves are in no way less important than the masters, and slaves in their own right can be masters of a different zone. In this way, one master can be the slave of a master from another zone.

The primary difference between the master and the slave however is the fact that slaves get their information from masters by the process of zone transfers. we'll see zone transfers occur as soon as our slave is going.

Slaves are really easy to configure, since they don't need any zone files. Well, not quite. They do require zone files for the localhost and 127.0.0 zones. Since these files are standard across all name servers, and since it makes little sense to transfer this zone between one name server and the next, they should be present on the slave. Again, they will probably reside in /var/named (on Debian they are in /etc/bind/).

We will also require an /etc/named.conf (on Debian again that's in /etc/bind/named.conf).

For the other zones, the slave will transfer them from the master automatically.

The primary differences in the layout of the named.conf files is as follows:

Slave name servers do not have:

type master;

|

in their zone entries but rather

type slave;

|

Slave servers specify a slightly different name for the zone files they draw from the master, although this is not a prerequisite.

file "bak.172.16.43.zone";

|

or

file "zoo.org.za.backup.zone";

|

A masters keyword indicate who the master server is for this zone:

masters { 157.236.144.1; };

|

So a zone entry for the zoo.org.za zone would look as follows:

zone "zoo.org.za" {

type slave;

file "zoo.org.za.backup.zone";

master { 157.236.144.1; };

}

|