
Copyright © 2004 The Shuttleworth Foundation

Unless otherwise expressly stated, all original material of whatever nature created by the contributors of the Learn Linux community, is licensed under the Creative Commons license Attribution-ShareAlike 2.0.

What follows is a copy of the "human-readable summary" of this document. The Legal Code (full license) may be read here.

You are free:

to copy, distribute, display, and perform the work

to make derivative works

to make commercial use of the work

Under the following conditions:

Attribution. You must give the original author credit.

Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one.

For any reuse or distribution, you must make clear to others the license terms of this work.

Any of these conditions can be waived if you get permission from the copyright holder.

Your fair use and other rights are in no way affected by the above.

This is a human-readable summary of the Legal Code (the full license).

2005-01-25 22:20:35

| Revision History | |
|---|---|
| Revision 0.0.1 | 01/NOV/2004 |
| Initial version | |

Table of Contents

- 1. Introduction and History

- 2. Booting

- 3. Logging in

- 4. The Kernel versus Process Management

- 5. Memory Management

- 6. Drivers

- 7. System Tuning

- 8. Tips and Tricks

- A. References

- Index

List of Figures

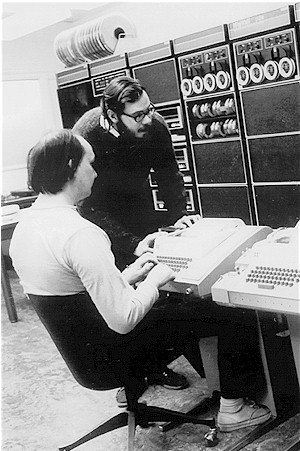

- 1.1. PDP 7 with teletypewriter

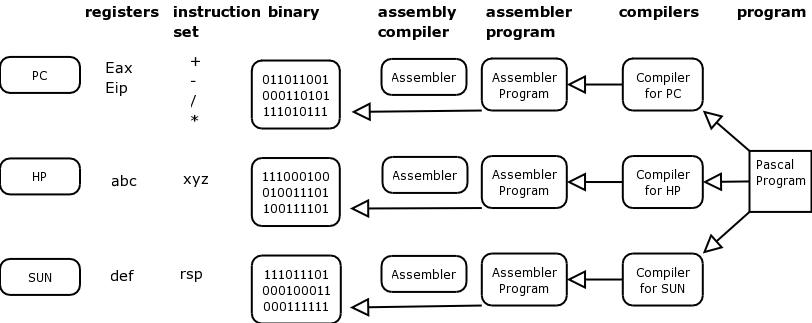

- 1.2. Relationship between hardware, assembler and a compiler

- 1.3. Dennis Ritchie and Ken Thompson working on a PDP-11.

- 1.4. Professor Andy Tanenbaum

- 1.5. Linus Torvalds

- 1.6. Tux, the Linux mascot

- 1.7. The BSD mascot

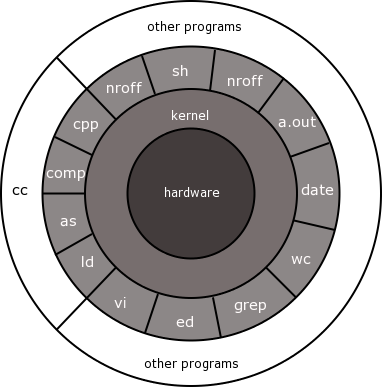

- 1.8. Operating System Layers

- 1.9. Bootloader in memory

- 1.10. Kernel loaded into memory

- 1.11. Kernel memory and User Memory

- 1.12. Kernel Memory, table and buffer cache allocations

- 4.1. The Layers of the Operating System

- 4.2. Separate fields are interpreted by the shell

- 4.3. Process Life Cycle

- 4.4. The Scheduler

- 4.5. Round-Robin Scheduling

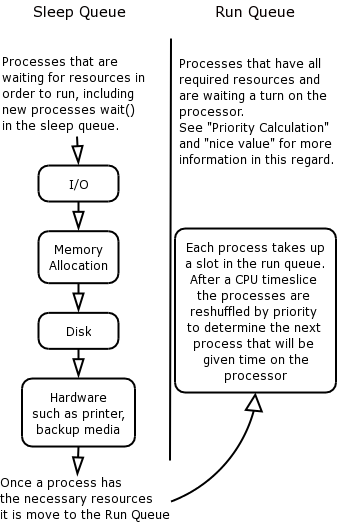

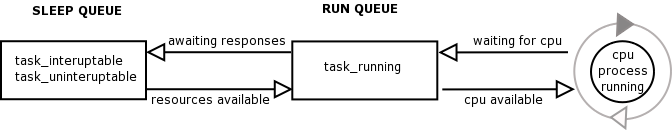

- 4.6. Sleep Queue and Run Queue

- 4.7. Multitasking flow

- 4.8. Time-slicing

- 5.1. Kernel Memory, table and buffer cache allocations

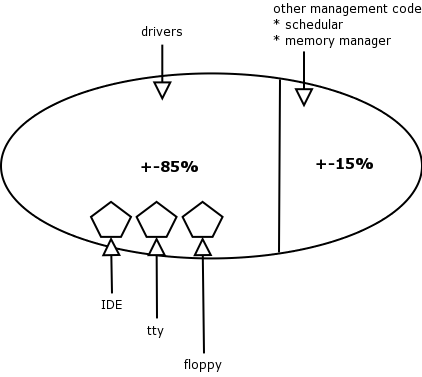

- 6.1. Kernel Binary

- 6.2. Loadable modules

- 6.3. Block and Character Device Drivers

- 7.1. Let us look again at the sleep queue

- 8.1. Hard disk partitions

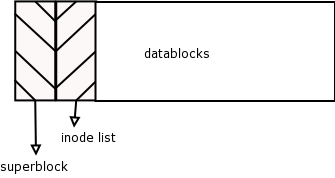

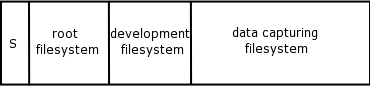

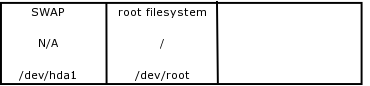

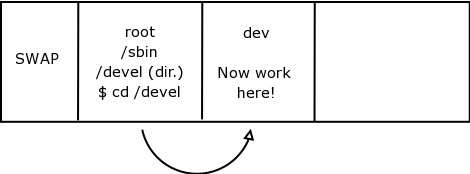

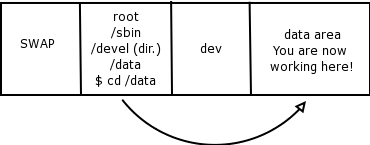

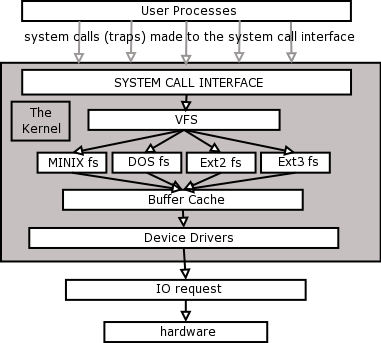

- 8.2. Generic Unix Filesystem Support

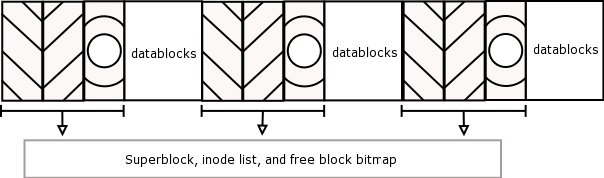

- 8.3. Inode List

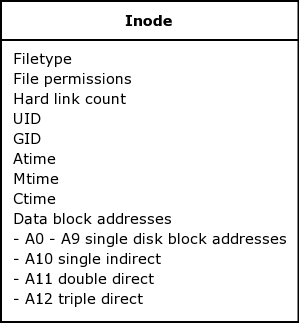

- 8.4. Free Blocks Bitmap (Extension to Inode List Figure)

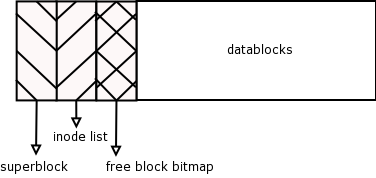

- 8.5. Block Groups

- 8.6. Filesystems

- 8.7. Mounting filesystems

- 8.8. /dev/hda3 - Where are you working now?

- 8.9. /dev/hda4 - Where are you working now?

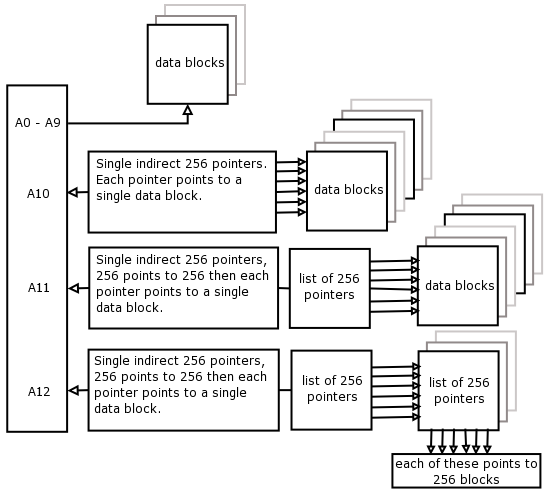

- 8.10. Filesystem Structure

- 8.11. Datablock addressing in the inode

- 8.12. The Virtual Filesystem

List of Tables


This part of the course is intended to bridge the gap between the courses that you have already attended and the more advanced, internals-based knowledge required to truly understand the advanced topics and to support a UNIX or Linux system.

Please note that this is a course of technical concepts written in simple terms to help you understand how the internals of the operating system hang together. It is intended to assist with the administration of the operating system itself, and the theory can be applied to both the UNIX and the Linux operating systems.

There are many good books written about the in-depth internal functions of Unix and of Linux, and I do not want to re-invent that particular wheel. What I wish to achieve is an advanced course that shows the internal workings of the system in an approachable fashion, so that anyone can use this course to learn.

Throughout the course there are sections that were compiled in conjunction with Sebastian Spitzner.

After teaching Unix and Linux for many years, he feels that this method of lecturing is the most approachable way of explaining the lower-level information without becoming too entrenched in the "nitty-gritty" details, such as the hex addresses in memory where the kernel keeps its various data structures, which are of little practical use.

Take some serious time to read through and understand the history lecture. It has been structured to give you a fuller understanding of the roots of the Unix and Linux operating systems.

Unix has managed to influence every operating system available today.

It seems that most of the people who want to work with Linux, or who already do, do not know the history of the operating system. As you will see, knowing it will give you a greater understanding of the software.

In short, Linux is an operating system based on UNIX (developed by AT&T's Bell Labs division), which is in turn based on MULTICS.

The following timeline will explain the main events that have affected the UNIX family of operating systems, of which Linux is one.

We pick up our history in the 1950s, when the first important event that affected UNIX took place.

Note: TTYs and line-oriented text display, which were the general input and output devices of the PDP-7

The term "tty" stands for "teletypewriter", which was an early form of terminal.

Teletypewriters, such as the one shown in the picture of the PDP-7 (Figure 1.1), were merely automatic typewriters producing hard-copy line-based output on continuous paper.

In these early days of computing, this kind of terminal output did not allow screen or cursor-based programs to function.

Hence the first text editors were "line-oriented", such as "ed" and later "ex". "Vi" was developed later, based on "ex", and was screen-oriented. It used the redrawable ability of cathode ray tube (CRT) displays to show text one screen at a time.

The US government passed a decree imposing a restraint of trade against AT&T. The company was not permitted to make money from non-telecommunications business.

This is significant, because until 1982 (when the US Government finally broke up the AT&T telecommunications monopoly into smaller companies), AT&T could not sell operating systems, i.e. UNIX, for profit.

This had a great impact on the distribution of Unix as you will see throughout the rest of the History section, as AT&T chose to use the product internally first, and then distributed it to computer research institutions such as Universities.

The MULTICS project was a joint attempt by General Electric (GE), AT&T Bell Labs and the Massachusetts Institute of Technology (MIT) at developing a stable multiuser operating system.

The aim was to create an operating system that could support a lot of simultaneous users (thousands!).

Multics stands for Multiplexed Information and Computing Service.

The people involved in the project at this time were Ken Thompson, Dennis Ritchie, Joseph Ossanna, Stuart Feldman, Doug McIlroy and Bob Morris.

Although a very simple version of MULTICS could now run on a GE-645 computer, it could only support 3 people, and therefore the original goals of this operating system had not been met. The research and development was proving very expensive, and Bell Labs withdrew its sponsorship. This meant that the other interested parties could not afford to carry the project on their own, and so they also withdrew.

Dennis Ritchie and Ken Thompson now decided to continue this project on their own.

Ken Thompson and Dennis Ritchie wrote a Space Travel game that was actually a serious scientific astronomical simulation program. However, the game was a disaster, as the spaceship was hard to maneuver and the program used a lot of resources to run.

After developing the Space Travel program they had learnt a lot more. With Canaday involved as well, they were able to create the design for a new file system, which they built on a PDP-7. The resulting system was called UNICS (Uniplexed Information and Computing Service), and this later became UNIX.

A note to UNIX traditionalists: We use the spelling "Unix" rather than "UNIX" in this course only for the sake of readability.

They attempted using a Fortran program to further develop Unix, but they found that it was not what they were looking for and so they turned to BCPL (Basic Combined Programming Language).

B was developed from BCPL, and it was the first high-level language to be used on Unix, on a PDP-11/20.

Let's draw a diagram of three different machines and then take a look at why developing in assembler is not always the best idea:

Remember that all a computer actually does is mathematics.

An operating system is a "resource allocator" and a "controller of operations" program.

When computers first started becoming popular we had to use punch cards or load the programs directly into memory manually.

Assembler is a symbolic form of machine code and is specific to the machine type and hardware that you are working with. An instruction written for one machine cannot work on another machine at this low level.

A computer has registers and an instruction set, and the instructions are binary coded. An assembly program addresses the machine in assembler, which is translated to binary code.

So, if you wrote a program for a PDP-7 in assembler and then wanted to move it to a PDP-11, you would have to rewrite the entire assembler program, this time to suit the machine and hardware type of the PDP-11.

To remedy this, developers invented compilers for application programming languages. In other words, if you were developing in Pascal, the Pascal compiler for a PDP-7 would translate your program into an assembly program and then into machine code for a PDP-7.

To port that program to a PDP-11, you would get the Pascal compiler for the PDP-11 and recompile the original program on the PDP-11. It would then work as above.

This explains why higher-level languages such as Pascal and Fortran started being used. They provide a layer between the program and the assembler. A compiler is needed for each specific machine.

These days a compiler automatically generates the assembler code.

So, the first Unix was written in the assembler of a PDP-7 machine. As we have now discussed, though, this was not going to make it easily portable to another type of architecture.

At this stage, because of the success of Unix, Bell Labs chose to re-sponsor the project.

B was still considered too slow, so the team worked to develop Unix in a faster language called New B, or NB. They could now also afford to upgrade to a later model of the PDP range, the PDP-11/45.

The C programming language was developed in 1972 as a result of Ken Thompson and his team wanting a language to write Unix in. Although Ken Thompson worked with C initially, the team eventually needed more functionality, which Dennis Ritchie then added.

It was also at this time that Unix "pipes" were developed, and this is seen as a milestone because of the power it added to the system. [1]

Unix now had its own language and philosophy. Its power was generated by stringing programs together, not by any one individual program.

A quote from "A Quarter Century of Unix" by P. Salus states:

Write programs that do one thing and do it well.

Write programs that work together.

Write programs that handle text streams, because that is a universal interface.

More and more requests were coming in to AT&T to allow other companies and users to use the Unix system.

At this stage Unix was firmly entrenched at universities and colleges, and AT&T's refusal to supply bug fixes and support for the system forced users to work together. (This was the start of the Unix user groups.)

Inside AT&T, Unix had been sold as a text processing system. There the developers and users were the same community, and so the developers got direct feedback on new products, bugs and so on. Support was right there in the same company, maybe even on the same office floor.

Because research organizations at universities used the system, bright varsity students were drawn into this type of company after their studies. This was beneficial to the research organizations, and they continued to give the system to students.

Unix is still used these days to teach students computer science.

The US patent office held the rights at this stage.

There are now 500 installations throughout the United States, mainly at Universities.

After 1974, military and commercial enterprises started demanding licenses to use Unix, and AT&T decided to close the source and supply only binary distributions.

Berkeley (UCB) did a lot of development on DARPA TCP/IP (bright brains for a good price), and the students also started adding various other utilities, ultimately deciding to write Unix from scratch (BSD Unix).

BSD3 utilities are available in System V Unix. When installing the operating system you should be asked whether you would like to install the BSD utilities; they will be installed into the /usr/ucb directory.

Unix is ported to an IBM 360, an Interdata 7/32 and an Interdata 8/32, proving that Unix is portable to systems other than those manufactured by DEC.

1978 "The C Programming Language" by Kernighan and Ritchie is published.

1978 Bill Joy creates the "vi" editor, a full-screen editor, and at the same time he sees the need "to optimize the code for several different types of terminals, he decided to consolidate screen management by using an interpreter to redraw the screen. The interpreter was driven by the terminal's characteristics - termcap was born" (P. Salus).

All other Unixes branch from these two variants of the Unix code, AT&T Unix and BSD Unix. (See timeline below.)

The release of AT&T Version 7 was the start of many of the Unix ports, the 32-bit ports and a product called Xenix (an SCO and Microsoft joint product, and the first Unix port that could run on an 8086 chip).

By 1980, AT&T found that the operating system was a viable option for commercial development. Microprocessors were becoming very popular, and many other companies were allowed to license UNIX from AT&T. These companies ported UNIX to their machines. The simplicity and clarity of UNIX tempted many developers to enhance the product with their own improvements, which resulted in several varieties of UNIX.

From 1977 to 1982, Bell Labs combined features from the AT&T versions of UNIX into a single system called UNIX System 3.

Bell Labs then enhanced System 3 into System 4, a system that was only used internally at Bell Labs.

After further enhancements, System V was released and in 1983, AT&T officially announced their support for System V.

1982 Sun developed the Sparc processor, licensed BSD Unix and called it SunOS.

1983/4 Sun then licensed AT&T System V, made their changes and called that version Solaris. There is a lot of cross coding, and it is interesting to note that the "uname" command (which supplies details of the current operating system) still reports SunOS as the operating system when run on Solaris.

1985 - Some quotable quotes - "Xenix is the operating system future" and "640 KB memory is enough for anyone"

In 1989, AT&T arranged for System V, SunOS, XENIX and Berkeley 4xBSD to be combined into one product called System V Release 4.0. This new product was created to ensure that there was one version of UNIX that would work on any machine available at that time.

The different versions of UNIX prompted AT&T to form a UNIX International Consortium. The aim of this consortium was to improve the marketing of UNIX, since the market was starting to demand clarity on standardizing the product.

By 1992, UNIX was readily available on an Intel platform, providing mainframe-type processing power on a PC. This made UNIX appealing to the end-user market.

Table 1.1. Major vendors/ hardware and related operating systems.

| Vendor | Hardware | Operating System (Unix based) |
|---|---|---|
| HP | PA-RISC | HP-UX |
| IBM | RS/6000 / PowerPC | AIX |
| Digital / DEC / Compaq | Alpha | Digital Unix |
| NCR | DG-UX | |
| SCO | Intel PC Compatible | SCO Xenix, SCO Unix, SCO Open Server 5, UnixWare 7 |

Source code has changed hands a few times:

| Year | Owner of source code |
|---|---|
| 1969 | AT&T |
| 1993 | Novell |
| 1995 | SCO |
| 2001 | Caldera, which started trading under the name "The SCO Group" in 2002 |


1985 Professor Andy Tanenbaum wrote a Unix-like operating system from scratch, based on the System V, POSIX and IEEE standards. Called MINIX, it ran on i386 Intel PCs and was aimed at university computer science research students.

MINIX was also bundled with a popular computer science operating system study book by that author. Although the operating system was free, the book had to be purchased.

A Finnish student called Linus Torvalds first came into contact with Unix-like systems through his use of MINIX while studying Computer Science at the University of Helsinki, Finland.

Linus Torvalds wanted to upgrade MINIX and put in features and improvements, but Andrew Tanenbaum wanted MINIX the way it was, and so Linus decided to write his own kernel.

He released Linux on the Internet as an Open Source product and under his own license and then later in 1991 under the GPL.

"If you want to travel around the world and be invited to speak at a lot of different places, just write a Unix operating system."

The FSF (Free Software Foundation) was started by Richard Stallman as a development effort to promote the use of Free Software. Stallman recognized the need to write a free and open source Unix-like operating system, so that people could have a Unix system under a non-proprietary, non-restrictive commercial license.

The FSF started a project called GNU to fulfill this aim. GNU stands for "GNU's Not Unix" (a recursive acronym).

By 1991 GNU had already amassed a compiler (GCC, the GNU C Compiler) and a C library, both very critical components of an operating system, as well as all the associated generic Unix base programs (ls, cat, chmod etcetera).

They were missing a kernel, which was going to be called the GNU HURD (as of April 2004, HURD is not yet complete).

The FSF naturally adopted the Linux kernel to complete the GNU system to produce what is known as the GNU/Linux operating system, which is the correct term for all distributions of Linux like Red Hat Linux and SuSE Linux.

1994 Linux 1.0 release

The Linux source code, available under the GNU General Public License, can be downloaded from the official kernel site.

Remember that what you would be downloading is the kernel, or core, of the operating system. Most of the additional functionality that you may want will also be available under the GNU General Public License.

When installing Linux, the source code is usually stored in /usr/src/linux.

You may find it easier to work with a distribution of Linux that has already put all the required functionality onto a CD-ROM. (Debian, Red Hat, SuSE, Mandrake to mention a few.)

As long as Linux conforms to the POSIX (IEEE Portable Operating System) standards, it will be compatible with most UNIX-type applications (and with other types of applications; see Running programs below), enabling you to compile and run your UNIX apps on a Linux machine.

Linux is compatible with quite a few of the UNIX brands, taking the best of breed from products such as SVR4 and Solaris 2.x and using some of their technology, such as:

The ability to load and unload kernel modules.

It is possible to mount a file system from UNIX on Linux instead of the file systems already supplied with Linux (ext2 and ext3), for example the journaling file system from AIX (IBM's version of UNIX) or the IRIX file system called XFS (Silicon Graphics). Being able to bring an alien file system into use on the Linux system is hugely powerful.

When working in UNIX I always thought that one of its powerful features was that the operating system did not "care" what type of file you were working with. It was always up to how the application or how you accessed the file, but we could load any kind of file on the system and work with it (a DOS file, a DOS database file). We would do a massive amount of shuffling information around using the powerful tools that are available in UNIX itself, and then easily port the changed file back to the originating system.

We could mount any device as a file system and work with the data as required, which was an excellent way of being able to support our clients.

When rooting around for more in-depth knowledge of Linux I found out the following, and I am sure that there is more:

Mounting file systems - All versions of MS-DOS and MS-Windows, Solaris, SunOS, BSD and other versions of UNIX (SCO, SVR4 etcetera), OS/2, MAC etcetera (see /usr/src/linux/fs).

Running programs - Executing apps written for MS DOS and Windows, SVR4, other UNIX variants.

In parallel, BSD has had its own continuing revolution. Around 1990 FreeBSD was created as the source code successor to the original BSD code; before that it was known as 386BSD.


In the mid-90s FreeBSD bore two spin-offs, NetBSD and OpenBSD (known as source code forks; this happens when certain people decide to take OS development in a different direction). All three derivatives are still maintained today.

NetBSD is aimed at broad platform support and is widespread in the scientific community.

OpenBSD is most popular on the PC, focuses on security and is very popular as a firewall platform.

There are commercial derivatives of BSD; the most popular is BSDi.

Standard C library

PAM library - authentication and session management

Curses

The only way that you can get a Unix system to do anything is through system calls, which are a part of the kernel.

This is the API to the kernel. The kernel has approximately 250 system calls that do different things, and only binary programs can call these, including the libraries, which are just centralized repositories of binary code.
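To make the idea of a system call concrete, here is a minimal sketch in Python, whose `os` module exposes thin wrappers around several system calls. The function name `echo_through_pipe` is hypothetical and chosen only for illustration; the sketch assumes a Unix-like system and a message small enough to fit in the kernel's pipe buffer.

```python
import os


def echo_through_pipe(message: bytes) -> bytes:
    """Pass bytes through a kernel pipe using wrappers around system calls.

    Hypothetical helper for illustration; assumes the message is small
    enough to fit in the pipe buffer so that write does not block.
    """
    read_fd, write_fd = os.pipe()          # pipe(2): the kernel creates the pipe
    try:
        os.write(write_fd, message)        # write(2): bytes are copied into kernel memory
        os.close(write_fd)                 # close(2): no more data will follow
        write_fd = -1
        return os.read(read_fd, len(message))  # read(2): bytes are copied back out
    finally:
        os.close(read_fd)
        if write_fd != -1:
            os.close(write_fd)
```

Calling `echo_through_pipe(b"hello kernel")` returns the same bytes. The data never leaves the machine, yet every step goes through the kernel, because user programs have no other way to reach a pipe.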

Libraries fulfill two purposes:

They save duplication of the same code that would otherwise be contained inside many binaries individually; this saves disk space.

Very often library calls provide an abstraction interface to similar system calls to simplify programming tasks for a programmer.

An example of the first purpose above:

The three binary programs ping, traceroute and telnet all support a command line argument that is the hostname of a computer on the Internet to connect to. However, these programs must format packets sent to IP addresses, not hostnames.

Somehow these programs must convert a hostname to an IP address, and instead of all three containing the same code they all call the same library call in the standard C library, gethostbyname(). They supply the requested hostname that was read from the command line in the parentheses of the call.

The C library now does the hard work of issuing multiple system calls to connect to a DNS server on the Internet, send a name lookup query, interpret the response and return the resolved IP address to the calling program (ping, traceroute or telnet).
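The same library call can be tried out from a script. The sketch below uses Python's `socket.gethostbyname()`, which wraps the resolver in the same spirit as the C library call described above; the helper name `resolve` is hypothetical.

```python
import socket


def resolve(hostname: str) -> str:
    """Return the IPv4 address for a hostname as a dotted string.

    Hypothetical helper: one library call hides the system calls
    needed to query the resolver.
    """
    return socket.gethostbyname(hostname)
```

For example, `resolve("localhost")` normally returns a loopback address without touching the network, and a string that is already an IPv4 address, such as `"127.0.0.1"`, is returned unchanged. A program like ping would make one such call and then send its packets to the address it gets back.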

A Unix kernel fulfills 4 main management tasks:

Memory management

Process management

File system management

IO management

The kernel exists as a physical file on the file system. In Linux it is in the /boot directory and is usually called vmlinux (uncompressed version), vmlinuz (compressed version), or a similar filename, commonly with a kernel version number at the end.

For example:

/boot/vmlinuz-2.4.18-22
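As a small illustration of this naming convention, the following sketch lists kernel images in a directory and extracts the version suffix from a filename. Both function names are hypothetical, and the sketch assumes the "vmlinux or vmlinuz, a dash, then the version" pattern described above; distributions may name their files differently.

```python
import glob
import os


def kernel_version(path):
    """Return the version suffix of a kernel image path, or '' if there is none."""
    name = os.path.basename(path)
    prefix, dash, version = name.partition("-")
    if dash and prefix in ("vmlinuz", "vmlinux"):
        return version
    return ""


def installed_kernels(boot_dir="/boot"):
    """Return the sorted kernel image paths found in boot_dir (may be empty)."""
    return sorted(glob.glob(os.path.join(boot_dir, "vmlinu[xz]*")))
```

With the example filename above, `kernel_version("/boot/vmlinuz-2.4.18-22")` gives `"2.4.18-22"`.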


At system boot time RAM only contains the boot loader, consuming a few kilobytes at the beginning of memory.

The boot loader loads the kernel binary into memory from the hard disk, and places it at the beginning of memory.

Once the kernel has been read in, the boot loader tells the CPU to execute it by issuing a JMP (Jump) instruction. The kernel now begins to execute.

To better understand what the system is doing and how it is doing it, it is good to visualize what is happening where in memory, remembering that all work on the system is performed in memory (see the section called “Assembler/ compilers / hardware architecture ”).

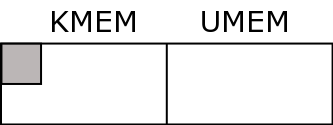

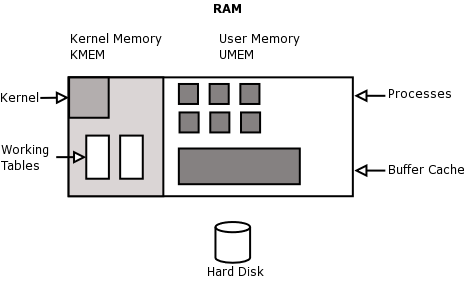

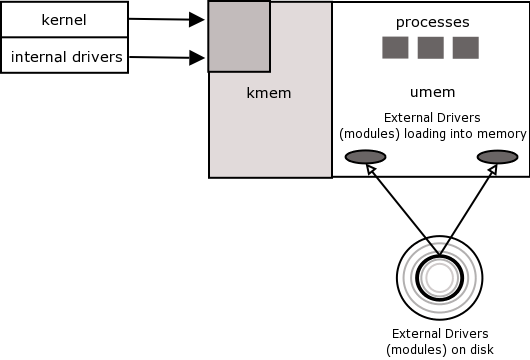

Memory is divided into two areas, kernel memory and user memory.

Kernel memory is also known as kmem, kernel space and kernel land.

This contains the kernel binary itself, working tables to keep track of status on the system and buffers.

The kernel binary is typically 3 MB in size and the working tables consume another 3 MB (only an example; may vary).

Examples of working tables that the kernel keeps in kernel memory for the operation of the system are the Global Open File Table, the Process Table and the Mount Table.

Traditionally once the size of kernel memory has been set on bootup (as determined by the finite set sizes of all the tables) it cannot be resized (System V). Linux has a clever way of overcoming this limitation by allowing kernel tables to grow into user memory as required!

User memory is also known as umem, user space and user land.

This is for the use of user programs.

Memory management is dealt with in more detail in Chapter 5.

[1] C is the most popular programming language developed and the most portable. All the major operating systems have been written in C, e.g. Netware and MS Windows (about 5% is still written in assembler, to do with drivers). MS Windows is now also being written in C++.


The kernel un-compresses

The kernel claims a certain amount of memory for its working tables and buffers (kernel memory).

All the drivers that are built into the kernel initialize by detecting their respective hardware

The kernel mounts the root file system: it mounts the root directory of the root file system to the kernel's idea of a system (superstructure) root directory.

The kernel executes /sbin/init

The kernel now waits for asynchronous events to occur; i.e. it is now ready to serve the system by servicing requests from processes and hardware.

Everything that happens subsequently on the system has to be either requested by a binary through a system call to the kernel, or an asynchronous event triggered by hardware.

Let's look at the process of booting up the machine from the time that it is switched on.

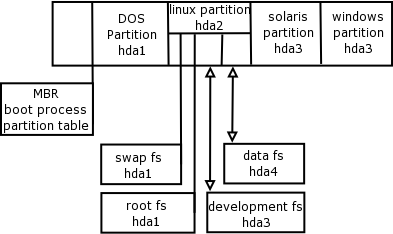

The BIOS does a Hardware Check and then proceeds to read the boot information on floppy, hard disk or CD-ROM as defined.

There are two popular boot loaders available, the first is LILO (Linux Loader) and the second is GRUB (Grand Unified Boot loader).

They are both two-stage boot loaders and will generally be installed on the small part of the hard disk that used to be called the master boot record (MBR) in the old days.

Once the boot information is found (if found on floppy the process for loading the second boot and the kernel is slightly different), the first part of the boot loader is loaded into RAM. Execution then jumps into that part of RAM, and the code that runs there loads the second part of the boot loader.

A map of available operating systems is read from the disk, and it is at this time that the user is presented with an opportunity to choose the operating system to use. If there is no response from the user, the boot loader will start the default operating system.

Assuming that LILO is the boot loader program that we have installed in this case, it displays Loading Linux on the screen, copies the kernel image and set-up code into memory and then starts to execute the set-up code.

Once Linux is initialized, the BIOS is no longer important to most of the rest of the boot process as Linux initializes the hardware in its own manner.

The set-up code functions as an initializer of hardware and also sets up the environment so that the kernel program can execute. This includes:

Amount of RAM in the system (this it seems to get from the BIOS)

Keyboard rate and delay

Disk controller and disk parameters

Checks for a video adapter card, IBM Micro channel bus and bus mouse.

Checks that the kernel image is loaded correctly

Interrupt Descriptor Table and Global Descriptor table (Provisionally at this stage)

FPU reset (If applicable)

Maps hardware interrupts from 32 to 47 to allow for CPU exceptions

Goes to protected mode

Calls startup_32() function and then terminates

In a nutshell, the Interrupt Descriptor Table has to store each interrupt (and exception) vector and its interrupt or exception handler. This has to happen prior to the kernel being able to enable interrupts.

80x86 processors require the use of segmentation; Linux prefers to use paging when it is not required to use segmentation by the architecture of the machine.

Segment Descriptors are held in one of two tables, either the Global Descriptor Table (GDT, defined once off) or a Local Descriptor Table (which could be defined per process).

A CPU exception occurs when the CPU detects an unsolvable condition when attempting to execute a command or an instruction.

| Number | Exception / Handler | Signal |
|---|---|---|
| 0 | Divide Error / divide_error() | SIGFPE |
| 1 | Debug / debug() | SIGTRAP |
| 2 | NMI / nmi() | None |
| 3 | Breakpoint / int3() | SIGTRAP |
| 19 | ... | see the section called “SIGNALS” |

Not all of the types of CPU exceptions can be fixed and the CPU will attempt to create a core dump to report the line of code where the instruction failed.

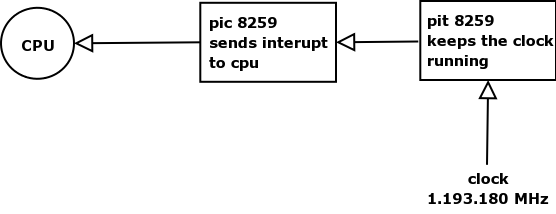

The PIC (Programmable Interrupt Controller) holds the IRQ lines, or hardware interrupts, from 0 to 15, which the BIOS uses. The kernel then maps these interrupts to vectors 32 to 47, because the CPU uses the lower vectors (from 0 to 19) for exceptions.
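The remapping is simple arithmetic, and a short sketch makes the numbers easy to check. The names `FIRST_IRQ_VECTOR`, `NUM_PIC_IRQS` and `irq_to_vector` are hypothetical and exist only for this illustration; they are not kernel identifiers.

```python
FIRST_IRQ_VECTOR = 32   # vectors below this are left to the CPU for exceptions
NUM_PIC_IRQS = 16       # IRQ lines 0 to 15 on the PIC


def irq_to_vector(irq):
    """Map a PIC IRQ line (0-15) to its interrupt vector (32-47)."""
    if not 0 <= irq < NUM_PIC_IRQS:
        raise ValueError("IRQ line must be between 0 and 15")
    return FIRST_IRQ_VECTOR + irq
```

So IRQ 0 lands on vector 32 and IRQ 15 on vector 47, leaving the low vectors free for CPU exceptions such as the divide error at vector 0.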

Although the first startup_32 function is responsible mainly for decompressing the kernel image it also sets up the segmentation registers with initial values and a provisional stack.

At this time "Uncompressing Linux" is shown on the screen. Then, once the decompression is complete, "OK, booting the kernel" is displayed.

The final position of the kernel in memory is then established at 0x00100000, and it is now that the second startup_32 function begins its job, which will include, inter alia:

Segmentation registers get their final values

Sets up the Kernel Mode stack for Process 0. Process 0, also referred to as the swapper, is the ancestor of all processes; it later creates Process 1, which runs "init".

Initializes the Page Tables

Sets bss segment of the kernel to zeros

Fills first reserved page frame with information from BIOS and operating system

Identifies the model of the CPU

(See Memory Addressing - Intel Microprocessors) There are only 6 segmentation registers. A program can re-use a register for different purposes, saving the context of the other purpose(s) in memory in between and restoring it to the register as needed. Three registers are reserved for the program code, the program stack segment and the data segment.

Page tables are stored in memory and are there to match or to map the linear addresses to physical addresses. Prior to starting the paging unit, the kernel has to initialize the paging tables. The paging unit with an Intel processor handles 4 KB pages at a time. A page table is only used for the current virtual memory used by a process (regions).
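A rough sketch of how a linear address relates to a 4 KB page may help here. The 10/10/12 bit split used below is an assumption: it is the classic two-level layout of 32-bit Intel paging and is not stated in the text above. The dictionary used as a page table is a deliberately simplified single-level stand-in, and all names are hypothetical.

```python
PAGE_SHIFT = 12                 # 4 KB pages: the low 12 bits are the offset
PAGE_SIZE = 1 << PAGE_SHIFT


def split_linear_address(addr):
    """Split a 32-bit linear address into (directory, table, offset).

    Assumes the classic two-level 10/10/12 split of 32-bit Intel paging.
    """
    directory = (addr >> 22) & 0x3FF
    table = (addr >> PAGE_SHIFT) & 0x3FF
    offset = addr & (PAGE_SIZE - 1)
    return directory, table, offset


def translate(addr, page_table):
    """Map a linear address to a physical one via a toy page table.

    page_table is a dict of page number -> frame number; a missing
    entry raises KeyError, standing in for a page fault.
    """
    page = addr >> PAGE_SHIFT
    offset = addr & (PAGE_SIZE - 1)
    return (page_table[page] << PAGE_SHIFT) | offset
```

For the kernel's load address 0x00100000, `split_linear_address` gives directory entry 0, table entry 256 and offset 0. With a toy table `{1: 5}`, the linear address 0x1234 translates to the physical address 0x5234: the page number changes, the offset within the page does not.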

In UNIX programming, the linear address space is usually partitioned into segments. So the bss segment is used for uninitialized variables. In other words, variables that are not set prior to the program executing, but rather set and unset during the execution of the process as it needs the variable(s). (Could also ref. Text, initialized Data, stack segments for your interest)

startup_32() then calls the start_kernel() function.

This section gives you an idea of where to look for information. Some of the functions and tables are covered later in the course; for others you may have to look around for yourself if you need the information.

The following are initialized by start_kernel():

Page Tables by paging_init()

Page Descriptors by free_area_init(), kmem_init() and mem_init()

Exception Handlers and Interrupt Vector data structures by trap_init() and init_IRQ()

Caches by kmem_cache_init() and kmem_cache_sizes_init()

System date and clock by time_init()

Process 1 created by kernel_thread()

Process 1 executes /sbin/init program (see Logging in)

Many messages are displayed during this process. You would have seen them when installing the operating system in chapter two of the system administrators course.

When the system finishes booting you will see the ever-familiar login prompt on your screen (unless you have started X Windows, in which case the login screen is presented graphically).

Page Descriptors are generally controlled by a page hash table (an index of sorts). It is used when a process works with a large file: when lots of page descriptors are loaded into memory it is hard to find a specific set of data, so the page hash table acts as a preliminary index. That way only the relevant pages of the file are loaded into memory, and memory is not swamped with irrelevant detail.

(Ref. slab, slab allocators, general and specific caches.) kmem_cache_init() and kmem_cache_sizes_init() are used during system initialization to create the general caches. Specific caches are created by kmem_cache_create(). Fundamentally, slab allocators exist because the same kinds of memory areas are requested again and again; rather than reallocating memory each time for the same purpose, the kernel uses a slab allocator (a memory algorithm) to keep those memory areas in a cache, with the most frequently used ones favoured by the algorithm.

(ref. /proc/sys/kernel/threads-max)

An example of a kernel thread is Process 0, also called the swapper. It is a system process that only ever runs in Kernel mode. Some other examples would be the buffer flusher and network connection services (keventd, kapm, kswapd, kflushd (bdflush), kupdated, ksoftirqd). Each of these executes a single C function, as opposed to a regular process, which uses both User and Kernel mode and has to go through the kernel for any kernel C functions it uses. (See system calls and traps.)

The operating system called Linux consists of multiple programs. The most important one is called "the kernel", or the core of the operating system. The operating system controls access to the hardware and the relationships between the applications and the users.

Although some operating systems, such as DOS, speak directly to the hardware, the UNIX and Linux operating systems deal with the hardware through the kernel and a device driver file (discussed previously).

To control access to hardware and other functionality, the operating system operates in either user mode or kernel mode. We will discuss further implications of this in the section on processes.

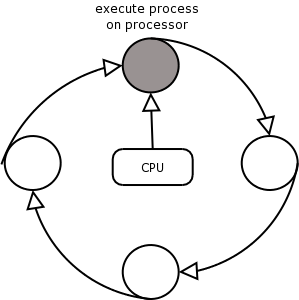

It is said that the Linux operating system is multi-user and multi-processing, and yes, a lot of users can access the system apparently all at the same time. However, with just one CPU only one process can ever run at a time.

It appears to be multitasking because it has a method of keeping each process separate and of calculating a fair share of processing time for each process: a system whereby it is possible to monitor the use of resources at all times and to keep usage at an optimum level.

We will cover the process in much more detail after the bootup section of the course; however, for our purposes at this time we need to look at a definition that can stand the test of the boot sequence.

A process can be one of two things: either the instance of a program that is executing in memory OR the part (context) of the program that is currently executing in memory.

In order to maintain a smooth flow of processor / process time there is a system process available called the scheduler or "sched".

Another process that commences during the bootup sequence is the initializer or "init". As discussed previously, it is created by Process 0 and becomes the parent of all other processes.


This section is covered in the system administration course, however we are attempting to ensure that you have the inner working details as well as the administration details.

Notice when you read the man pages that the commands, file structures etcetera are divided into categories that are represented by numbers, for example shutdown(8).

The following is a list of these categories:

1 Executable programs or shell commands

2 System calls (functions provided by the kernel)

3 Library calls (functions within program libraries)

4 Special files (usually found in /dev)

5 File formats and conventions e.g. /etc/passwd

6 Games

7 Miscellaneous (including macro packages and conventions), e.g. man(7), groff(7)

8 System administration commands (usually only for root)

9 Kernel routines [Non standard]

This was taken from the man page on "man".

Although you may think that the init program must be very complicated, actually all it does is read the inittab file and follow the instructions therein.

The following descriptions are extracts from the man pages for "init":

Init is the parent of all processes. Its primary role is to create processes from a script stored in the file /etc/inittab. This file usually has entries which cause init to spawn gettys on each line that users can log in. It also controls autonomous processes required by any particular system.

A runlevel is a software configuration of the system which allows only a selected group of processes to exist. The processes spawned by init for each of these runlevels are defined in the /etc/inittab file. Init can be in one of eight runlevels: 0-6 and S or s. The runlevel is changed by having a privileged user run telinit, which sends appropriate signals to init, telling it which runlevel to change to.

Runlevels 0, 1, and 6 are reserved. Runlevel 0 is used to halt the system, runlevel 6 is used to reboot the system, and runlevel 1 is used to get the system down into single user mode. Runlevel S is not really meant to be used directly, but more for the scripts that are executed when entering runlevel 1. For more information on this, see the manpages for shutdown(8) and inittab(5).

Runlevels 7-9 are also valid, though not really documented. This is because "traditional" Unix variants don't use them. In case you're curious, runlevels S and s are in fact the same. Internally they are aliases for the same runlevel.

After init is invoked as the last step of the kernel boot sequence, it looks for the file /etc/inittab to see if there is an entry of the type initdefault (see inittab(5)). The initdefault entry determines the initial runlevel of the system. If there is no such entry (or no /etc/inittab at all), a runlevel must be entered at the system console.

Runlevel S or s bring the system to single user mode and do not require an /etc/inittab file. In single user mode, a root shell is opened on /dev/console. Only when entering single user mode does init read the console's ioctl(2) states from /etc/ioctl.save. If this file does not exist, init initializes the line at 9600 baud and with CLOCAL settings.

When init leaves single user mode, it stores the console's ioctl settings in this file so it can re-use them for the next single-user session.

When entering a multi-user mode for the first time, init performs the boot and bootwait entries to allow file systems to be mounted before users can log in. Then all entries matching the runlevel are processed.

After it has spawned all of the processes specified in /etc/inittab, init waits for one of its descendant processes to die, a powerfail signal, or until it is signaled by telinit to change the system's runlevel.

When one of the above three conditions occurs, it re-examines the /etc/inittab file.

New entries can be added to this file at any time. However, init still waits for one of the above three conditions to occur. To provide for an instantaneous response, the telinit Q or q command can wake up init to re-examine the /etc/inittab file.

When init is requested to change the runlevel, it sends the warning signal SIGTERM to all processes that are undefined in the new runlevel. It then waits 5 seconds before forcibly terminating these processes via the SIGKILL signal.

Note that init assumes that all these processes (and their descendants) remain in the same process group which init originally created for them. If any process changes its process group affiliation it will not receive these signals. Such processes need to be terminated separately.
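The "warn first, then force" sequence described above can be sketched for a single child process. This is only an illustration of the signal order, not how init itself is written: init works on whole groups of processes, whereas the hypothetical helper stop_process below handles one child that we started ourselves.

```python
import signal
import subprocess
import sys


def stop_process(proc, grace_seconds=5.0):
    """Send SIGTERM, wait up to grace_seconds, then fall back to SIGKILL.

    Returns the exit status of the child; a negative value is the number
    of the signal that terminated it.
    """
    proc.send_signal(signal.SIGTERM)        # polite warning
    try:
        return proc.wait(timeout=grace_seconds)
    except subprocess.TimeoutExpired:
        proc.kill()                         # SIGKILL cannot be caught or ignored
        return proc.wait()


if __name__ == "__main__":
    # A child that would otherwise sleep for a minute.
    child = subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(60)"])
    print("child exit status:", stop_process(child, grace_seconds=5.0))
```

A process that has moved itself into another process group would simply never be passed to such a helper, which mirrors the caveat in the paragraph above.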

/sbin/telinit is linked to /sbin/init. It takes a one-character argument and signals init to perform the appropriate action. The following arguments serve as directives to telinit:

0,1,2,3,4,5 or 6 -- tell init to switch to the specified run level.

a,b,c -- tell init to process only those /etc/inittab file entries having runlevel a,b or c.

Q or q -- tell init to re-examine the /etc/inittab file.

S or s -- tell init to switch to single user mode.

U or u -- tell init to re-execute itself (preserving the state). No re-examining of /etc/inittab file happens. Run level should be one of Ss12345, otherwise request would be silently ignored.

It is possible to pass a number of flags to init from the boot monitor (e.g. LILO).

Init accepts the following flags:

-s, S, single -- Single user mode boot. In this mode /etc/inittab is examined and the bootup rc scripts are usually run before the single user mode shell is started.

-a, auto -- The LILO boot loader adds the word "auto" to the command line if it booted the kernel with the default command line (without user intervention). If this is found init sets the "AUTOBOOT" environment variable to "yes". Note that you cannot use this for any security measures - of course the user could specify "auto" or -a on the command line manually.

Init listens on a fifo in /dev, /dev/initctl, for messages. Telinit uses this to communicate with init. The interface is not very well documented or finished. Those interested should study the initreq.h file in the src/ subdirectory of the init source code tar archive.

Init reacts to several signals:

SIGHUP -- Init looks for /etc/initrunlvl and /var/log/initrunlvl. If one of these files exists and contains an ASCII runlevel, init switches to the new runlevel. This is for backwards compatibility only! In the normal case (the files don't exist) init behaves as if telinit q was executed.

SIGUSR1 -- On receipt of this signal, init closes and re-opens its control fifo, /dev/initctl. Useful for bootscripts when /dev is remounted.

SIGINT -- Normally the kernel sends this signal to init when CTRL-ALT-DEL is pressed. It activates the ctrlaltdel action.

Examples of programs for specified run level taken from the /etc/inittab file:

Always running in runlevels 2, 3, 4, or 5 and displays login (from getty) on console (tty1):

1:2345:respawn:/sbin/getty 9600 tty1

Always running in runlevels 2, 3, or 4 and displays login (getty) on console (tty2):

2:234:respawn:/sbin/getty 9600 tty2

Run once when switching to runlevel 3, using the scripts stored in /etc/rc3.d/:

3:3:wait:/etc/init.d/rc 3

Shut down the machine, with the relevant options, when control-alt-delete is pressed:

ca:12345:ctrlaltdel:/sbin/shutdown -t1 -a -r now

id:3:initdefault:

By default the system must boot to run-level 3.
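Each of the inittab lines above has four colon-separated fields (id, runlevels, action, process). The following sketch shows one way to pick them apart and to find the default runlevel. The helper names parse_inittab_line and default_runlevel are hypothetical and the code is not taken from init; it assumes well-formed lines.

```python
from collections import namedtuple

InittabEntry = namedtuple("InittabEntry", "id runlevels action process")


def parse_inittab_line(line):
    """Split one inittab line into its four fields.

    Returns None for blank lines and comments. A line with fewer than
    three colons raises ValueError.
    """
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    # Only the first three colons separate fields; the command may contain more.
    ident, runlevels, action, process = line.split(":", 3)
    return InittabEntry(ident, runlevels, action, process)


def default_runlevel(lines):
    """Return the runlevel named by the initdefault entry, or None."""
    for line in lines:
        entry = parse_inittab_line(line)
        if entry is not None and entry.action == "initdefault":
            return entry.runlevels
    return None


if __name__ == "__main__":
    sample = [
        "1:2345:respawn:/sbin/getty 9600 tty1",
        "ca:12345:ctrlaltdel:/sbin/shutdown -t1 -a -r now",
        "id:3:initdefault:",
    ]
    print(parse_inittab_line(sample[0]))
    print("default runlevel:", default_runlevel(sample))
```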

Sysinit:

si::sysinit:/etc/init.d/rcS

The runlevels field of this entry is empty because init ignores it for sysinit entries: the entry is executed once during system boot, before any boot or bootwait entries, and it runs /etc/init.d/rcS.

In Debian, /etc/init.d/rcS runs all the /etc/rcS.d/S* scripts, which are symlinks to scripts in /etc/init.d/, and then the scripts in /etc/rc.boot/ (deprecated).

| Start up file | Program | Comment |
|---|---|---|
| README | | |
| S05keymaps-lct.sh | /init.d/keymaps-lct.sh | |
| S10checkroot.sh | /init.d/checkroot.sh | |
| S20modutils | /init.d/modutils | |
| S30checkfs.sh | /init.d/checkfs.sh | |
| S35devpts.sh | /init.d/devpts.sh | |
| S35mountall.sh | /init.d/mountall.sh | |
| S35umsdos | /init.d/umsdos | |
| S40hostname.sh | /init.d/hostname.sh | |
| S40network | /init.d/network | |
| S41ipmasq | /init.d/ipmasq | |
| S45mountnfs.sh | /init.d/mountnfs.sh | |
| S48console-screen.sh | /init.d/console-screen.sh | |
| S50hwclock.sh | /init.d/hwclock.sh | |
| S55bootmisc.sh | /init.d/bootmisc.sh | |
| S55urandom | /init.d/urandom | |

A typical /etc/rc3.d/ directory

3:3:wait:/etc/init.d/rc 3

When changing run levels to run level 3, or down from run level 3, init runs /etc/init.d/rc 3 just once, and that script works through the directory /etc/rc3.d/. The scripts starting with an S (Start) are used to start services (for example at bootup time) and the scripts starting with a K (Kill) are used to stop them (for example at shutdown time). The two-digit number after the letter sets the order in which the scripts run.

Below are some of the files that would be in this directory /etc/rc3.d:

| Script | Program called | Comment |
|---|---|---|
| K25nfs-server | /init.d/nfs-server | |
| K99xdm | /init.d/xdm | |
| S10sysklogd | /init.d/sysklogd | |
| S12kerneld | /init.d/kerneld | |
| S15netstd_init | /init.d/netstd_init | |
| S18netbase | /init.d/netbase | |
| S20acct | /init.d/acct | |
| S20anacron | /init.d/anacron | |
| S20gpm | /init.d/gpm | |
| S20postfix | /init.d/postfix | |
| S20ppp | /init.d/ppp | |
| S20ssh | /init.d/ssh | |
| S20xfs | /init.d/xfs | |
| S20xfstt | /init.d/xfstt | |
| S20xntp3 | /init.d/xntp3 | |
| S89atd | /init.d/atd | |
| S89cron | /init.d/cron | |
| S99rmnologin | /init.d/rmnologin | |
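The naming convention used in /etc/rc3.d/ can be sketched in a few lines. This is only an illustration under the common System V assumption that K scripts are called with the argument stop before S scripts are called with the argument start, each group in name order; plan_runlevel is a hypothetical helper and not part of any real rc script.

```python
def plan_runlevel(script_names):
    """Return (script, argument) pairs in the order they would be run.

    Names that start with neither K nor S (such as README) are ignored.
    Sorting by name works because the two-digit number follows the letter.
    """
    kills = sorted(n for n in script_names if n.startswith("K"))
    starts = sorted(n for n in script_names if n.startswith("S"))
    return [(n, "stop") for n in kills] + [(n, "start") for n in starts]


if __name__ == "__main__":
    names = ["S20ssh", "K99xdm", "S10sysklogd", "K25nfs-server", "README"]
    for script, argument in plan_runlevel(names):
        print("/etc/rc3.d/" + script, argument)
```

A real rc script does more than this (for example, it may skip services whose state does not change between run levels), so treat the sketch as a reading aid for the table rather than a replacement.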

Each time a process terminates, init records the fact and the reason it died in /var/run/utmp and /var/log/wtmp, provided that these files exist. (See the full description in Processes, Chapter 6.)

The file /etc/inittab contains the background programs that are used to keep the system running. One of these programs is a getty process for each serial port.

co:2345:respawn:/sbin/getty ttyS0 CON9600 vt102

respawn -- Re-run the program if it dies. We want this to happen so that a new login prompt will appear when you log out of the console.

/sbin/getty ttyS0 CON9600 vt102 -- In this case, we're telling the program called getty to connect to /dev/ttyS0 using the settings for CON9600.

CON9600 -- This is a line that exists in /etc/gettydefs. This entry represents a terminal running at 9600bps.

vt102 -- The terminal is a later-model VT102 (later than vt100).

The getty program lives in /sbin and is used by /etc/inittab to call the /etc/gettydefs file as follows:

Define co in gettydefs:

# Serial console 9600, 8, N, 1, CTS/RTS flow control

co# B9600 CS8 -PARENB -ISTRIP CRTSCTS HUPCL # B9600

SANE CS8 -PARENB -ISTRIP CRTSCTS HUPCL #@S @L login: #co


This means that the console is a serial console running at a 9600 baud rate, with 8 bits, no parity, 1 stop bit and CTS/RTS (hardware) flow control set up.

If the line does not manage to handshake, getty refers to the label at the end of the line for where to try next; in the case above it is looking at "co" again.

If you check the man pages you can find all the labels that you can use in a gettydefs definition.

As long as you define each label in the inittab correctly and then follow it up with a corresponding entry in the gettydefs file, you can redefine any handshake between terminals, consoles, printers and any other serial devices.

Each configuration line has the syntax:

<label>#<initial_flags>#<final_flags>#<login_prompt>#<next_label>


The <label> is referred to on the getty command line.

The <next_label> is the definition used if an RS-232 Break is sent. As the console is always 9600bps, this points back to the original label.

<initial_flags> are the serial line parameters used by getty.

<final_flags> are the serial line parameters set by getty before it calls login. You will usually want to set a 9600bps line, SANE terminal handling, eight data bits, no parity and to hang up the modem when the login session is finished.

The <login_prompt> for serial lines is traditionally the name of the machine, followed by the serial port, followed by login: and a space. The macro that inserts the name of the machine and the serial port may vary.
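To make the five-field syntax concrete, here is a minimal sketch that splits one gettydefs entry on the # separators. It assumes the entry has already been joined onto a single line and that no field contains a literal #; parse_gettydefs_entry is a hypothetical helper, not part of getty.

```python
def parse_gettydefs_entry(entry):
    """Split '<label>#<initial_flags>#<final_flags>#<login_prompt>#<next_label>'."""
    fields = entry.split("#")
    if len(fields) != 5:
        raise ValueError("expected five '#'-separated fields, got %d" % len(fields))
    label, initial, final, prompt, next_label = fields
    return {
        "label": label.strip(),
        "initial_flags": initial.split(),   # flags are separated by spaces
        "final_flags": final.split(),
        "login_prompt": prompt,             # kept as-is, including the trailing space
        "next_label": next_label.strip(),
    }


if __name__ == "__main__":
    line = "9600# B9600 CS8 # B9600 SANE -ISTRIP HUPCL #@S @L @B login: #2400"
    entry = parse_gettydefs_entry(line)
    print(entry["label"], "falls back to", entry["next_label"])
    print("final flags:", entry["final_flags"])
```

Following next_label from entry to entry in the modem table reproduces the fallback chain from 38400 down to 300 and back round again.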

When using a modem, you will have to investigate and ultimately use the uugetty program. This program does file lock checking.

Modem entries in /etc/gettydefs

38400# B38400 CS8 # B38400 SANE -ISTRIP HUPCL #@S @L @B login: #19200

19200# B19200 CS8 # B19200 SANE -ISTRIP HUPCL #@S @L @B login: #9600

9600# B9600 CS8 # B9600 SANE -ISTRIP HUPCL #@S @L @B login: #2400

2400# B2400 CS8 # B2400 SANE -ISTRIP HUPCL #@S @L @B login: #1200

1200# B1200 CS8 # B1200 SANE -ISTRIP HUPCL #@S @L @B login: #300

300# B300 CS8 # B300 SANE -ISTRIP HUPCL #@S @L @B login: #38400


Add the following line to your /etc/inittab, so that uugetty is run on your serial port (use the information pertaining to your environment):

1:456:respawn:/sbin/uugetty ttyS1 F38400 vt100


The following entry is typical for the system console. It sets many initial and final flags that control how the console behaves.

console# B19200 OPOST ONLCR TAB3 BRKINT IGNPAR ISTRIP IXON IXANY

PARENB ECHO ECHOE ECHOK ICANON ISIG CS8 CREAD # B19200 OPOST ONLCR

TAB3 BRKINT IGNPAR ISTRIP IXON IXANY PARENB ECHO ECHOE ECHOK ICANON

ISIG CS8 CREAD #Console Login: #console

Note: This entry would be one line in the /etc/gettydefs file, but because of display and printing issues we have entered linebreaks.

You can use the term utility from within an X terminal window. X (or XFree86 with most versions of Linux) enables you to open a window specifically to run term.

Most of the X connection handling is with a utility called txconn. You must execute the txconn program on the remote machine (connected over a network, as X doesn't work with any reasonable degree of speed over a modem) and place it in the background as a daemon. When txconn goes to the background, it returns a message containing a display number that identifies the process:

Xconn bound to screen 11

When you connect to the remote txconn daemon from an X window, you use this number to identify the screen. You identify the screen by using the DISPLAY environment variable. If the binding were to screen 11, as shown in the preceding message, you would set the variable as follows in the C shell, where remotename is the name of the remote machine:

setenv DISPLAY remotename:11

With the Bourne or Korn shell, you set the same environment variable with the following commands:

DISPLAY=remotename:11

export DISPLAY

When the term client is started in the local X window, it will connect to screen 11 on the remote machine. txconn knows about screen 11 on the remote so all X instructions will be transferred to the local machine's X window.

You can run the local machine with windows opening on the remote system's X session using txconn, but a better approach is to use the tredir command.

Running X sessions over a modem using txconn is possible, although the high amount of traffic X involves can bring even the fastest modem to a crawl. A local area network connection has enough speed to sustain X window traffic. A low-overhead version of X called LBX is available for some platforms that may help solve the overhead problem for modems. Also useful is a utility called sxpc, which compresses X protocol packets for transmission over modems. You can get sxpc with some versions of term, and it has worked well with 14.4kbps and higher speed modems, although performance is predictably slow.

Check gettydefs(5), stty(1), termio(3), agetty(8), mgetty(8), setserial(8) for further insight.

"Basic Concepts About Termcap and Terminfo" (quoted from www.die.net):

The file /etc/termcap is a text file that lists the terminal capabilities. Several applications use the termcap information to move the cursor around the screen and do other screen-oriented tasks. tcsh, bash, vi and all the curses-based applications use the termcap database.

The database describes several terminal types. The TERM environment variable selects the right behaviour at run-time, by naming a termcap entry to be used by applications.

Within the database, each capability of the terminal appears as a two-letter code and a representation of the actual escape sequence used to get the desired effect. The separator character between different capabilities is the colon (":"). As an example, the audible bell, with code "bl", usually appears as "bl=^G". This sequence tells that the bell sound is obtained by printing the control-G character, the ASCII BEL.

In addition to the bl capability, the vb capability is recognized. It is used to represent the "visible bell". vb is usually missing in the linux entry of the termcap file.
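The colon-separated layout described above can be illustrated with a small parser. It is a simplified sketch: it takes only the capability part of an entry and ignores the leading name field, backslash-escaped colons and continuation lines that a real termcap file contains. The function name is hypothetical, and the sample capabilities (am, co, bl) are used purely for illustration.

```python
def parse_termcap_capabilities(fields):
    """Parse a string such as 'am:co#80:bl=^G:' into a dictionary.

    Boolean capabilities map to True, numeric ones (name#value) to an int
    and string ones (name=value) to the raw escape-sequence text.
    """
    caps = {}
    for field in fields.split(":"):
        field = field.strip()
        if not field:
            continue                              # empty field between colons
        if "=" in field:
            name, value = field.split("=", 1)     # string capability
            caps[name] = value
        elif "#" in field:
            name, value = field.split("#", 1)     # numeric capability
            caps[name] = int(value)
        else:
            caps[field] = True                    # boolean capability
    return caps


if __name__ == "__main__":
    caps = parse_termcap_capabilities("am:co#80:bl=^G:")
    print("audible bell sequence:", caps["bl"])
    print("visible bell defined:", "vb" in caps)
```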

Most modern applications and libraries use the terminfo database instead of termcap. This database uses one file per terminal-type and lives in /usr/lib/terminfo; to avoid using huge directories, the description of each terminal type is stored in a directory named after its first letter; the linux entry, therefore, is /usr/lib/terminfo/l/linux.

To build a terminfo entry you'll "compile" the termcap description; refer to the tic program and its manual page.
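The "directory named after its first letter" layout is easy to show in code. The sketch below only builds the expected file name; it does not check that the file exists, and the default base directory is simply the one quoted in the text (other systems use a different base). terminfo_path is a hypothetical helper.

```python
import posixpath


def terminfo_path(term, base="/usr/lib/terminfo"):
    """Return the path where the compiled entry for `term` would be stored."""
    if not term:
        raise ValueError("terminal name must not be empty")
    # One sub-directory per first letter keeps each directory small.
    return posixpath.join(base, term[0], term)


if __name__ == "__main__":
    print(terminfo_path("linux"))
    print(terminfo_path("vt100", base="/usr/share/terminfo"))
```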

Terminfo (formerly Termcap) is a database of terminal capabilities and more.

For every (well almost) model of terminal it tells application programs what the terminal is capable of doing. It tells what escape sequences (or control characters) to send to the terminal in order to do things such as move the cursor to a new location, erase part of the screen, scroll the screen, change modes, change appearance (colours, brightness, blinking, underlining, reverse video etc.). After about 1980, many terminals supported over a hundred different commands (some of which take numeric parameters).

One way in which terminfo gives its information to an application program is via the "ncurses" functions that a programmer may put into a C program. Some programs get info directly from a terminfo file without using ncurses.

Included in the terminfo are often a couple of initialisation strings, which may change the appearance of the screen, change what mode the terminal is in, and/or make the terminal emulate another terminal.

However, this is not done automatically. One might expect that the getty program should do this, but if it did, one could make a change to the set-up at the terminal and this change wouldn't take effect because the init string would automatically cancel it.

To force an initialisation you will use commands given on the command line (or in a shell script such as /etc/profile) to send the init strings - "tset", "tput init", or "setterm -initialize".

Sometimes there is no need to send the init strings since the terminal may set itself up correctly when it is powered on (using options/preferences one has set up and saved in the non-volatile memory of the terminal). Most dumb terminals have an entire setup sequence sort of like the CMOS of a PC, and it is here that you can set the hardware side of the handshake.

For the Debian Distribution of Linux, several commonly used terminals (including the monitor-console) are in the ncurses-term package. These are put into /etc/terminfo/. All of the terminals in the database are in the ncurses-bin package and go into /usr/share/terminfo/.

See the man pages: terminfo(5) or termcap(5) for the format required to create (or modify) the source files.

The data in the source files is compiled with the "tic" program (Also capable of converting between termcap source format and terminfo format).

The installation program which was used to install Linux probably installed the compiled files on your hard disk so you don't need to compile anything unless you modify /etc/termcap (or terminfo.src ).

"tic" will automatically install the resulting compiled files into a terminfo directory ready to be used by application programs.

In order to save disk space, one may delete all of the terminfo database except for the terminal types that you have (or might need in the future). Don't delete any of the termcaps for a "Linux terminal" (the console) or the xterm ones if you use X Window. The terminal type "dumb" may be needed when an application program can't figure out what type of terminal you are using. It would save disk space if install programs only installed the terminfo for the terminals that you have and if you could get a termcap for a newly installed terminal over the Internet in a few seconds.

We have discussed environment variables prior to this course. The environment variable TERM should be set to the name of the terminal you are using.

This name must be in the terminfo database.

Type "set" at the command line to see what TERM is set to (or type: tset -q).

At a console (monitor) TERM is set to "linux" which is the PC monitor emulating a fictitious terminal model named "linux". Since "linux" is close to a vt100 terminal and many text terminals are also, the "linux" designation will sometimes work as a temporary expedient with a text terminal.

If more than one type of terminal may be connected to the same port (/dev/tty...) then TERM needs to be set each time someone connects to the serial port. There is often a query escape sequence so that the computer may ask the terminal what type it is. Another way is to ask the user to type in (or select) the type of terminal s/he is using.

You may need to use tset for this or write a short shell script to handle this.

The .profile login script is executed and contains within it the following statement: eval `tset -s ?vt100`. The user is then asked if they are using a vt100 and either responds yes or types in the actual terminal type they are using. Then tset sends the init string and sets TERM to this terminal name (type).

Note: Terminal emulation and the gettydefs file layout always remind me how flexible a Unix derived system actually is. You can change anything you want or need to change to make things work and these sections are a good portrayal of that fact.

There are warnings as well: no system this powerful can be changed with no thought as to how the change may affect other sections of the operating system. As you can see, they are linked, and intricately so.

There are 6 virtual terminals in Linux, available by using Alt-F1 through Alt-F6.

There are normally 6 terminals available in X also, F7 through F12. If an X session is started from F1 and you also have an active session on F2, you can type Ctrl-Alt-F2 to go from the X session to the virtual console on F2. Also to get back to your X session, you can type Ctrl-Alt-F7.

The above paragraph assumes that your terminals are set up in the standard manner with 6 virtual terminals available, all of which spawn the getty program.

Check the /etc/inittab file for the following lines:

1:2345:respawn:/sbin/mingetty tty1

2:2345:respawn:/sbin/mingetty tty2

3:2345:respawn:/sbin/mingetty tty3

4:2345:respawn:/sbin/mingetty tty4

5:2345:respawn:/sbin/mingetty tty5

6:2345:respawn:/sbin/mingetty tty6

Note: mingetty and fbgetty are used most often on the consoles for Linux (as long as you are not using a real text terminal). (See fbgetty.)

Each virtual terminal uses approximately 8 KB of kernel memory. As per the performance tuning examples, allocate fewer virtual terminals if this amount of kernel memory is affecting performance (see the listing below, which uses "off" instead of "respawn").

1:2345:respawn:/sbin/mingetty tty1

2:2345:off:/sbin/mingetty tty2

3:2345:off:/sbin/mingetty tty3

4:2345:off:/sbin/mingetty tty4

5:2345:off:/sbin/mingetty tty5

6:2345:off:/sbin/mingetty tty6
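As a quick check on a listing like the one above, the following sketch counts the getty entries that are still active and multiplies by the approximate 8 KB figure quoted in the text. The figure is the text's estimate rather than a measured value, and the helper names are hypothetical.

```python
KB_PER_VIRTUAL_TERMINAL = 8   # approximate figure quoted in the text


def active_terminals(inittab_lines):
    """Return the terminal names of getty entries whose action is not 'off'."""
    active = []
    for line in inittab_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        ident, runlevels, action, process = line.split(":", 3)
        if action != "off" and "getty" in process:
            active.append(process.split()[-1])   # last word is the tty name
    return active


def estimated_kernel_memory_kb(inittab_lines):
    """Estimate kernel memory used by the active virtual terminals, in KB."""
    return len(active_terminals(inittab_lines)) * KB_PER_VIRTUAL_TERMINAL


if __name__ == "__main__":
    tuned = ["1:2345:respawn:/sbin/mingetty tty1"] + [
        "%d:2345:off:/sbin/mingetty tty%d" % (n, n) for n in range(2, 7)
    ]
    print(active_terminals(tuned), estimated_kernel_memory_kb(tuned), "KB")
```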

When the screen goes "insane", say after you have read a binary file such as /var/log/wtmp with the command "cat", type:

$ reset

As you type the word reset you may not be able to see it on your screen; just continue typing and press Enter. (We used to use "^J stty sane ^J" for serial terminals in the old days.)

"The screen program allows you to run multiple virtual terminals, each with its own interactive shell, on a single physical terminal or terminal emulation window. Even if you use Linux virtual consoles or multiple xterm windows, it is worth exploring screen for its rich feature set, which includes:

scrollback history,

copy-and-paste,

output logging,

digraph entry, and

the ability to detach an entire screen session from your terminal and reattach it later.

If you frequently log on to a Linux machine from a remote terminal or using a VT100 terminal program, screen will make your life much easier with the detach feature.

You are logged in via a dialup connection, and are running a complex screen session with editors and other programs open in several windows.

Suddenly you need to leave your terminal, but you don't want to lose your work by hanging up.

Simply type ^A d to detach the session, then log out. (Or, even quicker, type ^A DD to have screen detach and log you out itself.)

When you log on again later, enter the command screen -r, and screen will magically reattach all the windows you had open. "


We have logged in and we have access to the system, either as root or as a user.

In order to continue we need to get more familiar with the workings of the kernel in relation to the operating system, the user and the hardware.

Let's look at the diagram that we used in the Fundamentals course explaining the layered structure and workings of the operating system. We also used a version of this diagram before in chapter 3.

Note: We have covered the important part of the hardware knowledge required here in the "history" section above - please re-read it if unsure, as understanding of that level is important.

Remember that the kernel controls:

System call facility that allows processes to use kernel functions.

Process creation and tracking

Process priority control

Swapping pages

IPC - inter-process communication

Cache, Buffer and I/O management

File creation, removal and modification and permissions

Multiple filesystems

Log file data accumulation

So the kernel itself is a process manager. What does that mean to the user running a command in the Applications layer and wanting a result immediately displayed on the terminal screen?

Executing a program in userland or user-mode means that the program cannot access the kernel directly in any way. If the program is executed in kernel mode there are no restrictions on its access to the kernel.

We have already discussed how each CPU has its own instruction set to switch from User to Kernel modes and then to return from kernel to user mode.

A user executing a request will only need to access kernel mode if the requested service is a kernel provided service. It accesses the kernel service through something called a system call (mentioned in the Fundamentals course).

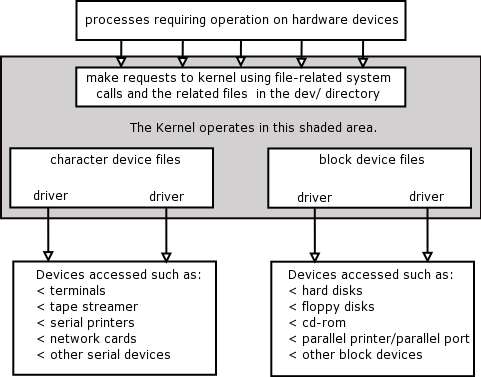

A user wants to access a regular file or a directory file. The user is issuing a command (Application Layer or Standard Library of Utilities Layer), but in order to get the information required the hard disk is going to have to be accessed, and the hard disk is defined as a block device. Therefore it has a block device file in /dev and has to be accessed through the kernel.

PREVIOUS EXPLANATION (Fundamentals): "The procedures in the Standard Library are called, and these procedures ensure that a trap instruction switches from User mode into Kernel mode and that the kernel then gets the control to perform the work requested of it."

Let's look at some of the relevant system calls (Standard Library of Procedures) that would have to take place:

switch -- A switch has to be made from user to kernel mode. The switch is a TRAP: the Standard Library procedure, written in C, wraps the assembler code needed to raise the trap and hand control to the kernel, which in turn talks to the hardware device. This switch happens as part of every system call.

open() -- Now the file has to be opened, as a process cannot access a file in any other way. The system call, open(), is structured as follows:

fd = open(path, flag, mode)

where:

fd= the file descriptor returned by the call. The kernel also creates an open file object. The file descriptor links the process to the opened file, and the object holds the data for that link: a pointer to the kernel memory being used, the current position (offset) at which the next operation on the file will take place, where the file actually is, and a pointer to the operations allowed by the flag field defined below.

path= the pathname of where the file is to be found

flag= how the file must be opened (read, write, append), and whether it must be created if it does not exist

mode= access rights of a newly created file

flock() -- As more than one user could be accessing the same file, there is an flock() system call which allows processes to synchronise their operations on an entire file. (Locks on just part of a file are placed with fcntl() instead.)

creat() -- If the file does not exist it will need to be created, and creat() is the system call that does this. (The kernel handles it in the same way as open().)

read() write() -- Device files are usually accessed sequentially (regular files either randomly or sequentially). A read() or write() is performed from the current pointer or offset. Each call returns the number of bytes actually read or written, and the kernel advances the offset by that amount.

lseek() -- To change the value of the offset, a process uses lseek().

close() -- To close a file, the syntax is close(fd), where fd is the file descriptor returned by open().

rename() -- To rename a file, the syntax is rename(old, new).

unlink() -- To remove a file, the syntax is unlink(pathname). This one may make more sense a little later in this course, but it changes the parent directory's list of entries and the file's link count.
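The sequence of file system calls described above can be tried out from Python, whose os module offers thin wrappers with the same names as the underlying calls. This is only a minimal sketch: the function name file_syscall_tour and the file names demo.txt and renamed.txt are made up for the illustration.

```python
import os
import tempfile


def file_syscall_tour(directory):
    """Walk through open, write, lseek, read, close, rename and unlink."""
    old_path = os.path.join(directory, "demo.txt")      # hypothetical file name
    new_path = os.path.join(directory, "renamed.txt")   # hypothetical file name

    # fd = open(path, flag, mode): create the file and open it for read/write.
    fd = os.open(old_path, os.O_RDWR | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        written = os.write(fd, b"hello kernel")  # returns the number of bytes written
        os.lseek(fd, 6, os.SEEK_SET)             # move the offset to byte 6
        data = os.read(fd, 100)                  # read from the offset up to end of file
    finally:
        os.close(fd)                             # give the file descriptor back

    os.rename(old_path, new_path)                # rename(old, new)
    existed_after_rename = os.path.exists(new_path)
    os.unlink(new_path)                          # remove the directory entry
    return written, data, existed_after_rename, os.path.exists(new_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(file_syscall_tour(tmp))
```

Each os call here enters the kernel through the corresponding system call, so the offset handling seen in the example (writing 12 bytes, seeking back to byte 6, reading the remainder) is done by the kernel's open file object and not by Python.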

PREVIOUS EXPLANATION (Fundamentals): "Once the kernel has performed the task, it will return a successful or a failure status and then instigates a return from the trap instruction back into user mode."

exit()

In the case of the exit() system call, we would hope that the value between the brackets is zero (0), which represents the successful completion of a process. Any other value signals a failure of some kind.
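To see an exit status arrive at the parent, the following minimal sketch starts a child Python interpreter that calls exit with a chosen value. The helper name exit_status_of is an assumption made for this example, and subprocess is used here only as a convenient way to create the child.

```python
import subprocess
import sys


def exit_status_of(code):
    """Run a child that calls exit(code) and return the status the parent receives."""
    child = subprocess.run(
        [sys.executable, "-c", f"import sys; sys.exit({code})"]
    )
    return child.returncode


if __name__ == "__main__":
    print(exit_status_of(0))   # the child reported success
    print(exit_status_of(3))   # the child reported a failure of its own choosing
```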

A program is an executable file on the hard disk, whereas a process is a running program.

A process is an instance of a disk program in memory, executing instructions on the processor.

The only way to run a program on a Unix/Linux system is to request the kernel to execute it via an exec() system call.

Remember that the only things that can make system calls are processes (programs that are executing).

So how do you as a human get the kernel to run a program for you? The shell acts as your gateway to the kernel! You use the shell to make system calls to the kernel on your behalf. In fact, the shell is simply an interface that gives you access to the kernel's exec() system call.

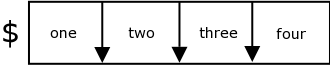

When you type a command line into the shell, the shell parses your input in a certain way. Let's take a look at how the shell does this. Say you type in a few arbitrary words as follows:

$ one space three four


The shell will parse this line into separate fields, where each field is separated by an IFS (Internal Field Separator) character. By default the IFS is set to whitespace (any combination of spaces, tabs and newlines).

Refer to the next diagram.

In a simple command line (no pipes) the shell will regard the first field as the name of a command to run.[2]

All the remaining fields are seen as command line arguments to pass to that command. The shell determines whether it knows the command as a built-in, or an external program on the disk, as found in the first matching directory in the PATH variable.

If the command is a shell built-in, the shell just runs a function within its existing program in memory, with the same name as the built-in command, and passes the arguments from the command line as arguments to the function.

If the command is an external program on the disk (binary or shell script), the shell will perform an exec() system call, specifying the path to the program and the command line arguments within the parentheses of the exec() call. For example, if you type the following command at the shell prompt

$ ls -al


the shell will run code similar to the following:

char *envp[] = { "TERM=linux", "LOGNAME=joe", NULL };

execle("/bin/ls", "ls", "-al", (char *)NULL, envp);


As you can see, the shell has simply given you access to the kernel's exec() system call.
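The parsing and lookup steps described above can be sketched in a few lines of Python. This is a simplified illustration and not how any real shell is implemented: the function name classify and the tiny BUILTINS set are assumptions, and quoting, pipes and variable expansion are ignored.

```python
import shutil

# A small illustrative subset; a real shell has many more built-ins.
BUILTINS = {"cd", "echo", "exit", "export"}


def classify(command_line):
    """Split a simple command line into fields and decide how it would be run."""
    fields = command_line.split()   # split on runs of whitespace, like the default IFS
    if not fields:
        return None
    name, args = fields[0], fields[1:]
    if name in BUILTINS:
        return ("builtin", name, args)
    path = shutil.which(name)       # first match in the directories listed in PATH
    if path is None:
        return ("not found", name, args)
    return ("external", path, args)


if __name__ == "__main__":
    print(classify("one space three four"))
    print(classify("cd /tmp"))
    print(classify("ls -al"))
```

For an external command, the path found in this way and the list of fields are what the shell would hand to exec().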

A new process is always created through an existing process, which then uses the exec() system call to run a program. The kernel keeps track of which process created another. You can use "ps" to show the kernel's process table, or excerpts thereof, to determine a process' process ID (PID) and parent process ID (PPID).

If you run the following command

linux:/ # ps -f

UID PID PPID C STIME TTY TIME CMD

root 4421 4411 0 15:49 pts/7 00:00:00 su

root 4422 4421 0 15:49 pts/7 00:00:00 bash

root 4429 4422 0 15:50 pts/7 00:00:00 ps -f


you will see all the processes started in your current login session. This is just a small excerpt from the kernel's entire process table. Look at the PPID column of the ps command that you ran above. Can you see that the PPID of ps is the same as the PID of your shell process (we'll assume "bash")?

Now make a note of that shell's PPID. Let's try to find its parent (I am assuming that you are in your login shell and that you have not started any sub-shells by hand). Now run the following command:

$ ps -el | less

F S UID PID PPID C PRI NI ADDR SZ WCHAN TTY TIME CMD

0 S 0 1 0 0 75 0 - 155 schedu ? 00:00:04 init

0 S 0 2 1 0 75 0 - 0 contex ? 00:00:00 keventd

0 S 0 3 1 0 94 19 - 0 ksofti ? 00:00:00 ksoftirqd_CPU0

0 S 0 4 1 0 85 0 - 0 kswapd ? 00:00:00 kswapd

0 S 0 5 1 0 85 0 - 0 bdflus ? 00:00:00 bdflush

0 S 0 6 1 0 75 0 - 0 schedu ? 00:00:00 kupdated

0 S 0 7 1 0 85 0 - 0 kinode ? 00:00:00 kinoded

0 S 0 8 1 0 85 0 - 0 md_thr ? 00:00:00 mdrecoveryd

0 S 0 11 1 0 75 0 - 0 schedu ? 00:00:00 kreiserfsd

0 S 0 386 1 0 60 -20 - 0 down_i ? 00:00:00 lvm-mpd

0 S 0 899 1 0 75 0 - 390 schedu ? 00:00:00 syslogd

0 S 0 902 1 0 75 0 - 593 syslog ? 00:00:00 klogd


Look in the PID column for the same number that you saw in your shell's PPID column. The process that you find will be the parent of your shell. What is it? It is the login program. Using the same method as before, now find login's parent. It is "init". Now find the parent of "init". Can you see that "init" has no parent in the process table?

Who or what started "init"? The kernel!

Remember that "init" is the first process run by the kernel at bootup: this behaviour is hard-coded in the kernel. It is the job of "init" to start up various child processes to get the system to a usable state (refer to the bootup section and to the init section).
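The same walk up the process table can be automated. The sketch below assumes a Linux system with /proc mounted and reads the PPid field of /proc/<pid>/status; the function names parent_of and ancestry are made up for this example. It follows the PPID links upwards until it reaches a process whose PPID is 0, which on an ordinary system is "init".

```python
import os


def parent_of(pid):
    """Return the PPID recorded for pid in /proc/<pid>/status (Linux only)."""
    with open(f"/proc/{pid}/status") as status:
        for line in status:
            if line.startswith("PPid:"):
                return int(line.split()[1])
    raise ValueError(f"no PPid field found for process {pid}")


def ancestry(pid):
    """Follow PPID links upwards until a process with PPID 0 is reached."""
    chain = [pid]
    while True:
        ppid = parent_of(chain[-1])
        if ppid == 0:
            return chain
        chain.append(ppid)


if __name__ == "__main__":
    # Prints this process first, then its parent, and so on up the tree.
    print(ancestry(os.getpid()))
```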

What constitutes a process or the properties of a process?

A process consists of:

An entry in the process table

Data areas and so on, including the uarea (which contains the properties of the process)

The properties of a process

A process has many status properties maintained by the kernel, some of which are:

RUID: Numeric real (login) user ID

RGID: Numeric real (login) group ID

EUID: Numeric effective user ID

EGID: Numeric effective group ID

PID: Numeric process ID

PPID: Numeric parent process ID

When a process is started it inherits most of the properties of its parent, such as the real and effective UID/GID values.

Every process also has an environment associated with it. The environment is simply a list of variables. These are passed to a process by its parent process when the exec() system call is made.
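These properties and the inherited environment can be inspected from inside a process. The following is a minimal sketch: the function names process_properties and child_sees are assumptions, as is the variable name used in the demonstration. The child is a second Python interpreter that prints one environment variable and its own PPID.

```python
import os
import subprocess
import sys


def process_properties():
    """Collect the IDs that the kernel keeps for the current process."""
    return {
        "RUID": os.getuid(), "RGID": os.getgid(),
        "EUID": os.geteuid(), "EGID": os.getegid(),
        "PID": os.getpid(), "PPID": os.getppid(),
    }


def child_sees(name, value):
    """Start a child with name=value in its environment; return what it reports."""
    env = dict(os.environ, **{name: value})
    script = (
        "import os, sys; "
        "sys.stdout.write('%s %d' % (os.environ[sys.argv[1]], os.getppid()))"
    )
    out = subprocess.run(
        [sys.executable, "-c", script, name],
        env=env, capture_output=True, text=True, check=True,
    ).stdout
    seen_value, child_ppid = out.rsplit(" ", 1)
    return seen_value, int(child_ppid)


if __name__ == "__main__":
    print(process_properties())
    print(child_sees("LEARN_LINUX_DEMO", "inherited"))  # hypothetical variable name
```

The PPID reported by the child should match the PID of the process that started it, and the variable placed in the environment by the parent is visible to the child without any further effort.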

Every UNIX kernel has one or more forms of the exec() system call. Although we generally refer to "exec()", the exact call names may differ slightly, but they all start with "exec".

On Linux, the kernel's variant of the traditional exec() system call is called execve(2), and its syntax is:

execve(program_file, program_arguments, environment_variables)


The standard C library on Linux provides several different interfaces to the low-level execve() system call, all providing slightly different behaviour. These library calls are:

execl(3)

execlp(3)

execle(3)

execv(3)

execvp(3)

To see the difference between these calls, you can look up the man pages for them in the relevant manual sections.
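The three arguments of execve() can be seen at work in the sketch below, which uses Python's os.execve wrapper. Because a successful exec replaces the calling program, the call is made in a forked child and the output is collected through a pipe. The function name run_with_execve and the GREETING variable are assumptions made for the illustration, and /bin/sh is assumed to exist.

```python
import os


def run_with_execve(program, argv, env):
    """Fork, execve() the program in the child, return (output, exit status)."""
    read_end, write_end = os.pipe()
    pid = os.fork()
    if pid == 0:                          # this branch runs in the child
        try:
            os.close(read_end)
            os.dup2(write_end, 1)         # send the child's standard output to the pipe
            os.execve(program, argv, env) # program file, arguments, environment
        finally:
            os._exit(127)                 # only reached if execve() failed
    os.close(write_end)
    chunks = []
    while True:
        chunk = os.read(read_end, 4096)
        if not chunk:                     # end of file: the child closed its end
            break
        chunks.append(chunk)
    os.close(read_end)
    _, status = os.waitpid(pid, 0)
    exit_code = os.WEXITSTATUS(status) if os.WIFEXITED(status) else None
    return b"".join(chunks), exit_code


if __name__ == "__main__":
    print(run_with_execve(
        "/bin/sh",
        ["sh", "-c", 'printf "%s" "$GREETING"'],
        {"GREETING": "hello"},            # the complete environment of the new program
    ))
```

Note that the environment passed here contains only GREETING: the new program sees exactly the list it is given and nothing else.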

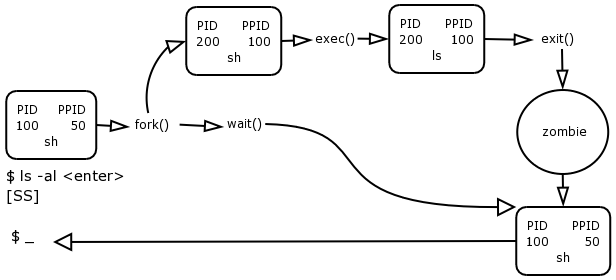

Although the basic way to run a process is through exec(), the whole process creation effort is a bit more involved. When a C programmer wants to start a process there are a few more system calls that will usually be used together with exec(). To better explain this we will look at a process creation example, namely that of running an "ls" command from the shell.

When you type in "ls -al" the shell does the following:

As you hit enter after the "ls -al" command, the shell determines that ls is an external program on the filesystem, namely /bin/ls. It needs to exec() this. What it does first is to issue a fork() system call. It then issues a wait() system call.

The fork() system call performs a type of cloning operation. This gets the kernel to copy an existing process table entry to the next empty slot in the process table.

This effectively creates a template for a new process.

The kernel now assigns a new unique PID to this forked process and updates its PPID to reflect the value of the process that forked it. The forker is called the parent process and the forked process is called the child process.

The child process, which at this point is still a copy of the shell, now issues the exec() system call. The exec() system call gets the kernel to read the ls program off the filesystem on the hard disk and place it into memory, overwriting the calling process, in this case the child shell template.

The PID and PPID entries of the forked child remain the same, but the name of the child process in the process table entry is now updated to the name of the exec'ed program, in this case ls.

The child now runs and, in the case of "ls -al", produces some output to the terminal. Once the child has finished whatever it has to do, it informs the kernel that it has completed by issuing an exit() system call.

The wait() causes the parent to halt execution until the child performs its exit().

The exiting child now falls into a "zombie"* state. The kernel has de-allocated the process memory, but its process table entry still exists. It is the job of the parent to inform the kernel that it has finished working with the child, and that the kernel can now remove the process table entry of the child (currently in the zombie state).[3]

The exit() of the child actually causes the return of the wait() system call, which ends the pausing of the parent process, so that it can now continue running.

It is important to note that every process becomes a zombie for a brief amount of time when it exits, usually a split second, as a part of its natural life cycle.
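The fork and wait part of this sequence can be watched from Python. In the minimal sketch below the child does not exec another program; it only reports its own PID and PPID through a pipe and then exits, so that the relationship between parent and child is easy to see. The function name fork_and_wait and the keys of the returned dictionary are made up for this example.

```python
import os


def fork_and_wait(exit_code):
    """Fork a child, let it report its PID and PPID, then wait for its exit."""
    read_end, write_end = os.pipe()
    child_pid = os.fork()
    if child_pid == 0:
        # Child: a copy of the parent with a new PID of its own.
        os.close(read_end)
        os.write(write_end, f"{os.getpid()} {os.getppid()}".encode())
        os._exit(exit_code)               # the child's exit()
    # Parent: fork() returned the PID of the new child.
    os.close(write_end)
    reported = os.read(read_end, 64).decode().split()
    os.close(read_end)
    waited_pid, status = os.waitpid(child_pid, 0)   # halts until the child exits
    return {
        "child_pid": child_pid,
        "child_saw_pid": int(reported[0]),
        "child_saw_ppid": int(reported[1]),
        "waited_pid": waited_pid,
        "exit_code": os.WEXITSTATUS(status),
    }


if __name__ == "__main__":
    print(fork_and_wait(0))
```

When waitpid() returns, the kernel has handed over the child's exit status and removed the child's entry from the process table.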

Question:

What would happen if the shell omitted the wait()?

Answer:

You would get the shell prompt back straight away, and the child process would continue to run until completed. If the parent shell still exists at that point, it will still receive the exit status from the child process and will still have the task of informing the kernel that the child process is complete, so that the kernel can remove the child's entry from the process table. Until the parent does so, the child stays in the zombie state.
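A zombie can be produced on purpose to see this. The sketch below assumes Linux with /proc mounted; the function names state_of and zombie_demo are made up for the example. The parent uses waitid() with the WNOWAIT flag, which waits for the child to exit but deliberately leaves its process table entry in place, and only afterwards collects the child with waitpid().

```python
import os


def state_of(pid):
    """Return the one-letter state of pid from /proc/<pid>/stat (Linux only)."""
    with open(f"/proc/{pid}/stat") as stat:
        # Layout: pid (command name) state ...; split after the last ')'.
        return stat.read().rsplit(")", 1)[1].split()[0]


def zombie_demo():
    """Create a short-lived zombie, observe it, then let the kernel remove it."""
    pid = os.fork()
    if pid == 0:
        os._exit(0)                       # the child exits at once
    # Wait until the child has exited, but do not collect it yet.
    os.waitid(os.P_PID, pid, os.WEXITED | os.WNOWAIT)
    state_before = state_of(pid)          # the entry is still in the process table
    os.waitpid(pid, 0)                    # now the parent collects the exit status
    still_listed = os.path.exists(f"/proc/{pid}")
    return state_before, still_listed


if __name__ == "__main__":
    print(zombie_demo())
```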

To show the state of a process, use the "-l" option of the ps command.

Example

$ ps -el

F S UID PID PPID C PRI NI ADDR SZ WCHAN TTY TIME CMD

0 S 0 1 0 0 75 0 - 155 schedu ? 00:00:04 init

0 S 0 2 1 0 75 0 - 0 contex ? 00:00:00 keventd

0 S 0 3 1 0 94 19 - 0 ksofti ? 00:00:00 ksoftirqd_CPU0

0 S 0 4 1 0 85 0 - 0 kswapd ? 00:00:00 kswapd

0 S 0 5 1 0 85 0 - 0 bdflus ? 00:00:00 bdflush

0 S 0 6 1 0 75 0 - 0 schedu ? 00:00:00 kupdated

0 S 0 7 1 0 85 0 - 0 kinode ? 00:00:00 kinoded

0 S 0 8 1 0 85 0 - 0 md_thr ? 00:00:00 mdrecoveryd

0 S 0 11 1 0 75 0 - 0 schedu ? 00:00:00 kreiserfsd

0 S 0 386 1 0 60 -20 - 0 down_i ? 00:00:00 lvm-mpd

0 S 0 899 1 0 75 0 - 390 schedu ? 00:00:00 syslogd


Look for the column heading "S" (it is the second column). The symbols in this column have the following meanings:

| symbol | meaning |
|---|---|
| S | sleeping |
| R | running |
| D | waiting (usually for I/O) |
| T | stopped (suspended) or traced |
| Z | zombie (defunct) |
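The state symbols can also be read directly from /proc on Linux, which is where ps gets them. The sketch below is an illustration under that assumption; the names STATE_MEANINGS, describe_state and stopped_child_state are made up. It forks a child that suspends itself with SIGSTOP, looks up the child's state, and then kills and collects the child.

```python
import os
import signal

# The symbols from the table above.
STATE_MEANINGS = {
    "S": "sleeping",
    "R": "running",
    "D": "waiting (usually for I/O)",
    "T": "stopped (suspended) or traced",
    "Z": "zombie (defunct)",
}


def describe_state(pid):
    """Return (symbol, meaning) for pid, read from /proc/<pid>/stat (Linux only)."""
    with open(f"/proc/{pid}/stat") as stat:
        code = stat.read().rsplit(")", 1)[1].split()[0]
    return code, STATE_MEANINGS.get(code, "other")


def stopped_child_state():
    """Fork a child that suspends itself and report the state the kernel shows."""
    pid = os.fork()
    if pid == 0:
        os.kill(os.getpid(), signal.SIGSTOP)   # the child suspends itself
        os._exit(0)
    os.waitpid(pid, os.WUNTRACED)              # returns once the child has stopped
    state = describe_state(pid)
    os.kill(pid, signal.SIGKILL)               # clean up the suspended child
    os.waitpid(pid, 0)
    return state


if __name__ == "__main__":
    print(stopped_child_state())
```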

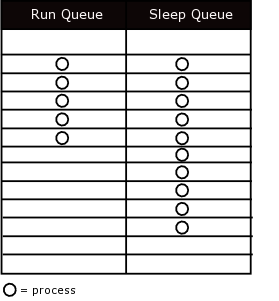

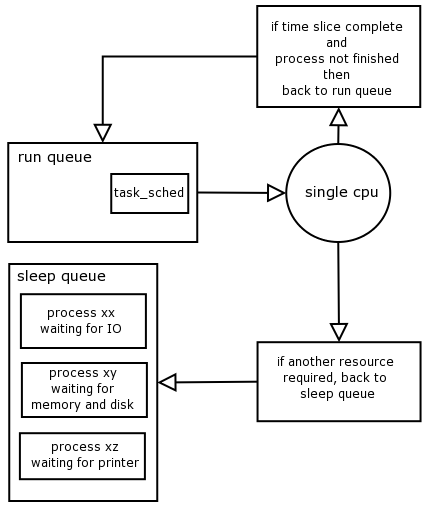

The scheduler is a service provided by the kernel to manage processes and the fair distribution of CPU time between them.

The scheduler is implemented as a set of functions in the kernel. On Unix System V**, the scheduler is represented in the process table as a process named sched, with a PID of 0. Linux does not indicate the scheduler in this way. Even on System V this serves no practical purpose, as not even the root user can manipulate the scheduler by sending it signals with the kill command.[4]